BenQ said:

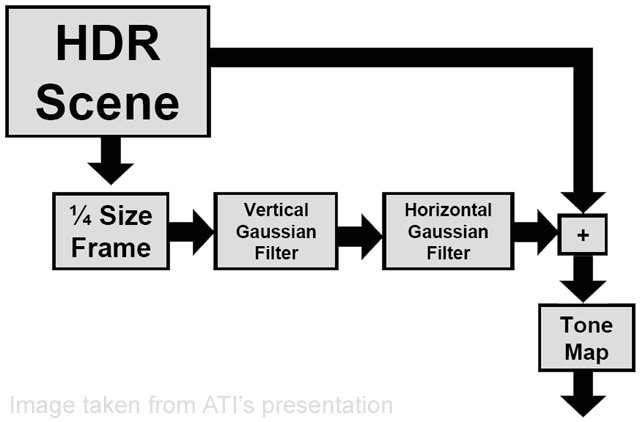

So HDR uses something called tone mapping because the "real" range of light is far more than any PC monitor can display. So they use tone mapping to ..... bah I'm still confused....

If you know anything about photography, this is easy to understand. The idea is to store brightness information with a very wide range, like real-world illumination, and then scale the output to show the range of detail you want on the display. You can scale the low values in the shadows so they run from black to white, in which case anything brighter gets whited out (overexposed). Or you can scale the image so only the very brightest areas, like the sun itself, are white and everything else is much darker. The result responds to light the way a real camera does in cinematography.
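To make the scaling idea concrete, here's a rough sketch of the simplest possible tone mapping: divide by a chosen white point and clip. The function name `tone_map`, the `white_point` parameter and the sample luminance values are all made up for illustration; real games use fancier curves than a plain linear scale.

```python
def tone_map(luminance, white_point):
    """Scale scene luminance so white_point maps to 1.0 (display white).

    Anything brighter than white_point is clipped to 1.0, i.e. overexposed.
    This linear scale-and-clip is an assumed, simplified operator.
    """
    return [min(max(value / white_point, 0.0), 1.0) for value in luminance]


# Hypothetical relative luminances: deep shadow, dim wall, bright sky, the sun.
scene = [0.01, 0.5, 10.0, 1000.0]

# Expose for the shadows: the dim wall becomes white, everything brighter clips.
shadows = tone_map(scene, white_point=0.5)

# Expose for the sun: only the sun is white, everything else is very dark.
highlights = tone_map(scene, white_point=1000.0)

print(shadows)
print(highlights)
```

Same scene data, two different "exposures": that's what the wide-range source buys you.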

Also can you explain the differences between fp8 ( if it exists ) fp10, the "special" fp10 mode in Xenos and fp16?

There's no such thing as fp8. In an fpxx format, the xx is the number of bits used to represent a number, so fp10 uses 10 bits. Those bits are divided into a mantissa and an exponent, which in plain English means a fractional number like 3.421 and a multiplier that's 2 to the nth power.

In the case of fp10, I think there are 7 mantissa bits and 3 exponent bits, but I'm fuzzy. Basically, the bigger the fp number, the more accurate you are, but the more memory you need to store the information and the slower your calculations (though maybe only above fp16?). Accuracy is needed when blending, etc., to eliminate artefacts.
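Here's a toy sketch of how a mantissa and exponent combine into a value, and why more mantissa bits means more accuracy. It assumes an unsigned layout with an implicit leading 1 and no special cases, which is a simplification and not necessarily how Xenos actually encodes fp10. The names `decode` and `relative_step` are hypothetical. The 10 mantissa bits used for fp16 are the standard half-float layout (1 sign, 5 exponent, 10 mantissa).

```python
def decode(mantissa, exponent, mantissa_bits, bias=0):
    """Toy decode: (1 + mantissa / 2**mantissa_bits) * 2**(exponent - bias).

    Assumes an implicit leading 1 and no sign, denormals, or infinities.
    """
    return (1 + mantissa / 2 ** mantissa_bits) * 2 ** (exponent - bias)


def relative_step(mantissa_bits):
    """Gap between neighbouring values, relative to the value itself."""
    return 2.0 ** -mantissa_bits


# With 7 mantissa bits, mantissa 64 is the fraction 0.5, so this is 1.5 * 2**1.
print(decode(mantissa=64, exponent=1, mantissa_bits=7))

# Precision comparison: 7 mantissa bits (fp10 as guessed above) vs 10 (fp16).
print(relative_step(7))
print(relative_step(10))
```

The smaller the relative step, the less banding you get when lots of blended layers pile up rounding errors.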