VFX_Veteran

Regular

But that's not true. Nvidia's Marbles demo proved that other graphics engines can easily use pre-tessellated static geometry without the need for an SSD.

Did I say that GPU power doesn't count? I'm just saying that without an SSD it is impossible to have this level of detail, and after that it will be impossible to fully reach this level because of storage size.

Small hint: the demo renders at 30 fps because of Lumen, not Nanite. They are very confident they can reach 60 fps, but if the PS5 GPU were more powerful they would be able to hit 60 fps without optimization, or 60 fps at a higher resolution.

Yeah, that's why I say the GPU will reach its limit before the SSD will.

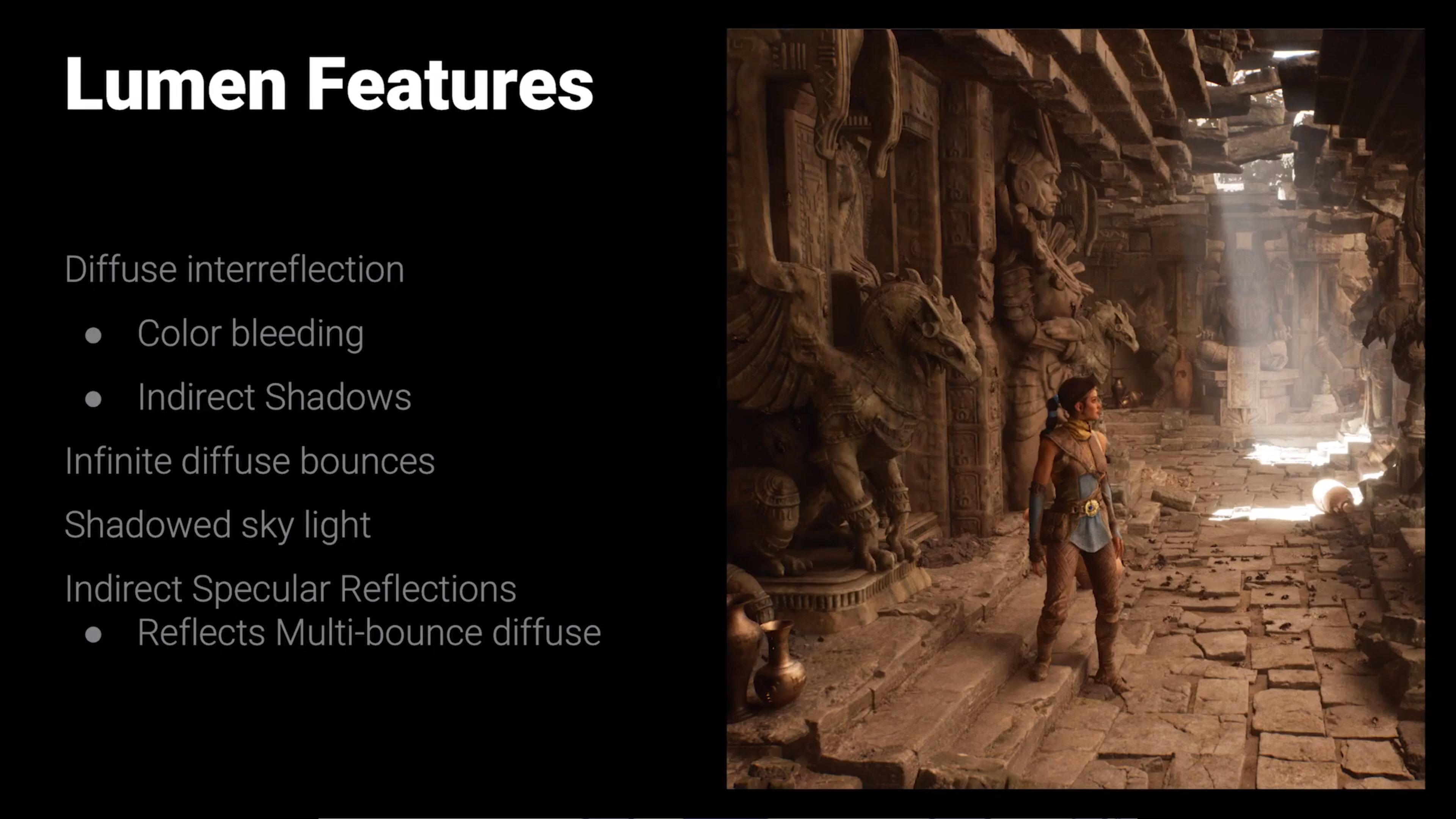

Lumen is still using the old tried-and-true pre-baked lighting setup or a derivative of it. It doesn't have the accuracy of RT, and it shows in the demo. The theme of this new generation is RT. The transformation in lighting is way more important IMO than static, highly detailed objects that can't interact with the scene. I do like not having to bake out normal maps though. Thankfully, UE can do Lumen or the more accurate RT lighting.

As an aside, this UE5 demo tech isn't going to show up in any of the Sony 1st-party games. I'm told that all the 1st-party studios are making their own tech, and it could be better or worse.