All Gen 9 graphics have been moved to legacy driver support. That covers 6th- through 10th-gen CPUs, along with the related Atom, Pentium and Celeron chips.

www.intel.com

As a side note, that is a 1.1GB driver download! It's like two Windows XPs, lol.

www.intel.com

Intel® Graphics – Windows* DCH Drivers

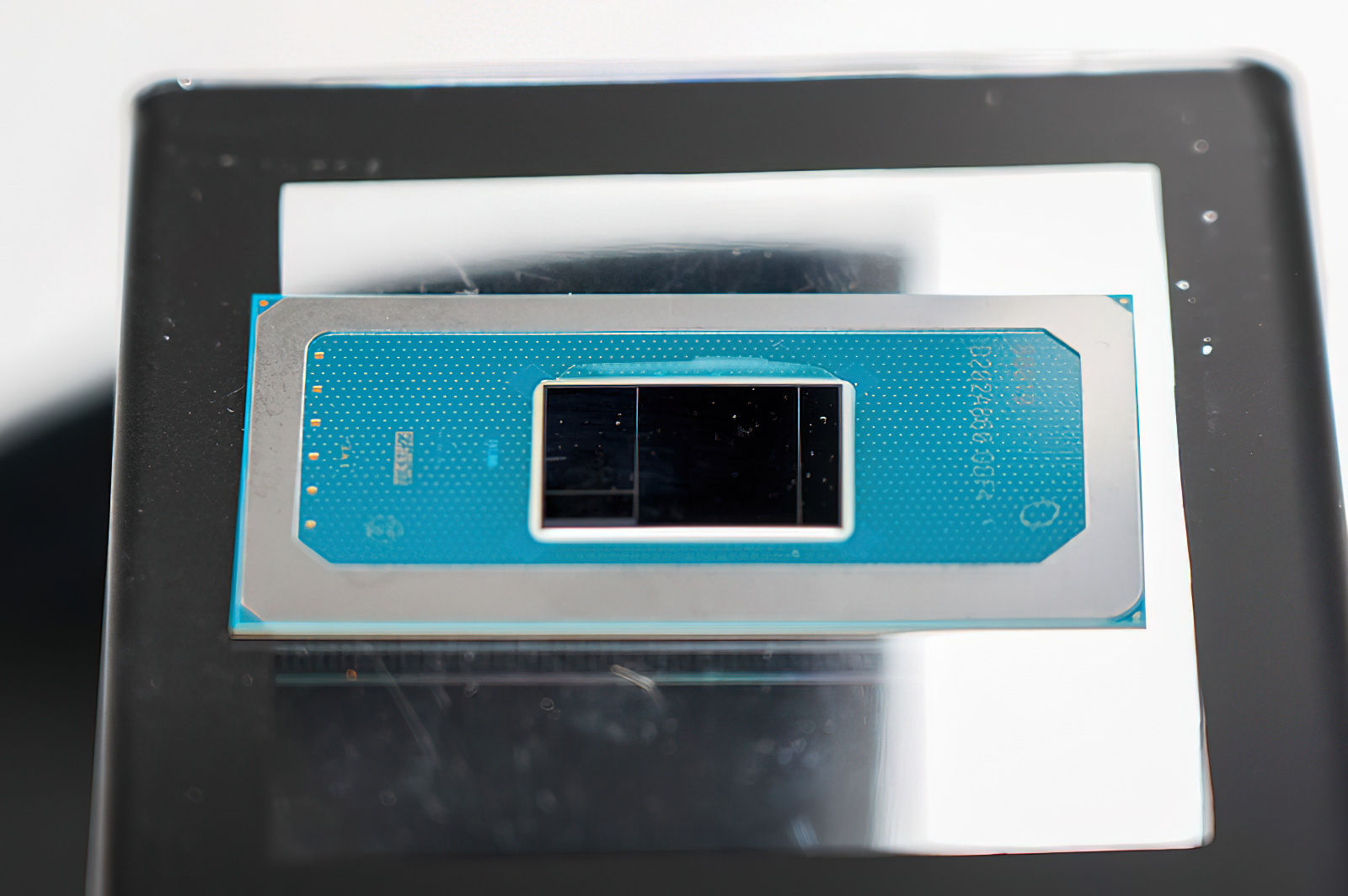

This download installs Intel® Graphics Driver 31.0.101.3790/31.0.101.2114 for Xe Dedicated, 6th-13th Gen Intel® Core™ Processor Graphics, and related Intel Atom®, Pentium®, and Celeron® processors. Driver version varies depending on the Intel Graphics in the system.