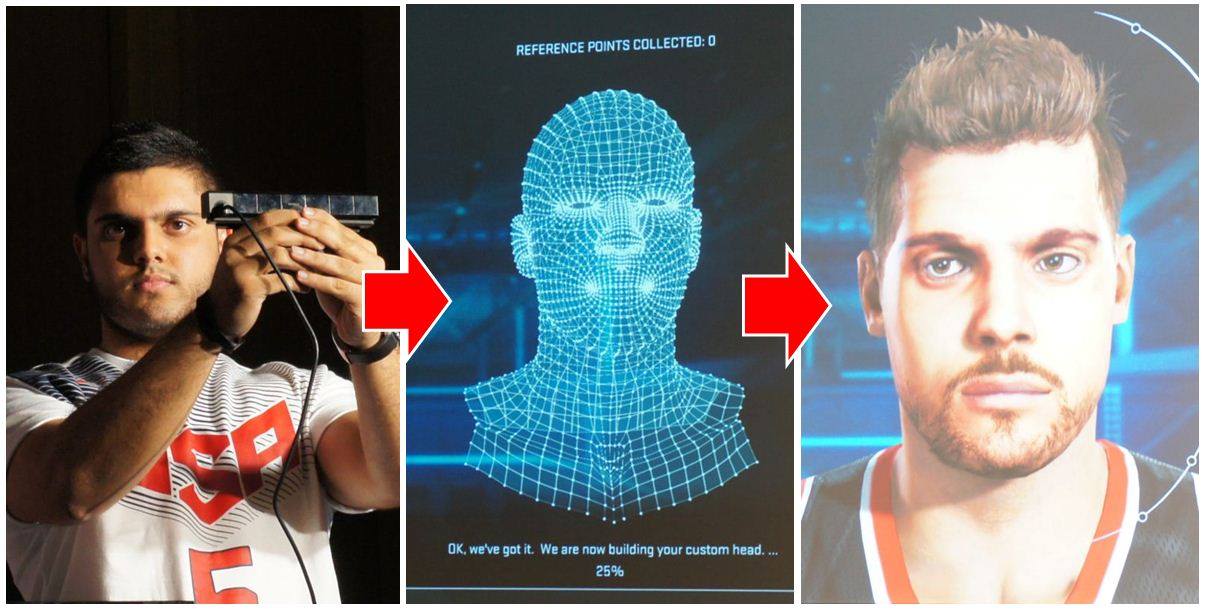

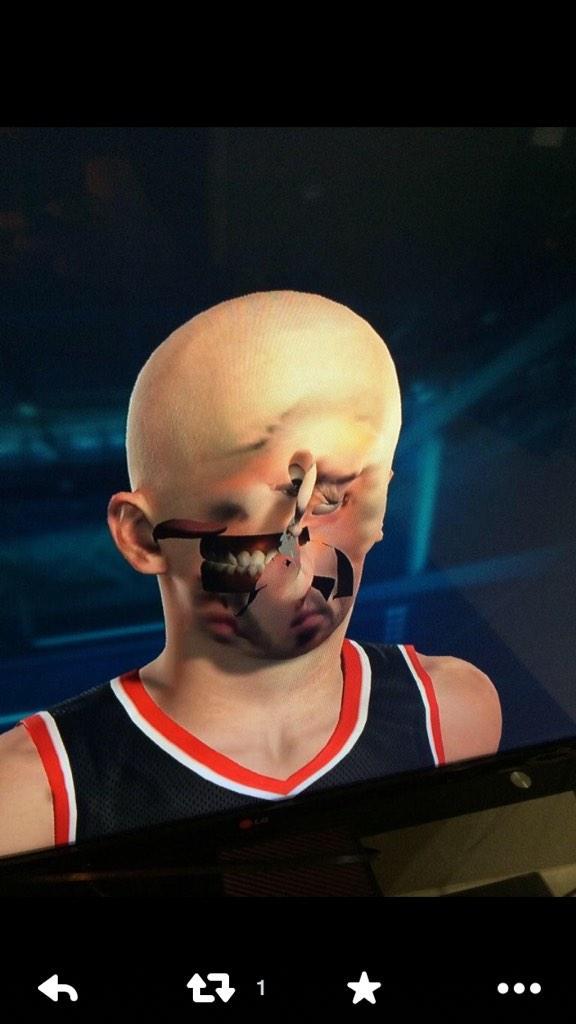

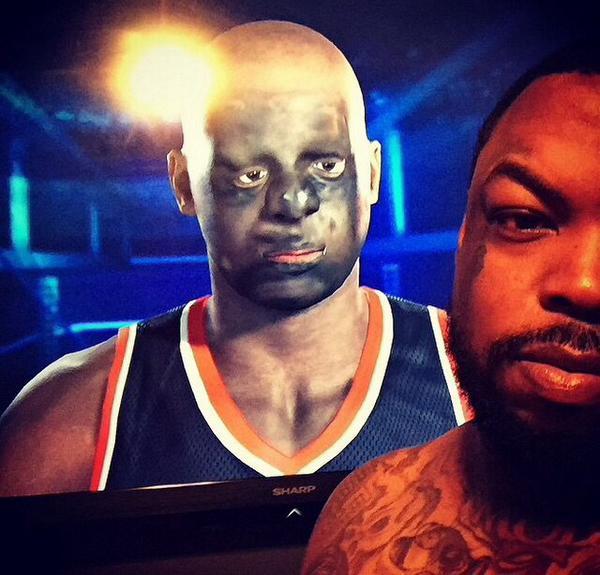

Something I have noticed while looking at all the NBA 2K15 face scans & reading the comments is that most of the scans that came out right are from the PS4, and I have yet to see a good scan from the Xbox One. Maybe it's a software issue, but it seems that the bad scans from the PS4 camera are user error: after getting better instructions, people are able to get good scans. With Kinect, no one has figured out how to make it work right.

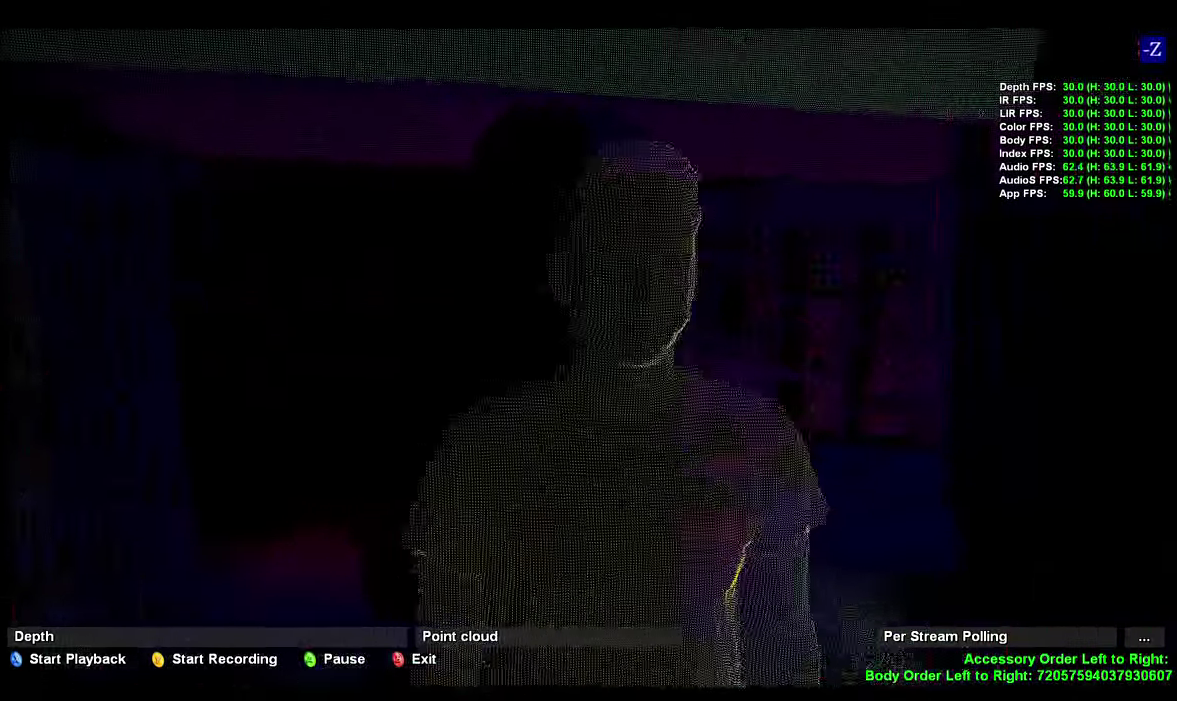

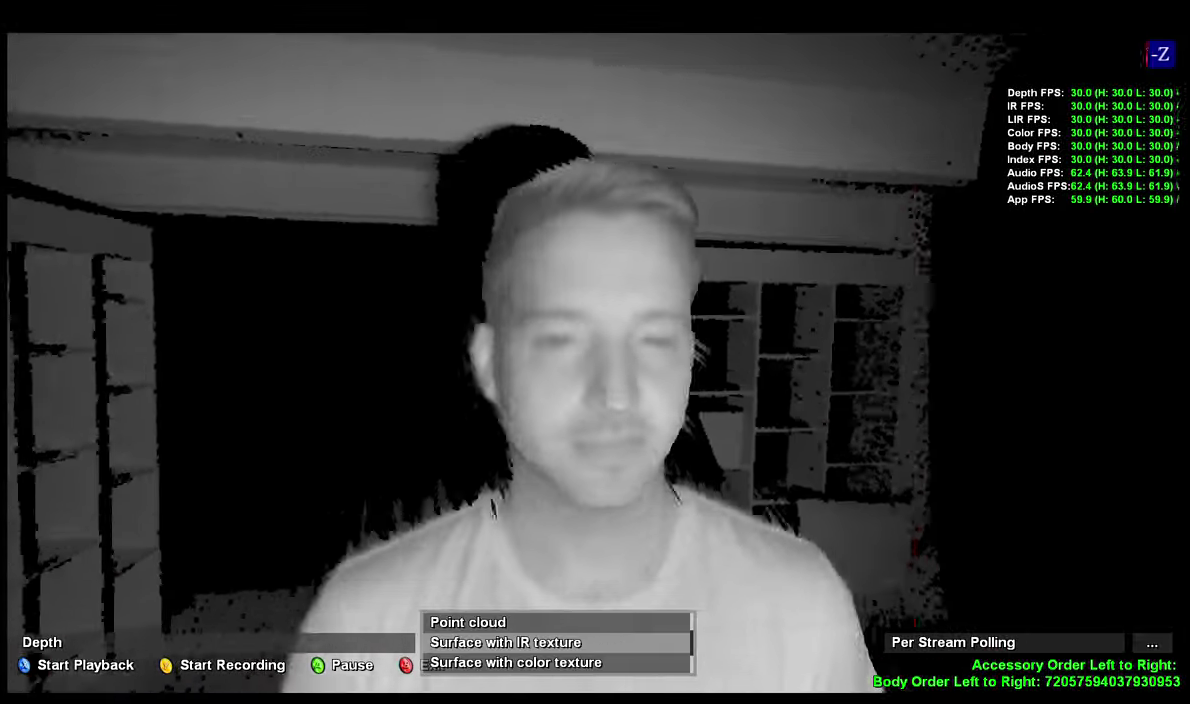

If I had to guess, I would say it's because Kinect 2.0 isn't able to do 3D scanning at close range, so it causes problems when people hold the camera close to their face. There is also the fact that the PS4 camera's 3D resolution is 1280 x 800, while Kinect 2.0's 3D (depth) resolution is 512 x 424 and its RGB camera is 1920 x 1080. Matching up a depth image and a color image of different resolutions might be harder to calculate than using 2 of the same cameras for depth and color.

If I had to guess I would say it's because Kinect 2.0 isn't able to do 3D scanning at close range so it's causing problems when people try to hold the camera close to their face. Also there is the fact that PS4 camera's 3D is 1280 x 800 while Kinect 2.0's 3D is 512×424 while it's RGB camera is 1920 x 1080 which might be harder to calculate than using 2 of the same cameras for depth and color.