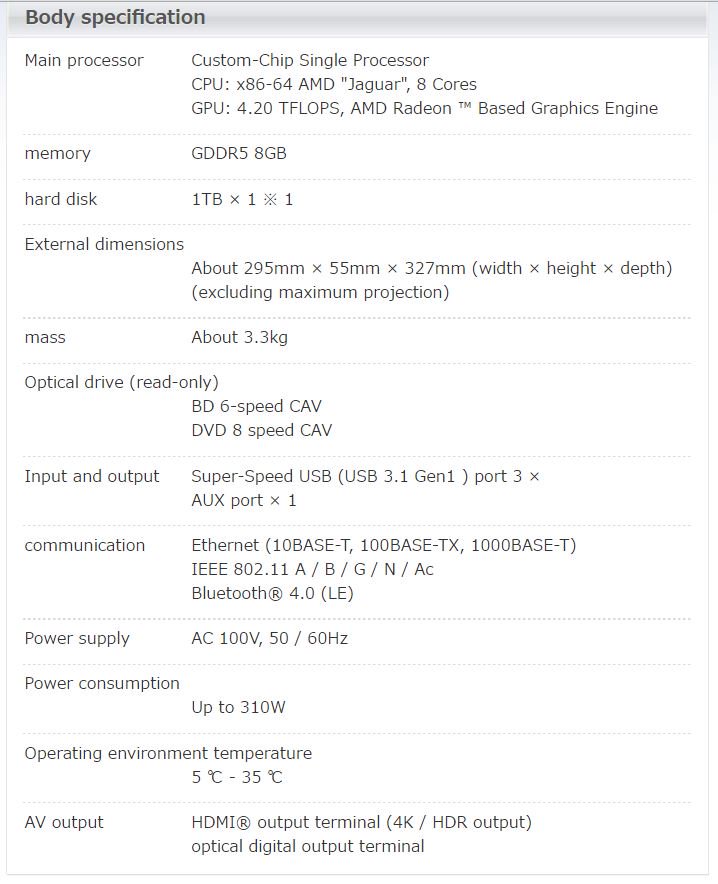

Where the extra 60W over the launch PS4 comes from is an item of curiosity for me.

A little over is something I could understand, but what would take it that much over?

My earlier assumptions were premised on something comparable to the 14nm Polaris 10 GPU, which has certain assumptions of its own built in. With the Pro described as an APU that has elements taken from Polaris, there are several potential assumption-breakers: the process, the GPU architecture, APUs not always achieving optimal implementations for both the CPU and GPU regions, possibly restricted turbo/downclocking for consistency reasons, and yield tolerances, given that one can be binned more aggressively than the other.

That is a lot of assumptions, granted, but it seemed plausible to fit that, plus the share of power consumers besides the GPU+GDDR5, without needing another 60W. Some items in the system were bumped, like the number of potentially powered USB ports, but that contribution seems too modest.
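To put a rough number on the USB point, here is a minimal sketch. It assumes each extra port is a USB 3.0 standard downstream port, which the spec limits to 900 mA at 5 V. The count of extra ports is a placeholder argument, not a confirmed figure, and the function names are mine. It also ignores PSU conversion losses, so treat it as a ballpark only.

```python
# Rough ceiling on what extra powered USB ports could add to the budget.
# Assumption: each port is a USB 3.0 standard downstream port (5 V, 900 mA max).
USB_VBUS_VOLTS = 5.0
USB3_MAX_CURRENT_AMPS = 0.9


def usb_port_budget_w(extra_ports):
    """Worst-case watts reserved for the given number of extra USB ports."""
    return extra_ports * USB_VBUS_VOLTS * USB3_MAX_CURRENT_AMPS


def share_of_delta(contribution_w, delta_w=60.0):
    """Fraction of the power delta (60 W in this thread) explained by one item."""
    return contribution_w / delta_w


if __name__ == "__main__":
    extra_ports = 1  # hypothetical count, adjust to taste
    watts = usb_port_budget_w(extra_ports)
    print(f"{extra_ports} extra port(s): up to {watts:.1f} W, "
          f"{share_of_delta(watts):.1%} of the 60 W delta")
```

Even with the port fully loaded, one extra port covers well under a tenth of the gap, which is why it looks too modest to matter much.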

I'm still not sure how much Neo was able to derive from Orbis. Some of the discussion of it being Polaris "and beyond" might actually reflect the persistence of the Tensilica block that Polaris dumped. Possibly, items like compression and front-end changes could move into a revision of Orbis without overly complicating matters.

I'm not sure about the GCN CUs themselves or the ISA, since those do not appear to mesh well with the PS4 IP level.