It was far more than 10%

http://www.anandtech.com/show/2977/...x-470-6-months-late-was-it-worth-the-wait-/19

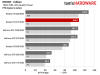

And that came 6 months after the 5870 hit the market. The GTX 480 consumed roughly 60-100% more power than the 5870. I can't remember which site measured power draw through the PCIe slot and the power connectors back then, but it had more detailed power usage breakdowns. In some games the GTX 480 was faster, in others it was slower.
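To put that power gap in perf/watt terms, here is a rough sketch. It assumes the two cards were about even in performance overall (faster in some games, slower in others), so a relative performance of 1.0 is an assumption, not a measurement. Under that assumption, the 60-100% extra power alone sets the GTX 480's relative perf/watt. The function name is just for illustration.

```python
def relative_perf_per_watt(rel_perf: float, rel_power: float) -> float:
    """Perf/watt of card A relative to card B, where rel_perf and rel_power
    are A's performance and power draw expressed as ratios of B's."""
    return rel_perf / rel_power


# Assumption: GTX 480 performance is roughly equal to HD 5870 performance (ratio 1.0).
# Power ratios come from the 60-100% extra power range quoted above.
for extra_power in (0.60, 1.00):
    ratio = relative_perf_per_watt(1.0, 1.0 + extra_power)
    print(f"{extra_power:.0%} more power -> {ratio:.3f}x the perf/watt")
```

At roughly equal performance, that works out to somewhere between half and five-eighths of the 5870's perf/watt.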

Fermi was just a really bad chip compared to AMD's cards at the time, unless you absolutely needed compute. Of course, back then the line from most Nvidia users on this very forum was that perf/watt wasn't important. It's interesting how times have changed.

It wasn't until Nvidia started to castrate compute on their consumer cards that they caught up to AMD in perf/watt again. And with the last generation, the roles were reversed, with Nvidia having far greater perf/watt.

So, while it may seem impossible for AMD to catch up to or even surpass them, it's never impossible. It's certainly unlikely, and until they do it, people shouldn't claim they will. But it's also not wise to say they can't. In general, a huge disparity between AMD (ATI) and Nvidia is pretty rare. The 9700 Pro versus the GeForce FX 5800 was one such occasion. The GeForce 8xxx series versus the ATI 2xxx series was another. The AMD 5xxx series versus the GeForce 4xx series was another. And now the GeForce 9xx and 10xx series versus the AMD parts. Otherwise, things have generally been pretty close between the two.

Regards,

SB

Perf/watt

The HD 4870 wasn't like that, and yeah, the HD 5870 did, correct. But even that gap wasn't as large as this one; it was the price of those cards that really hurt nV. Fermi had problems out of the gate, hence the 6-month delay. And I have always said that if you EVER see more than a quarter's difference between card launches from the two IHVs, you'd better get ready for disappointment: something went wrong. Still, the 5870 series started to lose traction soon after Fermi V2's release, in which those problems were rectified somewhat.

Guess what Vega is.....

And tapeouts and all the other stuff don't matter, because in recent history both of these companies have ALWAYS launched within a quarter of each other, since they prepare to do so. The only times they couldn't were when they knew they couldn't match something and it would hurt them.

PS: keep this in mind. Vega taped out in Q2 of this year, so why is it taking 3 or more quarters for it to come out? Why wasn't Vega on the same schedule as Polaris? Was AMD not interested in going into the performance or enthusiast segment? The performance segment is by far the largest segment by volume and overall profits.

Have we so soon forgotten the reasoning AMD gave for Polaris's launch? (How about the R600, the FX series, Fermi V1, Fiji?) In those cases we now know why they took longer to come out: something didn't go right. Every time one of these companies has to give a reason for a product that lands more than 2 quarters behind a competitor's, that reason is most likely BS, and the underlying cause has been "we are F'ed".

Then look at multiple design teams. Usually, when you have multiple design teams, one team works on what is coming out soon and its iterations, and the second team works on future architectures that won't see the light of day for a while. Yet here we see the same design team working on Vega and Polaris with a staggered release, which is something we have never seen before if the two are truly that different. We all know the most these companies can do when fast-tracking a project like this is pull it in by a quarter, that's it. They can't move mountains and push up the timetables of future products of this complexity.

So let's say Vega was fast-tracked. That would mean that, as of Polaris's release, AMD was not expecting to enter the performance market against nV for a year plus another quarter? Does that sound remotely possible, to give away so much money and an entire market segment for essentially a whole generation? That is a lot of money, around 5 billion dollars, to just say "we weren't interested, so we didn't plan for it." All the while, they were so in tune with low-level APIs (LLAPIs), yet they couldn't plan future products that supported LLAPIs better than their competition's? I see a disconnect there if that was the case.

One more thing to add: whenever either IHV had a delay in current products, that never changed the timetables of future products, so we can't say Fiji's delay had anything to do with Vega's delay, because they are not bound to one another.