Next Generation Hardware Speculation with a Technical Spin [post E3 2019, pre GDC 2020] [XBSX, PS5]

- Thread starter: DavidGraham

- Tags: john sell

- Status: Not open for further replies.

> This is a benefit to HFR that I never even considered.

We had this exact discussion in another thread, but good to see it verified by sebbbi here.

> That shouldn't be the case at all. GPU commands are dispatched and dealt with by the hardware schedulers. No code should have any idea what the CU configuration is and that should never impact whether code runs or not.

I think some of the leaks indicated there were some lower-level API commands for the Xbox One that allowed developers to tweak items like CU allocation patterns. It's possible there are other low-level settings, like reserving a certain number of CUs for one part of the workload or allowing the GPU to allocate as many as it can for a given shader type.

It's possible there are synchronization points that were coded with certain assumptions about how many CUs could churn through the workload before reaching a barrier, or intermediate targets whose code assumed but did not enforce a certain number of simultaneous wavefronts or workgroups. Counters and CU masks might have had issues if their values are being driven higher than expected, or the hardware's doubling means there are bit masks or values that are ambiguous or incomplete with more CUs.

Within a workgroup, this may not matter as much, although there may be instances where a CU ID could be accessible to code and might give an unexpected value on a double-width GPU.

> Just as there's advantage in faster, fewer CPU cores than more, slower cores, is there a case for GPU workloads, especially compute, where higher clocks and less parallelism is better? Perhaps they chose 2GHz and fewer CUs because they can, for the same total throughput as 1.5 GHz and 4/3 times as many CUs but faster individual thread (warp) execution. Also the schedulers and everything else will be running that much faster. [/theory]

Higher clocks, absent downsides like power consumption and feeling memory latency more acutely, would be a more generally useful value to scale, since additional clock speed benefits both serial and parallel algorithms.

Fixed-function elements would benefit, and the geometry portion of the pipeline is significantly less parallel than the pixel processing portion. The work the geometry portion does precedes the amplification of work items that becomes the pixel back-end, so it has fewer wavefronts to absorb latency, and hiccups with the primitive stream or the FIFOs in the geometry processor translate into many more pixels whose launch is delayed.

With primitive shaders, there's additional math and conditional evaluation inserted into the shaders, and while it may save wasted work later it's additional serial execution up-front. There may be some elements like workgroup processing mode, the faster spin-up, and narrower SIMD of wave32 that may help push individual workgroups through faster.

Backwards compatibility might be another area, although the alleged clocks match existing hardware. It's not clear at this point if there are elements that need to be maintained for backwards compatibility that might be slower on Navi. Also unclear is whether there's some overhead from emulating elements that Navi dropped (certain branch types, skip instructions, shifts), even as it restored Sea Islands encodings for a wide swath of others.
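The clock-versus-width trade-off raised in this post can be put into rough numbers. The sketch below assumes 64 shader lanes per CU and counts a fused multiply-add as two FLOPs. The 36 CU and 48 CU configurations are hypothetical, chosen only because 48 is 4/3 of 36, and the one-million-cycle dependent chain is an arbitrary illustration of serial work.

```python
LANES_PER_CU = 64    # shader lanes per compute unit (assumed, GCN/RDNA style)
FLOPS_PER_LANE = 2   # one fused multiply-add per clock counts as two FLOPs


def peak_tflops(cus, clock_ghz):
    """Theoretical peak FP32 throughput in TFLOPS."""
    return cus * LANES_PER_CU * FLOPS_PER_LANE * clock_ghz / 1000.0


def serial_time_us(dependent_cycles, clock_ghz):
    """Time in microseconds for a chain of dependent cycles that cannot be parallelised."""
    return dependent_cycles / (clock_ghz * 1000.0)


# Hypothetical configurations: fewer, faster CUs versus 4/3 as many slower CUs.
narrow_fast = peak_tflops(36, 2.0)
wide_slow = peak_tflops(48, 1.5)

# The same serial chain finishes sooner on the higher-clocked part.
chain_fast = serial_time_us(1_000_000, 2.0)
chain_slow = serial_time_us(1_000_000, 1.5)

print(f"36 CUs @ 2.0 GHz: {narrow_fast:.3f} TFLOPS, serial chain {chain_fast:.0f} us")
print(f"48 CUs @ 1.5 GHz: {wide_slow:.3f} TFLOPS, serial chain {chain_slow:.0f} us")
```

Both configurations land on the same peak figure, while the serial chain takes about a third longer at the lower clock. This ignores power, memory latency, and occupancy effects entirely.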

Silent_Buddha

Legend

Yes, exactly as I'd mentioned in another thread. What you lose in spatial resolution you more than regain through temporal resolution. Since most games have motion of some sort in them (especially if the camera moves), temporal resolution is far more important than spatial resolution, IMO.

> This is a benefit to HFR that I never even considered.

I'm hurt as I'd mentioned this before.

This basically means that temporal reconstruction gets better and better the higher the frame rate is. Hence 30 Hz is pretty horrible for temporal reconstruction while 60 Hz is the bare minimum for acceptable quality, IMO. And as Sebbbi mentioned, 120 Hz would be a really good place to be for temporal reconstruction to really shine.

Regards,

SB
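A rough way to see why temporal reconstruction improves with frame rate: the same on-screen motion is cut into smaller per-frame steps, and a fixed-length sample history covers a shorter span of time. The 960 px/s pan speed and the 8-frame history length below are illustrative assumptions, not figures from the thread.

```python
def per_frame_motion_px(speed_px_per_s, fps):
    """Pixels an object moves between two consecutive frames."""
    return speed_px_per_s / fps


def history_age_ms(history_frames, fps):
    """Age in milliseconds of the oldest sample in an accumulation history."""
    return 1000.0 * history_frames / fps


PAN_SPEED = 960      # px/s, assumed camera pan speed
HISTORY_FRAMES = 8   # assumed length of the accumulation history

for fps in (30, 60, 120):
    step = per_frame_motion_px(PAN_SPEED, fps)
    age = history_age_ms(HISTORY_FRAMES, fps)
    print(f"{fps:>3} Hz: {step:4.0f} px per frame, oldest sample {age:5.1f} ms old")
```

Smaller steps mean reprojected history is more likely to still be valid, and fresher samples mean less of it has to be rejected.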

cheapchips

Veteran

So for a 60Hz display, would you still render at 120fps and have more data for reconstruction?

Deleted member 13524

Guest

> So for a 60Hz display, would you still render at 120fps and have more data for reconstruction?

I think if you simply joined two frames of a 120Hz flow into one for 60Hz you'd only blur things out, not bring any more detail.

If you have a 120Hz panel then with 1440p + temporal you'll get 120Hz motion and 4K "perception".

Otherwise if you have a regular 60Hz panel you're probably better off running at true 4K because the performance demands between 1440p120 and 4k60 might be similar.
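As a quick sanity check on the claim that 1440p120 and 4K60 have similar demands, raw pixel throughput can be compared directly. This is only a sketch: it counts shaded pixels per second and ignores CPU cost, geometry work, and the reconstruction pass itself.

```python
def pixels_per_second(width, height, fps):
    """Raw number of pixels rendered per second at a given resolution and frame rate."""
    return width * height * fps


qhd_120 = pixels_per_second(2560, 1440, 120)
uhd_60 = pixels_per_second(3840, 2160, 60)
ratio = qhd_120 / uhd_60

print(f"1440p @ 120 fps: {qhd_120:,} px/s")
print(f"4K    @  60 fps: {uhd_60:,} px/s")
print(f"ratio: {ratio:.2f}")
```

By this measure 1440p120 needs roughly 89% of the pixel rate of 4K60, so the two are indeed in the same ballpark.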

Silent_Buddha

Legend

> So for a 60Hz display, would you still render at 120fps and have more data for reconstruction?

I'm not sure at that point. That basically means that you accumulate 2 120 Hz "frames" - display the result, accumulate another 2 120 Hz "frames" - display the result, etc. I don't think that would be better than just rendering 2 temporally different 60 Hz frames. Temporally different in this case meaning that they aren't just sequentially different (I may not be using the correct terminology here).

Regards,

SB

Accumulating two 120Hz frames into one 60Hz frame would create ghosting artifacts, unless they do uneven timestepping between frames, keeping them both within the bounds of a 60Hz render with a reasonable shutter speed for its motion blur. In that case, 60Hz monitors would essentially get supersampled motion blur, which sounds so high fidelity my legs shake like I'm a high school freshman girl being asked to prom by Jeff, a senior and team captain of the school's winning football team.

Would that high fidelity motion blur offer more or less detail than your prom fantasy just did?

> Would that high fidelity motion blur offer more or less detail than your prom fantasy just did?

Definitely more. A 4k60 game will look way sharper than my night with Jeff, which would all seem like a blur. Time would feel like it's passing both fast and in slow motion simultaneously. It's difficult to describe. But in a game, that would be considered stuttery and bad for gameplay, so more detail.

https://semiengineering.com/dram-tradeoffs-speed-vs-energy/

That's an interesting metric: GB/s per mm of die edge.

HBM2E = 60GB/s per mm

GDDR6 = 10GB/s per mm

LPDDR5 = 6GB/s per mm

Assuming currently available speeds (HBM2E at 410GB/s per stack, GDDR6 at 16Gbps, LPDDR5 at 6400Mbps), it gives an idea of how much edge space is consumed to fit a certain width of memory.

2 HBM2E stacks = 14mm

256bit GDDR6 = 51mm

384bit GDDR6 = 77mm

256bit LPDDR5 = 34mm

Basically, without interposers or fanout tricks, it's about 12 connections per mm.

While GDDR5 isn't mentioned, from the signals list it had about 20% fewer lines required per chip than GDDR6. So this gen would be:

256bit gddr5 = 41mm

384bit gddr5 = 62mm

There has to be enough space left for pcie channels and all the rest other than the memory.

As an example, with a wild guess of 75% of the edge for memory and 25% for everything else, and a chip of 360mm2 (19mm x 19mm, total edge space is 76mm, so about 57mm for memory), 384bit would not fit, 320bit would be borderline. It also becomes clear that having a split memory could only work using HBM for one of them.

> If you have HBM2E, you can get on the order of 60+ gigabytes per second per millimeter of die edge. You can only get about a sixth of that for GDDR6. And I can only get about a tenth of that with LPDDR5. So if you have a very high bandwidth requirement, and you don't have a huge chip, you don't have a choice. You're going to run out of beachfront on your SoC if you put down anything but an HBM interface.
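The edge-length figures in this post can be reproduced with a few lines of arithmetic. The sketch below uses the per-millimetre densities and speeds quoted in the post. The function names are made up for illustration, and the pin rate is left as a parameter because the 10GB/s per mm figure for GDDR6 may correspond to a different speed grade than 16Gbps.

```python
# Bandwidth density along the die edge, in GB/s per mm, as quoted in the post.
GBPS_PER_MM = {"hbm2e": 60.0, "gddr6": 10.0, "lpddr5": 6.0}


def bus_bandwidth_gbs(bus_bits, pin_rate_gbps):
    """Total bandwidth in GB/s of a bus with the given width and per-pin data rate."""
    return bus_bits * pin_rate_gbps / 8.0


def edge_mm(bandwidth_gbs, memory):
    """Die edge length in mm needed to carry that bandwidth for a memory type."""
    return bandwidth_gbs / GBPS_PER_MM[memory]


hbm_two_stacks = edge_mm(2 * 410.0, "hbm2e")
gddr6_256 = edge_mm(bus_bandwidth_gbs(256, 16.0), "gddr6")
gddr6_384 = edge_mm(bus_bandwidth_gbs(384, 16.0), "gddr6")
lpddr5_256 = edge_mm(bus_bandwidth_gbs(256, 6.4), "lpddr5")

# Wild-guess budget from the post: 75% of the perimeter of a 19mm x 19mm die.
die_side_mm = 19.0
memory_budget_mm = 0.75 * 4 * die_side_mm
fits_384 = gddr6_384 <= memory_budget_mm

print(f"2 HBM2E stacks: {hbm_two_stacks:.1f} mm")
print(f"256bit GDDR6:   {gddr6_256:.1f} mm")
print(f"384bit GDDR6:   {gddr6_384:.1f} mm")
print(f"256bit LPDDR5:  {lpddr5_256:.1f} mm")
print(f"memory budget:  {memory_budget_mm:.1f} mm, 384bit GDDR6 fits: {fits_384}")
```

Changing the GDDR6 pin rate argument shows how sensitive the conclusion is to which speed grade the density figure assumes.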


> So for a 60Hz display, would you still render at 120fps and have more data for reconstruction?

I believe it's simple motion interpolation. Most modern LED TVs are capable of motion smoothing (e.g., Motionflow, TruMotion) which can simulate higher refresh rates (e.g., 120Hz, 240Hz) on 60Hz panel TVs by injecting prior (or future) frames into the overall picture motion. However, films captured at 24fps or 30fps can look off-putting with motion smoothing engaged, giving them the cheap production feel of a live soap opera rather than a smooth film experience. And since most current generation console titles run at 30fps, they can look quite bad (e.g., ghosting, frame latency, input lag) with most motion smoothing methods. Hence most TV manufacturers offer a "game mode" that disables this feature.

That being said, material filmed at 60fps or games rendering at 60fps can look quite good with motion smoothing on, giving the impression of a smoother experience (or faster framerate). AMD/Sony/MS could have integrated some type of motion interpolation logic similar to LED TVs, or to how Nvidia handles frame reconstruction (or AFR) with motion smoothness in SLI setups, but in a single-GPU fashion geared towards reconstructing multiple frames. With some form of bespoke logic (far beyond Pro's ID buffer) aiding CBR with motion interpolation, rather than relying solely on GPU clocks during frame reconstruction, this 'new logic' could relieve the constant burden on the GPU of keeping consistently high framerates, even with CBR methods applied.

So in theory, this bespoke motion interpolation logic would aid CBR rendering of 2 x 1440p frames @60Hz and give a feel / look beyond 60fps (the impression of 120Hz gaming) without brute forcing such high framerates. Sooooo... when Sony and MS are touting 120fps gaming, I'm pretty sure they're just using the same PR messaging TV manufacturers use when describing their sets as 120Hz/240Hz, when in reality they're 60Hz panels using motion interpolation.


https://semiengineering.com/dram-tradeoffs-speed-vs-energy/

That's an interesting metric: GB/s per mm of die edge.


Hmm, I think you need to take memory clocks into account.

We also have hard figures for die edge for a GDDR6 PHY controller.

On a 360mm2 die you can fit two more controllers on the left side.


> I believe it's simple motion interpolation. Most modern LED TVs are capable of motion smoothing (i.e., Motionflow, TruMotion, etc.) which can simulate higher refresh rates (i.e., 120Hz, 240Hz, etc.) on 60Hz panel TVs.

Are you sure about that? Sounds wrong to me. Motion smoothing of lower-framerate content up to 60 fps is possible, but motion smoothing of 60fps material to a virtual 120 Hz can't be done, and all you can do is add more motion blur to represent movement during the 1/60th second interval.

120 Hz downsampled to 60 Hz would add a small bit of motion blur that'd potentially aid smoothness, although on really fast content you'll just get ghosting. And that's all you can do. You can only present a 1/60th second timeslice on a 60 fps display. Any more temporal information than that will be blur/ghosting, simulating a shutter open for longer. Not worth rendering double the pixels in that case. What you could do though is jitter the sampling and get 2x reconstruction info, so better AA and reconstructed hyper-resolution I guess, approaching 2x supersampling (at its simplest, and better than 2x SSAA with better algorithms).
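The ghosting described here is easy to reproduce on a toy example. The sketch below renders a one-dimensional scanline with a single bright pixel, moves it between two 120 Hz sub-frames, and blends them with equal weights into one 60 Hz frame. The scanline width, start position, and speed of 4 pixels per sub-frame are arbitrary assumptions.

```python
def render(position, width=16):
    """Render a 1-D scanline with a single bright pixel at `position`."""
    return [1.0 if x == position else 0.0 for x in range(width)]


def average(frames):
    """Blend several frames with equal weights (a box filter over time)."""
    count = len(frames)
    return [sum(pixels) / count for pixels in zip(*frames)]


SPEED = 4  # pixels moved per 120 Hz sub-frame (assumed)

sub_frame_a = render(2)
sub_frame_b = render(2 + SPEED)
blended = average([sub_frame_a, sub_frame_b])

# Positions that are lit in the blended 60 Hz frame, and how bright they are.
ghosts = [x for x, value in enumerate(blended) if value > 0]
peak = max(blended)

print("lit pixels:", ghosts)
print("peak intensity:", peak)
```

With fast motion the blend gives two half-intensity copies rather than one sharp object. At a speed of one pixel per sub-frame the copies would sit side by side and read as blur instead.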

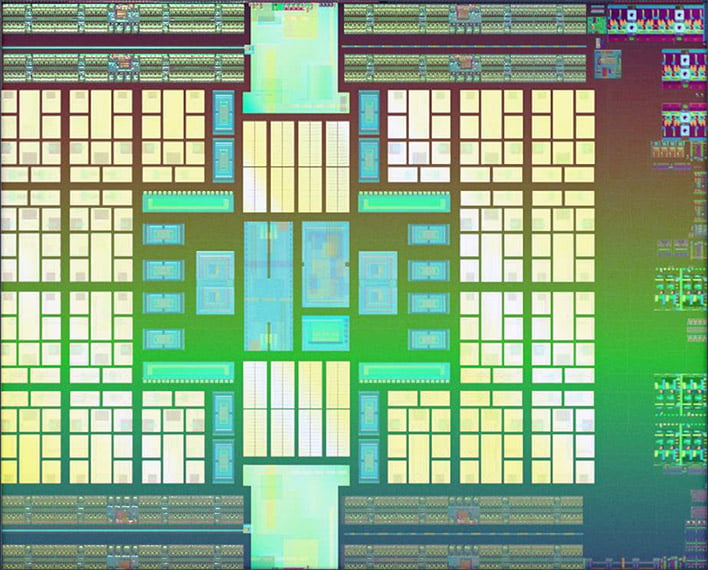

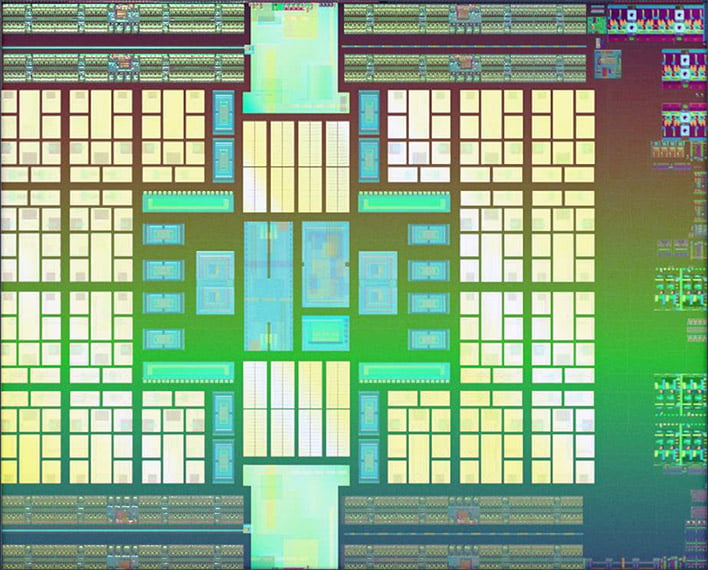

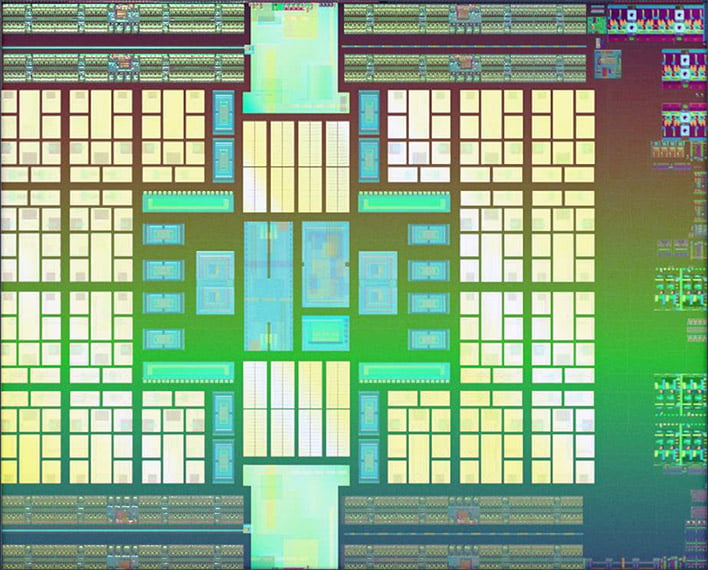

> Hmm, I think you need to take memory clocks into account.
>
> We also have hard figures for die edge for a GDDR6 PHY controller.
>
> On a 360mm2 die you can fit two more controllers on the left side.

Why is there so much black stuff on the right of the die? Is it something that shouldn't be needed in a console?

EDIT: There is already one HDMI controller


Deleted member 13524

Guest

> https://semiengineering.com/dram-tradeoffs-speed-vs-energy/
>
> That's an interesting metric: GB/s per mm of die edge.

Die edge measurements don't say how much total PCB area is needed for each implementation though. GDDR6 is demanding with the trace lengths AFAIK, so the actual PCB area it takes is significantly larger than HBM or even LPDDR.

> Hmm, I think you need to take memory clocks into account.
>
> We also have hard figures for die edge for a GDDR6 PHY controller.
>
> On a 360mm2 die you can fit two more controllers on the left side.

Do we know there is such a direct association between the width of the controllers' circuitry and how they are routed to the edge?

I was assuming 16gbps, but yeah, the 10GB/s per mm figure might have been for 14gbps. So that would be 67mm for 384bit. Their comments were not meant to be exact, since they were talking about HBM giving 6 or 10 times more.


> Are you sure about that? Sounds wrong to me. Motion smoothing of lower-framerate content up to 60 fps is possible, but motion smoothing of 60fps material to a virtual 120 Hz can't be done, and all you can do is add more motion blur to represent movement during the 1/60th second interval.

IIRC, the motion interpolation methods used in LED TVs take whatever the captured framerate is (e.g., 24, 30, 60) and add prior or future frames to match the 120Hz or 240Hz output. Although 60fps capture already matches the TV's native refresh rate of 60Hz, it can still benefit from prior frames (more so) being introduced. As long as the TV can support 120 frames (120Hz) or 240 frames (240Hz) through its motion interpolation logic, the original film/video capture will receive the same treatment (as long as it's under 120fps or 240fps).

On PS4 and Pro they use the bus to the southbridge to communicate with the ARM + DDR3 memory in order to offload work from the Jaguar. The problem is that this bus is slow and has high latency, so only limited work can be done that way.

On PS5, could they use the PCI-e 4.0 bus to the rumored DDR4 memory pool and make it fully usable by the CPU for the OS? That could be a way to dedicate the precious GDDR6 memory only to the game.


Xbox One X runs Overwatch already at 60 fps at 4K.... 4x faster GPU + 8 core Zen 2 would easily achieve 120 fps...

Hmmm has something just slipped ?
