Alucardx23

Regular

I want to dedicate this thread to how machine learning will help to greatly improve image quality at a relatively low performance cost. We can start with Google's RAISR. Here are some claims from Google:

-High Bandwidth savings

"By using RAISR to display some of the large images on Google+, we’ve been able to use up to 75 percent less bandwidth per image we’ve applied it to."

-So fast it can run on a typical mobile device

"RAISR produces results that are comparable to or better than the currently available super-resolution methods, and does so roughly 10 to 100 times faster, allowing it to be run on a typical mobile device in real-time."

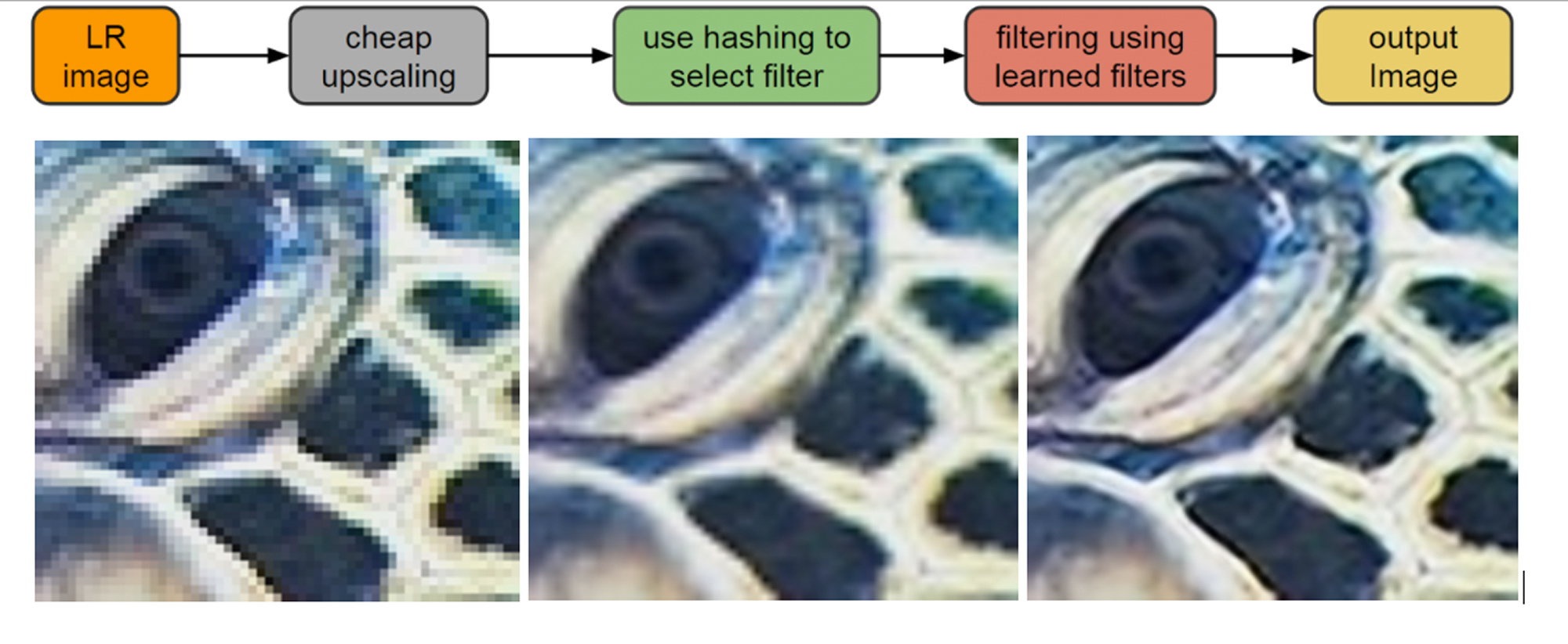

-How it works

"With RAISR, we instead use machine learning and train on pairs of images, one low quality, one high, to find filters that, when applied to selectively to each pixel of the low-res image, will recreate details that are of comparable quality to the original. RAISR can be trained in two ways. The first is the "direct" method, where filters are learned directly from low and high-resolution image pairs. The other method involves first applying a computationally cheap upsampler to the low resolution image and then learning the filters from the upsampled and high resolution image pairs. While the direct method is computationally faster, the 2nd method allows for non-integer scale factors and better leveraging of hardware-based upsampling.

For either method, RAISR filters are trained according to edge features found in small patches of images, - brightness/color gradients, flat/textured regions, etc. - characterized by direction (the angle of an edge), strength (sharp edges have a greater strength) and coherence (a measure of how directional the edge is). Below is a set of RAISR filters, learned from a database of 10,000 high and low resolution image pairs (where the low-res images were first upsampled). The training process takes about an hour."
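To make the "direction, strength and coherence" part a bit more concrete, here is a minimal sketch of how those three numbers could be computed for one small grayscale patch. This is my own illustration and not Google's code: the function name `patch_edge_features` is hypothetical, and I am assuming the features come from the eigen-decomposition of the patch's gradient structure tensor. Note that the angle here is the dominant gradient direction, so the visible edge runs perpendicular to it.

```python
import numpy as np

def patch_edge_features(patch):
    """Return (angle, strength, coherence) for a 2-D grayscale patch.

    Hypothetical helper for illustration; not taken from the RAISR source.
    """
    # Brightness gradients along rows (gy) and columns (gx).
    gy, gx = np.gradient(patch.astype(np.float64))

    # 2x2 structure tensor, summed over the whole patch.
    gxx = np.sum(gx * gx)
    gxy = np.sum(gx * gy)
    gyy = np.sum(gy * gy)
    tensor = np.array([[gxx, gxy], [gxy, gyy]])

    # eigh returns eigenvalues in ascending order for symmetric matrices.
    eigvals, eigvecs = np.linalg.eigh(tensor)
    lam_small = max(eigvals[0], 0.0)  # clamp tiny negative rounding errors
    lam_large = max(eigvals[1], 0.0)

    # Direction: angle of the dominant gradient, folded into [0, pi).
    vx, vy = eigvecs[:, 1]
    angle = np.arctan2(vy, vx) % np.pi

    # Strength: larger for sharper edges.
    s1, s2 = np.sqrt(lam_large), np.sqrt(lam_small)
    strength = s1

    # Coherence: 1 means one clear direction, 0 means none (flat or noisy).
    coherence = (s1 - s2) / (s1 + s2) if (s1 + s2) > 0 else 0.0
    return angle, strength, coherence

# A patch with a single vertical edge: dark on the left, bright on the right.
edge_patch = np.zeros((8, 8))
edge_patch[:, 4:] = 1.0
print(patch_edge_features(edge_patch))
```

In a full pipeline these three values would be quantized into buckets, and each bucket would get its own learned filter. The bucket counts and thresholds are tuning choices that the quoted text does not specify.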

Comments:

We are talking about a machine learning system that learns the best way to upscale images, based on a database of thousands of image pairs compared at different resolutions. As an example, say developer X is targeting 1080p/60fps on the PS4 hardware. In theory you could let the system compare a bunch of images from your game, rendered at 720p vs. 1080p, for hours or days, and it would get better at finding the best custom upscaling method to simulate a 1080p image from a 720p framebuffer.
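Here is a rough sketch of what "learning a custom upscaling filter from image pairs" could look like in its simplest form. To be clear about the assumptions: this is not RAISR itself. It learns a single 5x5 filter by ordinary least squares instead of one filter per edge-feature bucket, it uses a 2x scale factor with a nearest-neighbour upsampler for simplicity (720p to 1080p would be 1.5x), and the "game frame" is a synthetic pattern. The function names are mine.

```python
import numpy as np

def extract_patches(img, size):
    """Flatten every size x size patch (stride 1) into one row."""
    h, w = img.shape
    return np.array([img[r:r + size, c:c + size].ravel()
                     for r in range(h - size + 1)
                     for c in range(w - size + 1)])

def learn_filter(upsampled, high_res, size=5):
    """Least-squares filter mapping upsampled patches to high-res pixels."""
    half = size // 2
    h, w = high_res.shape
    A = extract_patches(upsampled, size)
    # Target: the high-res pixel at the center of each patch.
    b = high_res[half:h - half, half:w - half].ravel()
    filt, *_ = np.linalg.lstsq(A, b, rcond=None)
    return filt.reshape(size, size)

def apply_filter(upsampled, filt):
    """Apply the learned filter to interior pixels; borders stay as-is."""
    size = filt.shape[0]
    half = size // 2
    h, w = upsampled.shape
    out = upsampled.copy()
    vals = extract_patches(upsampled, size) @ filt.ravel()
    out[half:h - half, half:w - half] = vals.reshape(h - size + 1,
                                                     w - size + 1)
    return out

# Synthetic stand-in for a high-res frame (assumption, not real game data).
yy, xx = np.mgrid[0:32, 0:32]
high_res = np.sin(xx / 3.0) + np.cos(yy / 5.0) + 0.1 * ((xx + yy) % 7)

# Simulate the low-res render by averaging 2x2 blocks, then upsample cheaply.
low_res = high_res.reshape(16, 2, 16, 2).mean(axis=(1, 3))
upsampled = np.kron(low_res, np.ones((2, 2)))

filt = learn_filter(upsampled, high_res)
restored = apply_filter(upsampled, filt)

mse_plain = np.mean((upsampled - high_res) ** 2)
mse_filtered = np.mean((restored - high_res) ** 2)
print("plain upsample MSE:", mse_plain)
print("learned filter MSE:", mse_filtered)
```

On the training image, the learned filter can never do worse than the plain upsample, because "do nothing" is one of the filters least squares could have picked. How well it holds up on frames it has not seen is the real question, and that is where a large, game-specific training set would matter.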

I have seen several examples of AA methods that work wonders on one game but don't work as well on others, since a lot depends on the game's aesthetics. With this method, every game could have its own custom AA filters that no "one size fits all" AA technique can compete with at the same performance level.

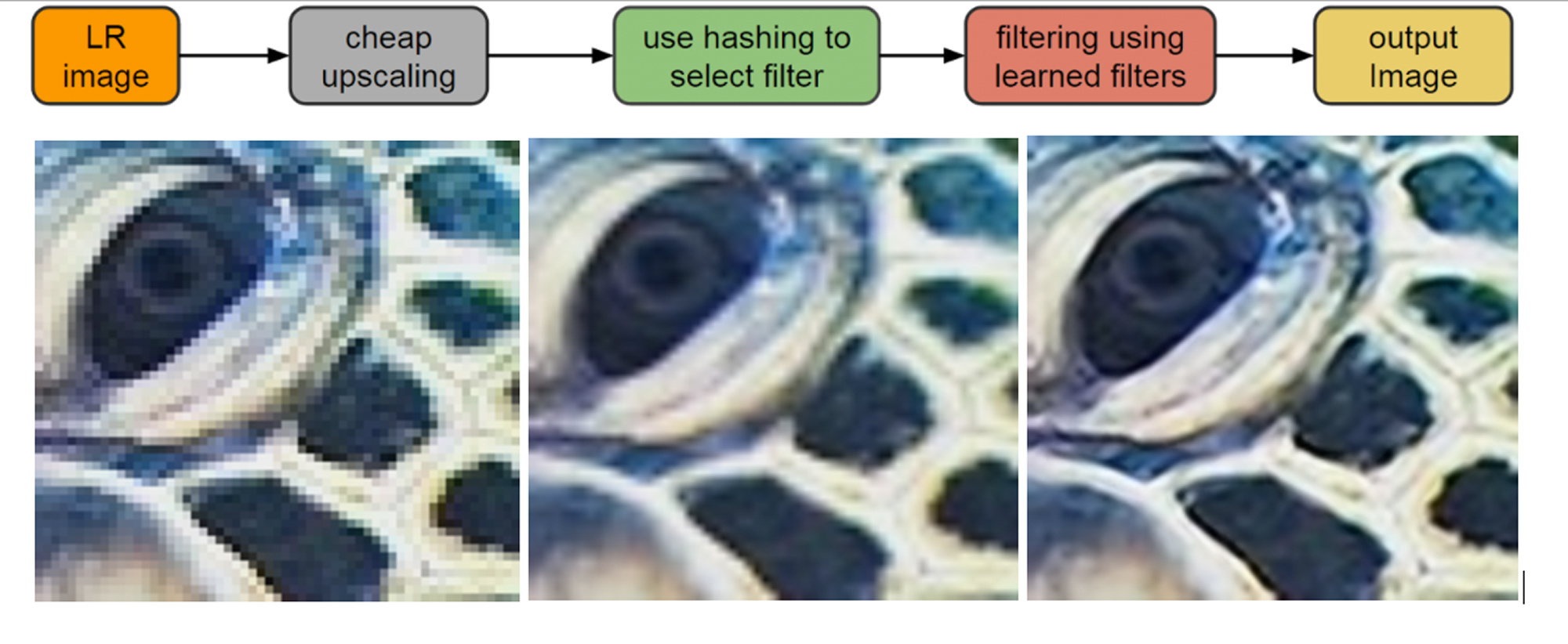

RAISR Upscaling examples:

Source material:

Saving you bandwidth through machine learning

https://blog.google/products/google-plus/saving-you-bandwidth-through-machine-learning/

Enhance! RAISR Sharp Images with Machine Learning

https://research.googleblog.com/2016/11/enhance-raisr-sharp-images-with-machine.html
