DegustatoR

Intel launches Arc Pro GPUs that are designed for workstations and pro apps

These Arc Pro GPUs are tuned for creator and pro apps.

www.theverge.com

The byline to the headline of that article cracks me up:

"Intel is ready to take on the pro GPU market"

No, no they are not.

> Maybe that's what all the engineers have been working on?

And maybe they'll get all the bugs out of their drivers/cards before the A750 release, but I tend to doubt it.

I wanted Intel to do well with their GPU, I really did. I think I bought into the hype early because of the team they were putting together and all of the people I knew on it. I should have seen this coming over a year ago and didn't, which has me angry with myself for falling for the hype, because this feels EXACTLY like the Vega launch did to me, and I'm seeing tons of similarities in the way they're rolling it out.

I don't want Intel to fail at GPUs; I want a third competitor. I just don't think Arc is going to do it, and I don't think Intel is gonna put up with the shenanigans they pulled launching it; I think they will nix the Battleaxe series.

And I include XeSS with that.If they can get their drivers over the LOL hump.

Please no, Intel sunk lotta money into other useless vanity projects beforehand.I just hope intel keeps up with it.

Navi thirty-three says hello!they could at least be more competitive in the lower end

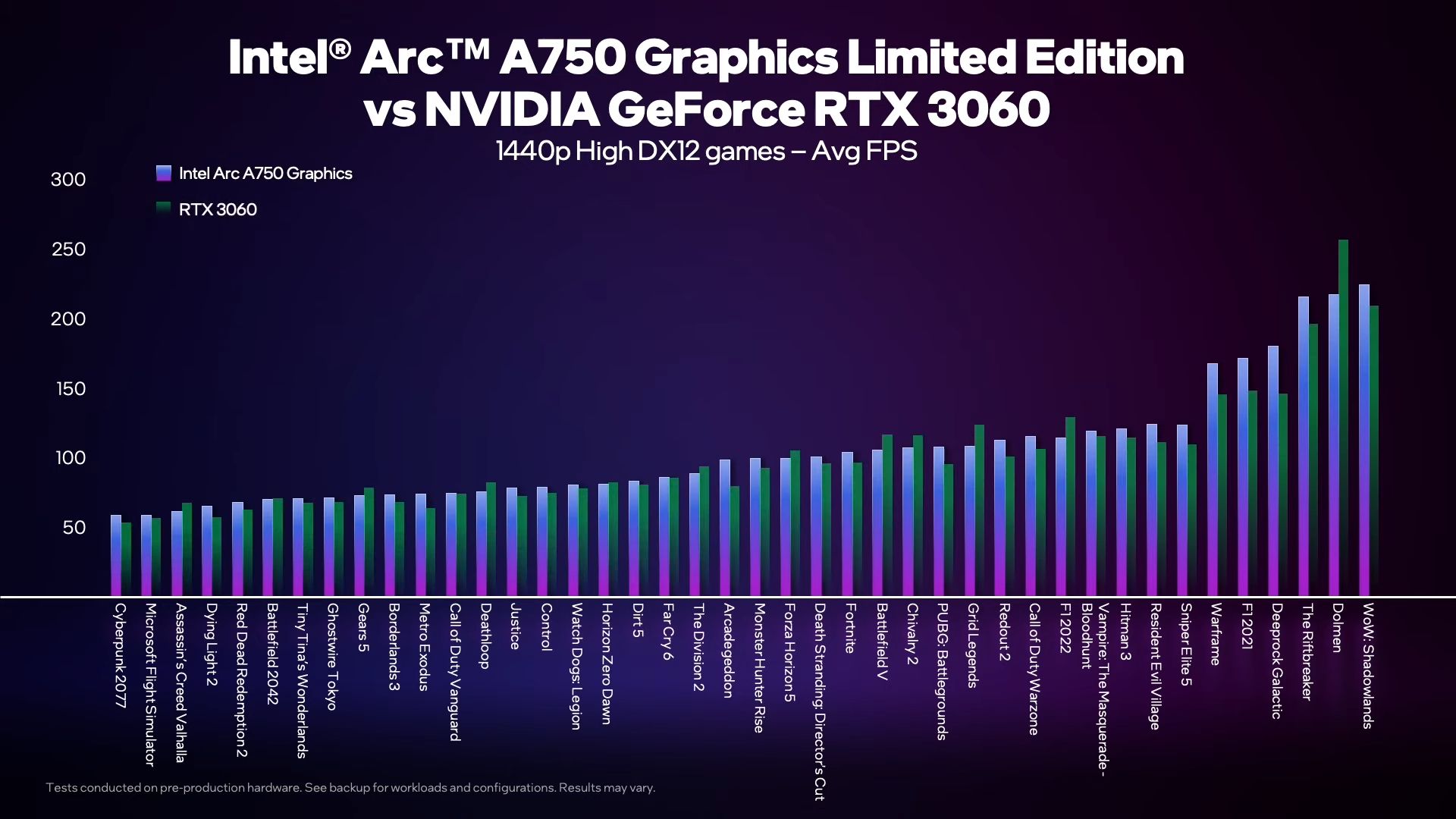

Only in select DX12 tites, the rest is horrendously bad.I mean it looks competitive with the 3060

Select? over 40 games is quite a bit more than just "select titles" and it included several games which haven't been properly optimized (according to Intel) yet.Only in select DX12 tites, the rest is horrendously bad.

Also you don't evaluate this product based on performance alone, as many outlets already discovered, frame pacing in games is bad, visual bugs and artifacts run rampant, games refuse to even launch, not to mention the myraids of game breaking bugs. RT performance is also not mentioned by Intel, implying a problem there as well.

If they can get their drivers over the LOL hump.

Man, Intel fucked up way harder than I thought they would before diving into the trenches near Izyum.

This is worse than Vega.

Please no, Intel sunk lotta money into other useless vanity projects beforehand.

We need good Xeons, not shit GPUs.

Navi thirty-three says hello!

Low-end is all about having the best raw PPA possible, and Intel has the worst (156mm^2 G11 versus 203mm^2 N33 hahahaha holy shit same node too) out there.

Only in select DX12 tites, the rest is horrendously bad.

Also you don't evaluate this product based on performance alone, as many outlets already discovered, frame pacing in games is bad, visual bugs and artifacts run rampant, games refuse to even launch, not to mention the myraids of game breaking bugs. RT performance is also not mentioned by Intel, implying a problem there as well.

They specifically talked about how they mainly optimized the most important 100 games (popular, used by reviewers for benchmarking, etc.). So yes, 40 DX12 games are a drop in the ocean, and they ARE cherry-picked, so "select" is the right word.

> RT performance has been benchmarked already,

RT performance has never been shown in any Intel marketing material, whether for low end or otherwise. Why do you think so?

> Vega had a lot of issues, RDNA fixed a bunch, and then RDNA 2 got them back into the race with feature parity and performance parity, at least in traditional rasterization, only falling behind in ray tracing.

AMD had to do a complete mgmt overhaul at Radeon, and they brought some Zen people in to get where they are now, and where they'll be by EOY.

> I rather have 3 players than 2 players is my personal opinion.

Obviously, but Intel has a huge bowl of problems to solve and they're all kinda more important than being a 3rd GPU player.

Zen people did pretty much nothing except custom-fit the caches, to my understanding.

They did a whole lot of methodology work and cultural brainwashing (i.e. the same stuff Intel needs to do to get everything back on track).