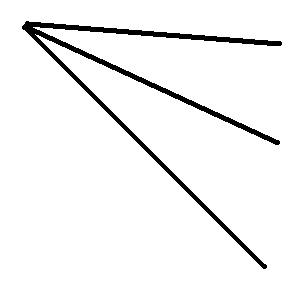

I just uploaded a demo of GPAA (Geometric Post-process Anti-Aliasing), a post-processing antialiasing technique that uses the actual geometric information to blend pixels with their neighbors along geometric edges. The best case is near-horizontal or near-vertical edges, and the worst case is diagonal lines, although those are handled pretty well too.
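To make the blending idea concrete, here is a minimal CPU sketch in Python. It is not the demo's shader, just an illustration under stated assumptions: the image is grayscale and stored as a list of rows, edges are given as endpoints in pixel coordinates with pixel centers at `i + 0.5`, and `gpaa_blend_edge` is a hypothetical helper. For each pixel the edge passes through, it measures the distance from the pixel center to the edge along the minor axis, treats `0.5 - |distance|` as the fraction of the pixel lying on the far side of the edge, and blends that much of the neighbor across the edge into the pixel.

```python
import math


def gpaa_blend_edge(src, x0, y0, x1, y1):
    """Hypothetical sketch: blend pixels along the edge (x0, y0)-(x1, y1).

    `src` is a grayscale image (list of rows). Reads only from `src` and
    returns a modified copy, so blended pixels never feed into each other.
    """
    h, w = len(src), len(src[0])
    out = [row[:] for row in src]
    mostly_horizontal = abs(x1 - x0) >= abs(y1 - y0)
    if not mostly_horizontal:
        # Swap x and y so the loop below always walks along the major axis.
        x0, y0, x1, y1 = y0, x0, y1, x1
    if x0 == x1:
        return out  # degenerate edge, nothing to blend
    if x0 > x1:
        x0, y0, x1, y1 = x1, y1, x0, y0
    slope = (y1 - y0) / (x1 - x0)
    major_size = w if mostly_horizontal else h
    minor_size = h if mostly_horizontal else w

    for a in range(major_size):
        center_a = a + 0.5
        if center_a < x0 or center_a > x1:
            continue  # pixel center is outside the edge's extent
        line_b = y0 + (center_a - x0) * slope  # edge position on the minor axis
        b = math.floor(line_b)  # pixel whose center is nearest the edge
        if not 0 <= b < minor_size:
            continue
        diff = line_b - (b + 0.5)  # signed distance: pixel center -> edge
        coverage = 0.5 - abs(diff)  # fraction of the pixel beyond the edge
        nb = b + 1 if diff >= 0 else b - 1  # neighbor across the edge
        nb = min(max(nb, 0), minor_size - 1)  # clamp at the image border
        if mostly_horizontal:
            out[b][a] = (1 - coverage) * src[b][a] + coverage * src[nb][a]
        else:
            out[a][b] = (1 - coverage) * src[a][b] + coverage * src[a][nb]
    return out


# A horizontal edge at y = 2.25: row 2 is 25% covered by the dark surface above.
img = [[0.0] * 4, [0.0] * 4, [1.0] * 4, [1.0] * 4]
print(gpaa_blend_edge(img, 0.0, 2.25, 4.0, 2.25)[2])  # [0.75, 0.75, 0.75, 0.75]
```

In this sketch the distance along the minor axis is exactly the coverage when the edge is horizontal or vertical, which matches the best case described above. For a diagonal edge, the edge crosses the pixel at an angle and the true coverage is no longer a single one-axis measurement, so blending with one neighbor becomes an approximation.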

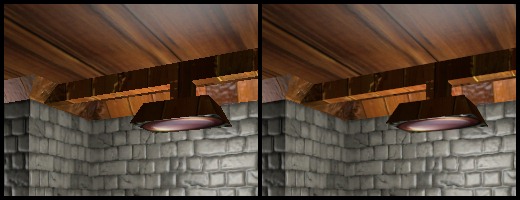

Comparison with and without GPAA:

Downloadable from my website.

Toggle GPAA with F5.

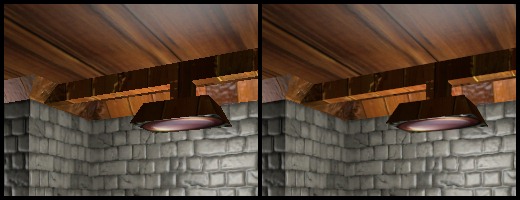