GCN is all 3: MIMD, SIMD, & SMT

...

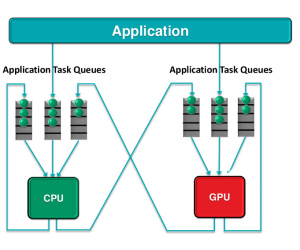

As I said, the claim for MIMD on AMD GCN is based on running multiple programs on the same GPU. The hardware gets partitioned to reserve resources for each program, effectively creating two virtual GPUs, hence a MIMD execution model. That's how I understand it. A single program (game) dispatching compute jobs to the ACEs is not MIMD. The execution model for a typical game on any GCN hardware is what they call SMT or SIMT (I'm not totally clear on the difference between the two).
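To illustrate what "single program" means at the lowest level, here is a toy Python model (not real GCN code; `simt_if_else` and its parameters are made-up names) of how one 64-lane wavefront handles an if/else. All lanes share one instruction stream, so a divergent branch issues both sides in turn, and an execution mask decides which lanes keep each result. That is the opposite of MIMD, where independent programs run their own instruction streams.

```python
WAVE_SIZE = 64  # lanes per GCN wavefront


def simt_if_else(values, cond, then_op, else_op):
    """Toy model of an if/else on one wavefront, SIMT style.

    Both sides share one instruction stream: each side is issued in turn,
    and an execution mask picks which lanes keep that side's result.
    A side is skipped when no lane needs it (a simplification; whether
    a real compiler emits that skip depends on the code).
    Returns (per-lane results, number of sides issued).
    """
    assert len(values) == WAVE_SIZE
    exec_mask = [cond(v) for v in values]  # every lane evaluates the condition
    out = list(values)
    issued = 0
    if any(exec_mask):  # 'then' side, masked to lanes where cond is true
        out = [then_op(v) if m else o for v, m, o in zip(values, exec_mask, out)]
        issued += 1
    inverted = [not m for m in exec_mask]
    if any(inverted):  # 'else' side, masked to the remaining lanes
        out = [else_op(v) if m else o for v, m, o in zip(values, inverted, out)]
        issued += 1
    return out, issued
```

With half the lanes negative, both sides get issued (`issued == 2`), so the wavefront pays for both paths. With uniform input only one side runs. Either way it is still one program.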

I'm not using it for the ACEs; I'm using it because the PS4 APU can run 8 compute pipelines. I'd never seen those AMD slides before.

There is nothing different about the ACEs on PS4. It is the same compute model as any other GCN GPU. At the time it came out, it had more ACEs than Xbox One. I'm not sure if PC parts had 8 ACEs at the time, but they do now.
