http://gametomorrow.com/blog/index.php/2006/06/19/ray-tracing-receiving-new-focus/

If I remember correctly, Philipp Slusallek is working on a real-time ray-tracing renderer for Cell with IBM's help, but I'm not sure. He previously worked on the SaarCOR FPGA. I hope Barry Minor presents some work on a ray-tracing renderer for Cell.

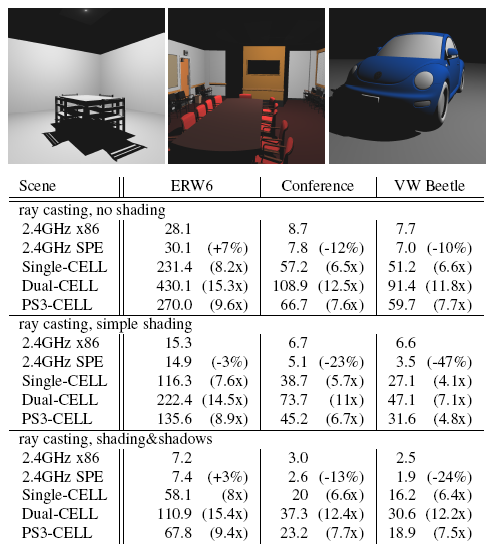

Ray-tracing has always been the algorithm of choice for photorealistic rendering. Simple and mathematically elegant, ray-tracing has long generated interest in the software community, but its computationally intensive nature has limited its success in the interactive/real-time gaming world. However, while the rendering time of traditional polygon rasterization techniques scales linearly with scene complexity, ray-tracing scales logarithmically. This is becoming increasingly important as gamers demand larger, more complex virtual worlds. Ray-tracing also scales very well on today's multi-core "scale-out" processors like Cell. It falls into the category of "embarrassingly parallel" problems and therefore scales linearly with the number of compute elements.
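A rough back-of-the-envelope sketch of the logarithmic-scaling claim follows. The claim only holds when rays are traced through a hierarchical acceleration structure (a BVH or kd-tree); without one, every ray is tested against every primitive. The sketch assumes an idealized, balanced binary BVH with one primitive per leaf, and the helpers `brute_force_tests` and `bvh_traversal_depth` are hypothetical names for illustration only. Real traversal visits more nodes than a single root-to-leaf path, and constants matter a lot in practice.

```python
import math


def brute_force_tests(num_primitives: int) -> int:
    # Hypothetical helper: with no acceleration structure, one ray is
    # intersection-tested against every primitive, so cost grows linearly.
    return num_primitives


def bvh_traversal_depth(num_primitives: int) -> int:
    # Hypothetical helper: in an idealized balanced binary BVH with one
    # primitive per leaf, a root-to-leaf path has ceil(log2(N)) levels.
    # This ignores overlapping boxes and multi-leaf visits.
    if num_primitives < 1:
        raise ValueError("need at least one primitive")
    return math.ceil(math.log2(num_primitives))


if __name__ == "__main__":
    for n in (1_000, 1_000_000, 1_000_000_000):
        print(f"{n:>13,} primitives: brute force {brute_force_tests(n):>13,} tests, "
              f"balanced BVH depth {bvh_traversal_depth(n)}")
```

Going from a thousand to a billion primitives multiplies the brute-force work per ray by a million, while the idealized tree depth only grows from 10 to 30. Because each ray (or pixel) is computed independently, that per-ray work can also be split across compute elements such as Cell's SPEs with little coordination, which is what "embarrassingly parallel" refers to.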

http://www.sci.utah.edu/RT06/

We plan to participate as we feel this topic is very important to the future of gaming and graphics in general.
