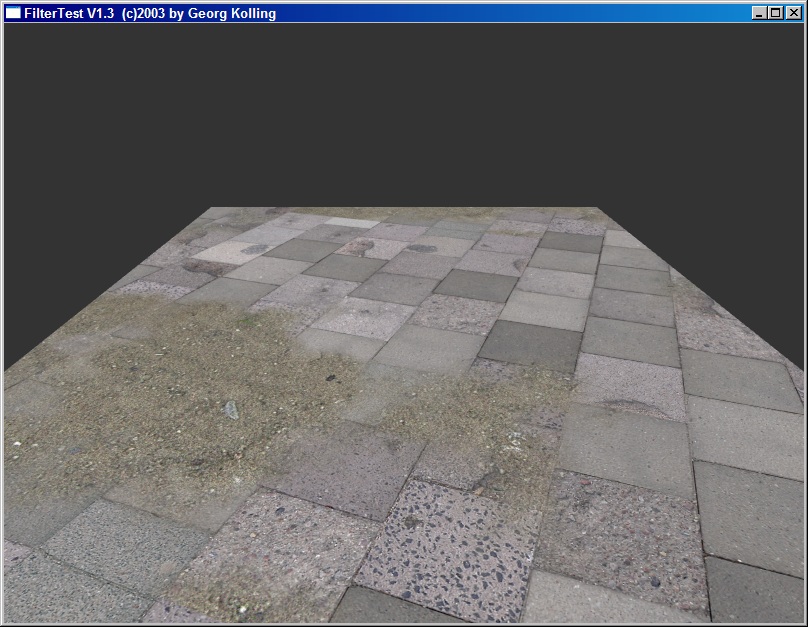

aths said:
> Matt B said:
>> Interesting work, just thought I'd say that you have some serious scale inconsistencies on your dirtmap version.
>
> What is that supposed to mean? The dirtmap shows sand. Since there is no infinite resolution, it's hard to show every single grain.

Sorry, it's not an important point. It's just that the sand texture looks at least twice as large as it should be. The rocks and pebbles on the sand are much bigger than the rocks and pebbles on the pavement, and the sand also looks much blurrier than the pavement. Simply scaling down the sand texture would fix this.