The common thread among developers is that DX12/Vulkan is too much work.

Stardock was one of the very first developers (along with DICE) to champion DX12/Vulkan. With their game Ashes of the Singularity, they pushed it so hard that they seemed to have staked the future of the entire company on DX12. And after that? Nothing. None of their subsequent games shipped with DX12. They made 4 games after Ashes, and all of them relied primarily on DX11.

They made an official statement about this. Guess what their reasons were?

DX12/Vulkan triples your QA testing. Too many things can easily go wrong, causing instant artifacts and instant crashes, and this translated directly into a hugely inflated QA budget for DX12/Vulkan. They simply ran out of budget just investigating the crashes.

Putting aside the theoretical power of Vulkan and DirectX 12 when compared to DirectX 11, how do they do in practice? The answer, not surprisingly, is: it depends.

www.gamedeveloper.com

Nowadays, after DXR, developers have to code for two separate paths: a raster one with no ray tracing and a ray tracing one, sometimes even a path tracing one. They also have to support several upscaling techniques, both internal solutions (UE's TSR, checkerboarding, dynamic resolution, Ubisoft's temporal upscalers, Insomniac's IGTI, etc.) and external ones such as FSR2, DLSS, XeSS, DLAA, DLSS3, or all of them combined.

Supporting all of that with DX12 on two separate render paths is simply too much work, and all of these features lead to a ballooning budget. Again, developers on PC simply lack the time, budget, and knowledge to do all of that. That's the reality that was overlooked when these APIs were developed, as Khronos admitted several times in their latest blog post.
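To put rough numbers on why this balloons: every render path has to be tested with every upscaler, so the QA matrix grows multiplicatively, not additively. Below is a minimal sketch of that counting, assuming hypothetical axis lists loosely based on the paragraph above (TSR stands in for "some internal upscaler", and the quality presets are made up). It is not any studio's real test plan and ignores which combinations a given engine actually supports.

```python
from itertools import product
from math import prod

# Hypothetical feature axes loosely based on the post; not a real test plan.
RENDER_PATHS = ["raster", "ray tracing", "path tracing"]
UPSCALERS = ["native", "TSR", "FSR2", "DLSS", "XeSS", "DLAA", "DLSS3"]


def test_matrix(*axes):
    """Every combination of one option per axis, i.e. one QA configuration each."""
    return list(product(*axes))


def matrix_size(*axes):
    """Size of the matrix without building it: the product of the axis lengths."""
    return prod(len(axis) for axis in axes)


if __name__ == "__main__":
    configs = test_matrix(RENDER_PATHS, UPSCALERS)
    print(len(configs))  # 3 * 7 = 21
    # One more independent axis (two hypothetical quality presets) doubles it.
    print(matrix_size(RENDER_PATHS, UPSCALERS, ["high", "ultra"]))  # 42
```

Each new axis (another upscaler, another render path, another preset) multiplies the total, which is the "ballooning budget" in a nutshell.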

In my field, this is called the disconnect between pharmacologists and clinicians. Pharmacologists develop and test the drug, and after the trials they deem it safe for worldwide public use. A few years later, clinicians in the field scream out loud that the drug has too many side effects to be useful, is cumbersome to use, and is expensive compared to older drugs. Yet instead of listening, pharmacologists ignore all of that and insist that they developed a "better" drug, leading either to suffering across the board or to clinicians abandoning the drug (like Stardock abandoned DX12). I find this an extremely fitting analogy for the current API situation.

Another Ubisoft developer advised against going into DX12 expecting performance gains, since, according to them, achieving performance parity with DX11 is hard and resource-intensive.

Tiago Rodrigues, 3D programmer at Ubisoft Montreal, hosted a talk on the Advanced Graphics Tech card at GDC last week, covering the company’s experiences porting over their AnvilNext engine to DirectX 12. The Advanced Graphics Tech prefix alone should have been the first hint that much of the low-

www.pcgamesn.com

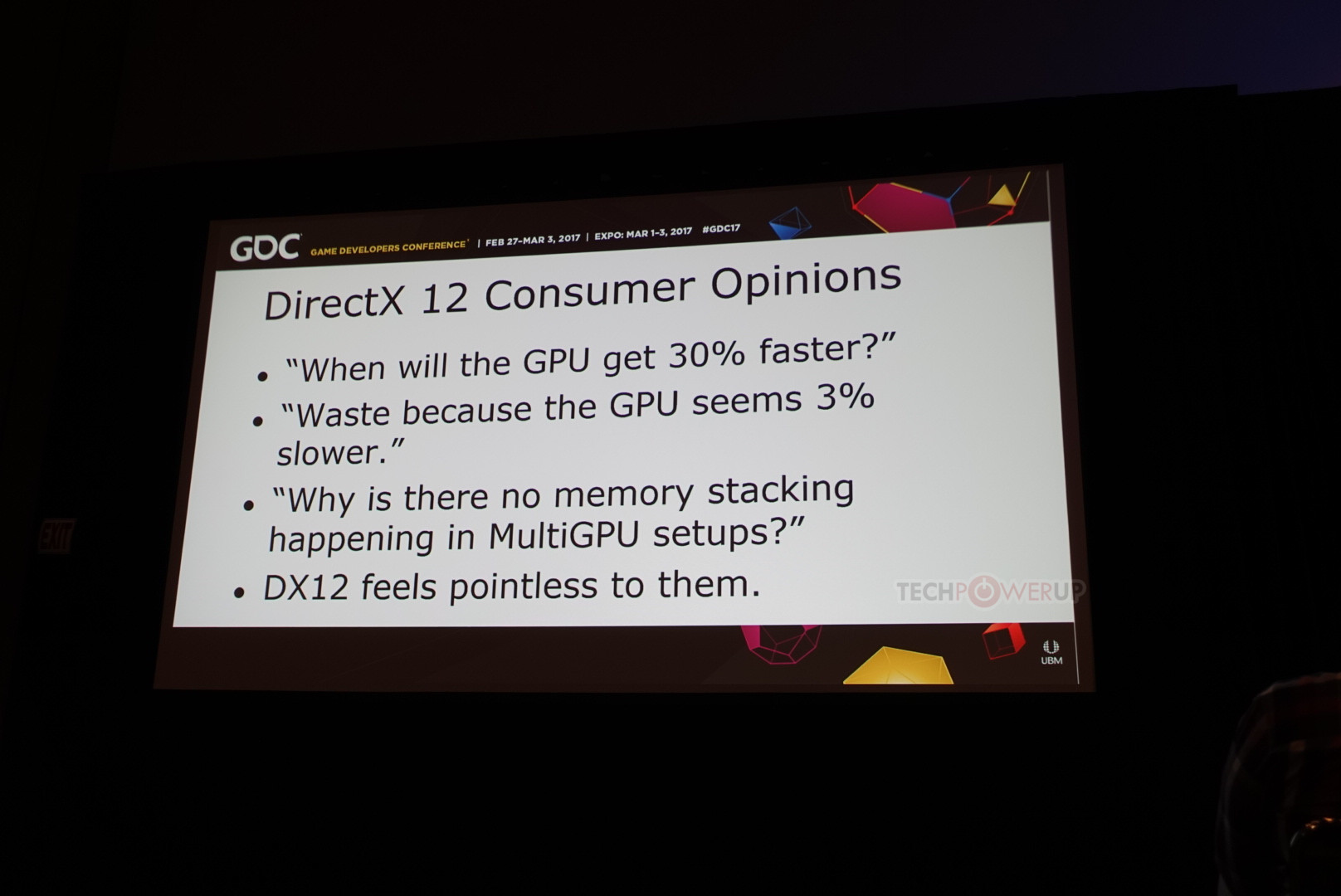

Another veteran developer (Nixxes) states that developing for DX12 is hard and may or may not be worth it depending on your point of view: the gains from DX12 can easily be masked on high-end CPUs running maxed-out settings, and you end up with nothing. They also call async compute inconsistent and too hardware-specific, which makes it cumbersome to use. They also state that the driver still very much matters under DX12, and with some drivers complexity goes up! VRAM management is also a pain in the ass.

We are at the 2017 Game Developers Conference, and were invited to one of the many enlightening tech sessions, titled "Is DirectX 12 Worth it," by Jurjen Katsman, CEO of Nixxes, a company credited with several successful PC ports of console games (Rise of the Tomb Raider, Deus Ex Mankind...

www.techpowerup.com