No.

"You don't alt-tab while loading, do you? That happened to me when I alt-tabbed while the game was loading."


Current Generation Games Analysis Technical Discussion [2023] [XBSX|S, PS5, PC]

- Thread starter BRiT

- Status

- Not open for further replies.

Okay, so this is just a general observation. If someone has connections to folks at Unreal/Windows, maybe they could report it.

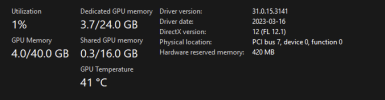

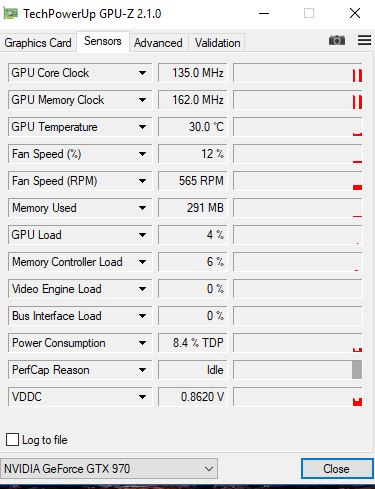

I think most UE games, and some other games, are simply bugged when it comes to VRAM consumption, or else I'm interpreting things wrong.

So here's the problem. With zero or minimal bloat in the background, Jedi Survivor will use at most 6.2-6.4 GB of dedicated VRAM for the game itself, with a total allocation of 7.3-7.4 GB. Okay, fine. Let's agree the game does that so background applications have some free VRAM to use (a whopping 800-900 MB of VRAM left unused). At least that's what you would LOGICALLY assume: the game only reserves a percentage of VRAM so other apps can have their way.

Let's assume that, then, and open some stuff that uses "500-600 MB" worth of VRAM, so I can use my GPU for multitasking. After all, the game refuses to use the free VRAM, so I might as well use that FREE VRAM for other apps, right?

And what happens? Oh my god, the game's VRAM usage drops by 500 MB once you open 500 MB worth of VRAM-using applications, and the total maximum system allocation is still around 7.3-7.4 GB. It ALMOST feels like the game or the system "thinks" your card has a total of 7.2 GB of VRAM and ADJUSTS its usage accordingly. So... why? This effectively makes the remaining free VRAM useless for both the system and the game.

I just feel like there's a huge miscommunication between VRAM management, game developers, game engines and Windows. There really is no reason for a noticeable amount of resources to go unused. Games like The Last of Us, Cyberpunk and Halo Infinite can use VRAM to its full capacity, letting you push graphics to the edge if you keep background usage minimal.

With UE4 games like Hogwarts Legacy and Jedi Survivor, you still need minimal background usage JUST so the game can use 7 GB, yet the system as a whole also refuses to use the remaining free VRAM. So what gives?

Notice how the game now uses an extra 500 MB of VRAM when no other applications (Chrome + Discord + GeForce Experience) are open?

The system as a whole simply refuses to use the remaining free VRAM. Even though the game tops out at 7.2 GB of VRAM, every application you open eats from that 7.2 GB budget instead of the full 8 GB you have.

Maybe someone can explain it. Maybe there is indeed something buggy. I really had to get this off my chest because I hate seeing this happen. I literally have to go HERMIT mode if I want the game to use 6.3-6.4 GB out of a total of 8 GB. And then, if I want to use the "free" VRAM for OTHER things, the game drops to 5.7-5.8 GB, AS IF my total VRAM budget were 7.2 GB and not 8 GB.

Some games show the opposite behaviour, where they use all the VRAM they can find. But in this case the problem is even worse: even if you want to use the free VRAM for other things, you somehow still end up with REDUCED VRAM for the game itself.

Edit:

Here's a better visualization of the issue.

We really need a program to manage VRAM smartly. This is just weird behaviour.

In short: the system has 800-900 MB of free VRAM.

The game uses 6.2 GB of VRAM.

I open Chrome, which eats 600 MB of VRAM.

Instead of Chrome using the free VRAM, the game reduces its own VRAM usage to 5.6 GB.
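To make the complaint concrete, here is a toy model in Python using the numbers from the post (treating 1 GB as 1000 MB). The "fixed ceiling" is the poster's hypothesis about what the game is doing, not Unreal Engine's actual budgeting code, and every constant and function name is made up for illustration. It contrasts what the poster observed with what they expected.

```python
from dataclasses import dataclass

# Figures from the post, in MB (1 GB treated as 1000 MB for readability).
CARD_MB = 8000              # 8 GB RTX 3070
CEILING_MB = 7200           # hypothesised fixed total-allocation ceiling
GAME_TARGET_MB = 6200       # game usage with minimal background load
BASELINE_OTHERS_MB = 1000   # compositor + background apps (7.2 - 6.2 GB)
CHROME_MB = 600


@dataclass
class Snapshot:
    game_mb: int    # what the game ends up using
    others_mb: int  # compositor + other applications
    free_mb: int    # VRAM nobody touches


def observed_behaviour(others_mb: int) -> Snapshot:
    """Hypothesis: the game fills whatever is left under a fixed ceiling,
    so other apps shrink the game's share instead of the free headroom."""
    game = max(CEILING_MB - others_mb, 0)
    return Snapshot(game, others_mb, CARD_MB - game - others_mb)


def expected_behaviour(others_mb: int) -> Snapshot:
    """What the poster expected: other apps eat the free headroom first,
    and the game only shrinks once the card is actually full."""
    game = max(min(GAME_TARGET_MB, CARD_MB - others_mb), 0)
    return Snapshot(game, others_mb, CARD_MB - game - others_mb)


if __name__ == "__main__":
    for label, others in [("minimal background", BASELINE_OTHERS_MB),
                          ("+ Chrome", BASELINE_OTHERS_MB + CHROME_MB)]:
        print(label, observed_behaviour(others), expected_behaviour(others))
```

Under the ceiling model, opening Chrome moves 600 MB from the game to Chrome while the 800 MB of headroom never shrinks; under the expected model, Chrome eats the headroom and the game keeps its 6.2 GB.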


That's because you're thinking about the OS/hardware-reserved VRAM as a pool of memory to be used, which is wrong. You should think of it more as emergency savings: under normal circumstances, that VRAM will not be used. As an example, my 4090 reserves 420 MB of VRAM. That being said, I don't know why people are fighting so hard to salvage 8 GB of VRAM when they should just upgrade their GPU. 8 GB is dead, just let it rest in peace.


Silent_Buddha

Legend


Keep in mind that Windows itself uses some VRAM for graphics compositing (desktop, open windows, etc.). So no single application can ever access all of the VRAM on a graphics card. And if one does, things can get wonky in Windows itself (well, not disastrously so, as it just spills into main memory, causing slower GPU memory access).

This was first introduced for the Windows desktop back in Vista. Back then you could enable or disable it; you can no longer disable it (AFAIK), as that is how Windows renders everything you see on screen now.

What Is Desktop Composition - How To Enable Or Disable It? [SOLVED] (digicruncher.com)

I haven't experimented with it, but it's possible that a single display at low resolution would result in the lowest system VRAM reservation for compositing, versus, say, my setup, which is 2x 4K displays.
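The display-count and resolution guess above can be sized roughly. This sketch only counts full-screen desktop surfaces, assuming 4 bytes per pixel and double buffering (both assumptions); composition also keeps an off-screen surface per open window, plus driver overhead, so treat the result as a lower bound rather than what Windows actually reserves. Function names are hypothetical.

```python
from typing import Iterable, Tuple

BYTES_PER_PIXEL = 4  # assumption: 8-bit RGBA desktop surfaces


def surface_mib(width: int, height: int, buffers: int = 2) -> float:
    """Size of one display's desktop surface chain, in MiB."""
    return width * height * BYTES_PER_PIXEL * buffers / (1024 ** 2)


def desktop_estimate_mib(displays: Iterable[Tuple[int, int]], buffers: int = 2) -> float:
    """Lower-bound estimate summed over all attached displays."""
    return sum(surface_mib(w, h, buffers) for w, h in displays)


if __name__ == "__main__":
    print(desktop_estimate_mib([(3840, 2160), (3840, 2160)]))  # 126.5625
    print(desktop_estimate_mib([(1280, 720)]))                  # 7.03125
```

Two 4K displays come out around 127 MiB versus about 7 MiB for a single 720p display, which fits the guess that one low-resolution display minimises the reservation, but it is far below the 800 MB reported in the thread linked below, suggesting most of that comes from other surfaces and overhead.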

Here's a thread where users share how much VRAM Windows reserves on their system even when there are no applications open.

Windows 10 uses too much VRAM (linustechtips.com)

"Nothing open and Windows uses 800MB of VRAM. That's a lot because the GTX 1050 only has 2GB of VRAM and it's pissing me off. Does anyone have a solution for this?"

There was a Windows blog post quite a few years ago that talked about Windows graphics compositing for the desktop, but I can't find it. It's possible the blog post by the Windows team member doesn't even exist anymore.

Regards,

SB

As I said, if this were the case, Cyberpunk/TLOU wouldn't be able to use 7500 MB of raw dedicated memory. And nothing happened to Windows while playing them.


I mean, if you really want, I can put my older GPU back in and show you that, despite all the free VRAM available, the game will still refuse to use more than 7.2 GB and will start reducing its own usage if I put another VRAM-consuming app on the card. I have a spare GT 1030, and I did experiments before with Forspoken (another game that caps itself at 7.2 GB total allocation + 6.4 GB dedicated VRAM usage [so the hard 80% cap rule these games appear to use] no matter how much free VRAM you have). In that experiment, the game used at most 7.2 GB. I connected my monitor to the GT 1030, which moved all of the DWM.exe and compositor VRAM load onto the GT 1030. So I effectively had 0.0/8.0 GB usage on the 3070: completely free, used by literally nothing, and the game still refused to use more than 7.2 GB. And once I assigned Chrome to the 3070, the game reduced its VRAM usage to 6.7 GB, similar to what happens above.

I really don't feel like putting it back in again, but I can if you really don't believe me. The reason I don't use them together is that, sadly, it raises the 3070's temperatures a lot when they sit next to each other.

Even if I demonstrate that behaviour with two GPUs, I'm pretty sure it will amount to nothing, as I can't get myself heard anyway.

I found the post with the experiment:

Forspoken (Project Athia) [PS5, PC]


You can literally assign an entirely free 8 GB buffer solely, purely, exclusively to the game, and these games will still refuse to use more than 7.2 GB and will even reduce their usage if you open extra applications...

I can do the same experiment for Jedi Survivor too, no problem, if you really want (and go one step further and show that even on a free GPU with no Windows compositor load, it will still reduce its own VRAM usage the moment I put an extra app on it, and still restrict itself to 7.2 GB regardless).

It really is free VRAM. I had my suspicions and doubts too, but I literally went to extremes to prove otherwise. I wouldn't make a fuss about it if I weren't sure it was free VRAM. It is.

I personally wouldn't have any beef with, or rather would have come to accept, the 7.2 GB limitation (so that the remaining VRAM can be used for other stuff). The problem is that the other stuff still ends up reducing the 7.2 GB budget anyway. That's my problem... 1 GB of VRAM going unused seems super wasteful to me. If TLOU/Cyberpunk/Halo/AC Valhalla can use it without any problems whatsoever, the most popular engine, Unreal Engine, should too, so that most people get the best performance.


Flappy Pannus

Veteran

Christ, you wonder why shader stuttering never got much attention before DF, with reasoning like this. How do you know what the source of the stutters was in the run you're comparing against?

You clear your shader cache, and you compare against the same sections you played previously to make a determination if stuttering has improved. This is really basic stuff.
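That comparison can be made a little more objective by counting spikes in a frame-time capture rather than eyeballing it. A minimal sketch, assuming you have per-frame times in milliseconds for the same section, each run starting from a cleared shader cache; the 2x-median spike threshold is an arbitrary choice for illustration, not an industry standard, and the function names are hypothetical.

```python
from statistics import median
from typing import Sequence, Tuple


def stutter_count(frametimes_ms: Sequence[float], factor: float = 2.0) -> int:
    """Count frames that took longer than `factor` times the run's median frame time."""
    if not frametimes_ms:
        return 0
    typical = median(frametimes_ms)
    return sum(1 for t in frametimes_ms if t > factor * typical)


def compare_runs(before_ms: Sequence[float], after_ms: Sequence[float],
                 factor: float = 2.0) -> Tuple[int, int]:
    """Stutter counts for two captures of the same section (e.g. before/after a patch)."""
    return stutter_count(before_ms, factor), stutter_count(after_ms, factor)


if __name__ == "__main__":
    before = [16.7] * 10 + [50.0, 80.0]  # made-up sample data
    after = [16.7] * 11 + [40.0]
    print(compare_runs(before, after))   # (2, 1)
```

Using the median rather than the mean keeps the threshold from being dragged upward by the very spikes you are trying to count.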


@Flappy Pannus That's a pretty terrible tweet for a reviewer

It's not always the big bad publisher.

@Remij I should start re-reading Game Engine Architecture. I feel like a lot of this stuff is in there. There's a lot more to games than APIs. Concurrency is a hard problem, but there's a lot of knowledge out there about what's good and what's obviously bad.

And the threading system is different between console and PC too.


The knowledge is out there but doesn’t easily pass down to the next generation. We’ve had multi-threaded applications for decades yet I still see silly and very dangerous mistakes like storing state in singletons. The reality is that a lot of people don’t actually know what they’re doing.
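A small Python illustration of the singleton hazard and two common alternatives: passing state explicitly, or making the locking on genuinely shared state explicit. Python's GIL makes the race rare, so this shows the shape of the problem rather than reliably reproducing it; all class and function names are hypothetical.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


class GlobalState:
    """Anti-pattern: one process-wide object holding per-task state."""
    _instance = None

    def __new__(cls):
        # Even this lazy creation is racy without a lock.
        if cls._instance is None:
            cls._instance = super().__new__(cls)
            cls._instance.current_job = None
        return cls._instance


def unsafe_process(job_id: int) -> int:
    state = GlobalState()
    state.current_job = job_id     # another thread may overwrite this...
    return state.current_job * 10  # ...before it is read back here


@dataclass(frozen=True)
class JobContext:
    job_id: int


def safe_process(ctx: JobContext) -> int:
    # State travels with the call, so there is nothing shared to race on.
    return ctx.job_id * 10


class SharedCounter:
    """When state genuinely must be shared, make the locking explicit."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._value = 0

    def add(self, n: int = 1) -> None:
        with self._lock:
            self._value += n

    @property
    def value(self) -> int:
        with self._lock:
            return self._value


def run_jobs(n_jobs: int) -> list[int]:
    with ThreadPoolExecutor(max_workers=8) as pool:
        return list(pool.map(lambda i: safe_process(JobContext(i)), range(n_jobs)))
```

`unsafe_process` is only correct when called from one thread at a time; `safe_process` and `SharedCounter` stay correct under any interleaving.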

Oooof. Cardinal sin.

That was the biggie even when making an indie: don't allocate memory at run time.
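The "don't allocate at run time" rule usually means creating everything up front and recycling it during gameplay. A minimal object-pool sketch with hypothetical names; Python still allocates internally (floats are boxed objects), so this only shows the pattern, which in C++ would typically be a fixed array plus a free list.

```python
from dataclasses import dataclass


@dataclass
class Particle:
    x: float = 0.0
    y: float = 0.0
    alive: bool = False


class ParticlePool:
    """Creates every Particle up front (e.g. during a loading screen); the frame
    loop then only recycles existing objects instead of allocating new ones."""

    def __init__(self, capacity: int) -> None:
        self._items = [Particle() for _ in range(capacity)]
        # Stack of free slot indices; popping from the end hands out slot 0 first.
        self._free = list(range(capacity - 1, -1, -1))

    def acquire(self, x: float, y: float) -> int:
        if not self._free:
            # Failing loudly beats silently allocating mid-frame; size the pool
            # for the worst case at load time instead.
            raise RuntimeError("particle pool exhausted")
        idx = self._free.pop()
        p = self._items[idx]
        p.x, p.y, p.alive = x, y, True
        return idx

    def release(self, idx: int) -> None:
        p = self._items[idx]
        if not p.alive:
            raise ValueError(f"slot {idx} released twice")
        p.alive = False
        self._free.append(idx)

    def get(self, idx: int) -> Particle:
        return self._items[idx]
```

Handing out slot indices rather than new objects keeps the cost of spawning a particle constant and predictable, which is the point of the rule.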


Let's hope the devs can do something with this info to patch the game. And maybe hire that guy!

And people were getting upset when I called Jedi Survivor's problems a "Dev Skill Issue", and now the proof is out. It was so very obvious how a game so thoroughly unimpressive could be so demanding. Like I said earlier, these "new gen devs" (Saints Row, Jedi Fallen Order, etc.) pale in comparison to the PS360 devs. So very sad.


I'm actually very surprised that a AAA budget Star Wars game released by EA, and by a respected game developer (Respawn) would have these kinds of mistakes. I know a lot of people are leaving game companies to go into research or to work at Nvidia or UE where they don't have to be tied into game crunch and release cycles, so maybe some of these big studios really are losing their technical expertise.

EA has been bleeding high-end talent for a while. Look at DICE: the current devs there are complete amateurs, while the old heads left and opened up the studio making ARC Raiders/The Finals. Look at their Madden devs, thoroughly incompetent. Look at their FIFA devs, they're too afraid to alter legacy code. Again, all of this just indicates skill issues. I mean, look at Battlefront 1, released by DICE on XB1, and then try to tell me that what the Respawn devs are doing on a console with 6x more power is impressive? 648p, FSR, it's just embarrassing. Just shit programming, and you gotta call a spade a spade.

It's not just EA either; a lot of third-party AAA devs have lost a bunch of talent. Look at Saints Row, Gotham Knights, etc. Suddenly a lot of games are doing way less with more? That can't be mere coincidence. Only a few studios and publishers make it a priority to keep their most skilled talent, which IMO is everything in the games industry.


Inuhanyou

Veteran

This cut-off is somewhat misleading. He was trying to be candid about what it would take for them to catch up to Sony in market share, and there are other factors at work besides just the slate of software you have for a few years.

This is kind of off-topic, but it's in relation to Redfall. I don't know if anyone had the opportunity to catch Phil's interview today, but following the insane things he was saying, he should be fired. The end of his interview was actually, IMO, worse than Mattrick's "we have a product for them, it's called the 360" moment.

Of course it is contradicted by them immediately making most Bethesda games exclusive and claiming many Activision games outright as exclusive if the deal goes through. Of course exclusive games would force people to their corner and ecosystem, that's the content wars.

Calling for people to be fired has no place in technical discussions.

The problem is that he's wrong, and with an attitude like that, he should be fired. Let's not forget that Nintendo went from the Wii to the Wii U, so from 100 million consoles sold to 15 million, and they still managed to recover from it. I understand what he's saying about digital libraries, and the sunk cost fallacy is a thing. Here's the issue though: in the games business, content is king, period. If you don't make good games, nothing else matters. When Sony was struggling with the PS3, they took huge risks on new IPs to revitalize the system. Again, people have shown that they'll gladly abandon their library to get experiences they couldn't get elsewhere. That's why the 360 was successful. You could play your PS2 games on PS3, but most people did not care. When you get a new console, you want "next-gen vibes" and a new experience. However, they launched a new console with the same UI and the same controller, and there were no next-gen vibes about it. Imagine giving the green light to that as the head of Xbox; completely insane.


Frankly, I was of the mindset that Phil should have been fired halfway through the Xbox One generation, because it was obvious that he didn't get it. Going out in public and basically telling people not to buy your console is insane. Combine that with the fact that he can't manage his way out of a parking lot, and he should be fired ASAP.
