@Kaotik Yeah, could be. I think the play for the 7900 XTX is to get the cheapest AIB card, as long as its cooler is bigger/better than the stock one, then at a minimum undervolt the card to see if the max clocks go up. Buying the super-expensive models is probably dumb, but a slightly more expensive one might be worth it if you can get a 400 W limit. And obviously, if you want to overclock, you definitely do not want the reference model.


AMD RX 7900XTX and RX 7900XT Reviews

- Thread starter DavidGraham


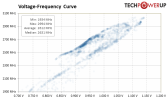

> There's a wide variety between samples; TPU, for example, got a pretty good sample.

Part of the problem isn't variability between samples, it's variability between loads. I've seen some examples throttle way back down to 1900 MHz and below in super compute-heavy workloads, but I've also seen some happily clock way up to 3 GHz and beyond with the right workload.

Unless the various reviewers are showing typical clocks with the exact same workload and the exact same title, comparisons between them are going to be nearly meaningless for RDNA3. That hasn't been the case with most previous cards, where different titles might show a 100-200 MHz variance.

Even just looking at TPU, their 7900 XTX sample has a 1000+ MHz spread between min and max clocks, and those are just samples from their 1440p game benchmarking suite. Synthetic tests have even more variability.


AMD Radeon RX 7900 XT & Radeon RX 7900 XTX Creator Review

AMD has just launched its newest top-end Radeons - the RDNA3-based RX 7900 XT and RX 7900 XTX - and for creators, there's a lot to be intrigued by. That includes the fact that AMD is delivering the least-expensive 24GB GPU we've seen to date. In this article, we'll explore performance of both...

techgage.com



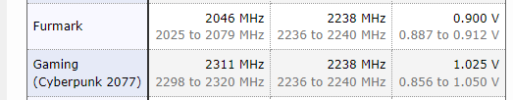

Furmark does seem to be one of the worst-case compute loads, and it really makes RDNA3 upset.

For example, a reference 6950 XT has a ~250 MHz spread between Furmark and a real-world game load like Cyberpunk 2077. Not too bad, and about what you'd expect.

The 7900 XTX, though... an 850-900 MHz spread, all the way down to sub-1.6 GHz.

RDNA3 is also strange in its V/F curve behaviour overall.

It's almost as if certain workloads trigger a higher voltage at the same displayed or reported clock rate, kind of like what you see with Intel CPUs getting hammered by AVX2 or AVX-512.
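A textbook first-order model of CMOS switching power helps show why a higher voltage at the same reported clock matters. This is a generic approximation, not anything AMD has published about RDNA3's V/F behaviour:

```latex
P_{\text{dyn}} \approx \alpha \, C \, V^{2} \, f
```

Here α is the activity factor (how much of the chip switches per cycle), C the switched capacitance, V the supply voltage and f the clock. At a fixed f, a 10% voltage bump costs roughly 1.1² ≈ 1.21, i.e. about 21% more dynamic power, and a heavier load (higher α) adds more on top, so the same displayed clock can hide very different power draw.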

Jawed

Legend

A GPU is a whole bunch of modules working together, each drawing its own power according to load. The GPU then scales clocks according to thermals and available current.

With "front end clock" decoupled from "shader clock" there's more opportunity for each module to run at the required power for its workload.

It's worth contemplating that "front end clock" may be something of a misnomer, i.e. everything in the GPU that isn't the shader ALUs might be running at that clock, e.g. texturing hardware, ray accelerators and ROPs.

As to whether there are bugs causing extreme power consumption, well, it seems people have forgotten about all the classic synthetics...

gamervivek

Regular

Not so much a "review" as someone being incredibly unhappy with their card.

The core-to-hotspot delta on his card is ridiculous, almost 60 °C. And it'll most likely get worse with time.

It gets 15% more power and it scales 15% in Tomb Raider. That is impressive! But Cyberpunk is more impressive: a 15% power uplift and a 25% performance gain? Are you sure this is not fake?

21% in Cyberpunk. I don't think it's fake. The architecture is power hungry. The reference cards have a 350 W limit. That card has a stock 400 W limit (at least that's what it's hitting in the overlay), and it still scales up to 460 W. They made a big deal about not having to buy a new case and about it being a direct swap for the 6950 XT, but that was an absolutely dumb decision. I'm not sure how they convinced themselves that marketing this thing as a small, efficient GPU was a good idea. They should have made the reference card bigger and let it consume more power. The people spending $1k+ on a GPU don't care; they'll spend more, replace a case, etc. They should have just released the card in the best light possible, with a 450 W stock limit or something, and kept the XT model at a more reasonable 350 W.
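The "15% more power, 21% more performance" numbers only look paradoxical if frame rate is treated as energy output. It is just work done per second, so the result simply means performance per watt improved by about 5% at the higher limit. A minimal sketch of the arithmetic, using the approximate 400 W to 460 W and +21% Cyberpunk figures quoted in the post above (the helper name `perf_per_watt_change` is purely illustrative):

```python
def perf_per_watt_change(power_gain: float, perf_gain: float) -> float:
    """Relative change in performance per watt.

    Both arguments are fractional gains, e.g. 0.15 for +15%.
    Returns a fraction, e.g. 0.05 for +5% perf/W.
    """
    return (1.0 + perf_gain) / (1.0 + power_gain) - 1.0


# Approximate figures from the thread: ~400 W stock scaling to ~460 W,
# with a reported +21% in Cyberpunk 2077.
stock_w, raised_w = 400.0, 460.0
power_gain = raised_w / stock_w - 1.0  # about 0.15
change = perf_per_watt_change(power_gain, 0.21)
print(f"power +{power_gain:.0%}, perf/W {change:+.1%}")  # power +15%, perf/W +5.2%
```

Nothing here breaks physics: efficiency at the higher limit is only modestly better, which fits with some other limit (clocks, a bottleneck elsewhere in the chip) being relieved rather than free performance appearing.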

davis.anthony

Veteran

They should have chucked an AIO on it like they did with the Fury X.

DavidGraham

Veteran

> That card has stock 400W (at least that's what it's hitting in the overlay)

Overlays don't read the whole TDP of AMD cards; in that video, that card is probably hitting at least 520 W.

DegustatoR

Veteran

A direct power comparison against the 4090 could've been even less flattering for N31. But I agree that the whole "bigger isn't always better" line was a very strange stance to take, because as things stand bigger actually is better, and it would very likely have been better for the 7900 reference designs too.

You could say the same about the efficiency blog post from a few months back:

community.amd.com


We’re thrilled with the improvements we’re making with AMD RDNA™ 3 and its predecessors, and we believe there’s even more to be pulled from our architectures and advanced process technologies, delivering unmatched performance per watt across the stack as we continue our push for better gaming.

Advancing Performance-Per-Watt to Benefit Gamers

By: Sam Naffziger, Senior Vice President, Corporate Fellow, and Product Technology Architect The demand for immersive, realistic gaming experiences is constantly pushing the boundaries of technology, driving enhancements to support features like raytracing, variable rate shading, and advanced...

DavidGraham

Veteran

They were obviously in the dark about the whole Ada lineup, they thought it would be power hungry, I think they probably underestimated Ada's performance too.

DegustatoR

Veteran

I find it hard to believe. All IHVs tend to know very well what's coming from their competitors long before it is unveiled to the public. At the very least, they can easily change the cooler designs and power limits.

> But by the laws of thermodynamics, how can you put in 15% more power and get out 21% more performance? Did AMD build a perpetual-motion GPU?

It might shift a bottleneck; remember that the shaders and the front end have separate clock domains.

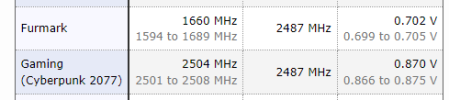

It looks like the 4090 also has trouble holding its maximum clock in every game. There are a lot of games running at 2200 MHz.

7900 XTX:

4090:

www.techpowerup.com


www.tomshardware.com


ASUS Radeon RX 7900 XTX TUF OC Review - Amazing Overclocking

The ASUS Radeon RX 7900 XTX offers fantastic overclocking potential. Thanks to a triple 8-pin power input, the XTX is no longer power-limited, and the amazing quad-slot cooler ensures the card stays cool and quiet at all times. After manual overclocking, the performance uplift to RTX 4080 was an...

AMD Addresses Controversy: RDNA 3 Shader Pre-Fetching Works Fine

These aren't the bugs you're looking for.
