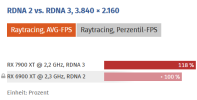

It'd be interesting if they specifically chose 300W for the figure if [1] that's below the knee of the power curve for 7900 XTX and [2] above the knee of the power curve for 6900 XT. That'd be a classic case of manipulating the numbers while simultaneously using real data which isn't terribly relevant to the shipped product as it's spec'd as a 355W part.

Although I still don't think that'd necessarily change things much. I'm just guessing something went horribly horribly wrong and the shipping product ended up being something other than what they used for the testing.

Regards,

SB