DegustatoR

Veteran

There's little doubt NVidia will be using chiplets at some point...

Do we consider HBM 2.5D integration "chiplets"?

Is such a thing a GPU?

A GPU can't work without memory and even has it on die.

Yep, eventually NVidia will put its IP across multiple chiplets to construct a GPU.

So it has to be made with IP from one company? Then it's chiplets?

I'm talking about a GPU (for consumers, by the way). You appear to be trying to redefine a GPU as something imaginary - good luck with that.

I'm asking if HBM, which is integrated through an interposer (a more complex and advanced form of die-to-die interconnect) and is itself a 3D design made of several stacked dies, is considered a "chiplet" design in general. It's not really clear to me why it shouldn't be.

Yes, agreed, there's a variety of ways for a product to be chiplet-based. For example, Xenos can be described as a chiplet product, and it's also a chiplet GPU: the pair of chips implements GPU functionality. You can say that Voodoo2:

And the point I'm trying to make is that there is more than one way to do something as "chiplets". This can also be an area where one company gets the upper hand simply by choosing a better design for its "chiplet" product.

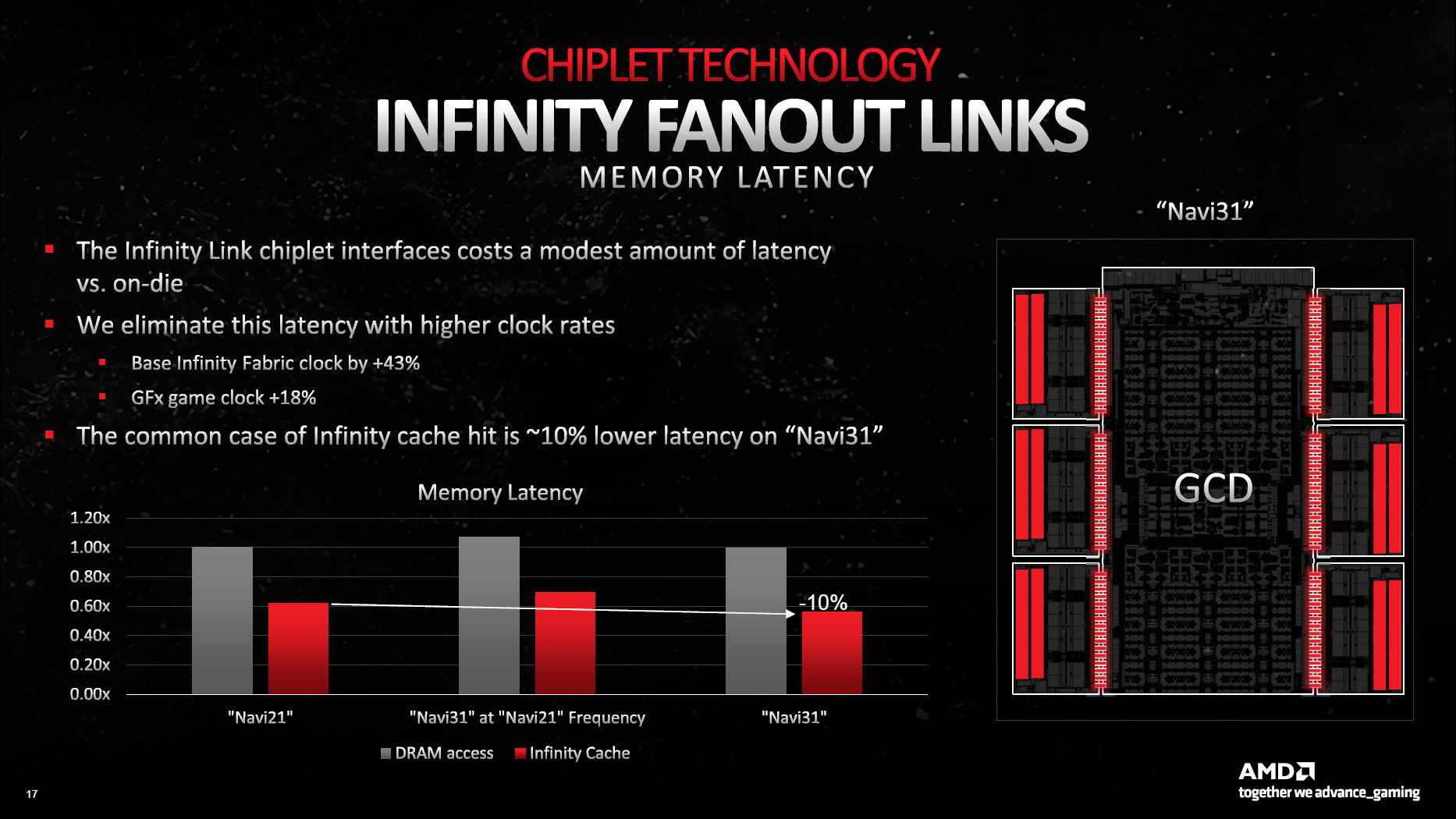

Probably, but will it happen soon? According to AMD, they were motivated by the diminishing returns of manufacturing cache and interfaces on advanced nodes. The question, though, is what they had to sacrifice. Infinity Cache latency benchmarks will be interesting.

www.techspot.com

That lack of demand and abundance of stock is evident on eBay, where many RTX 4080 cards are selling for around or just over their official store prices, a far cry from the bad times when GPUs were being scalped for three or four times their MSRP. VideoCardz reports that one scalper is offering six RTX 4080s from various manufacturers for MSRP. The seller writes that the "Market isn't what I thought.

It's a weird situation in the UK at the moment. There seems to be loads of really well-priced stock of the 3070 Ti and below, then nothing at all in the 3080/3090 range. Then tons of 4080s at around MSRP, then no 4090s.

Looks like people can see it for the rip-off it is.

Is this even true???

The Newegg screenshot is accurate but the editorialized comment is misleading. A specific Gigabyte 4080 variant is the top-selling model (over whatever time scale they use, I'm not sure). To claim that the 4080 is the best-selling GPU you would have to sum sales across all brands and models. Still, I suppose the fact that there are three 4080 models in the top 10 means the card is competing well vs. popular midrange offerings (3060, 6600 etc.).

Given that the cheapest 4090 you can buy has been at least 40% above MSRP since not long after launch, I could see this being true.