With an HDD and no virtualisation of geometry, this would be impossible to do. No available GPU, not even a 3090, is able to render this exact scene with traditional methods. It would mean tons of memory and a GPU running at very low utilisation because of the huge number of polygons. And without continuous LOD like in Nanite, you need multiple LODs of each asset.

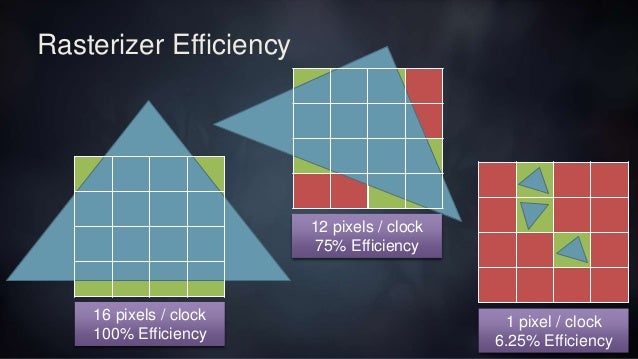

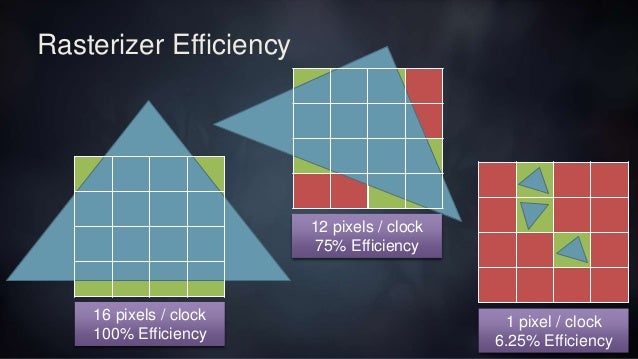

1 polygon per pixel means only 6.25% GPU shading efficiency; this comes from the DICE presentation "Optimizing the Graphics Pipeline with Compute". If you want to render the same scene with better efficiency without Nanite, you need to use more normal maps, objects that don't cast shadows, lost detail and so on, with shitty manual LODs. It would not look the same at all and it would ask for more work from the artists. The other choice is to keep all assets in RAM with a compute rasterizer, but in the end that is probably not workable for a full game, even with reduced complexity, because each time you change biome or landscape you need to refill the whole memory.
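To make the 6.25% figure concrete, here is a minimal sketch. It assumes the number corresponds to 1/16, i.e. hardware that handles pixels in groups of 16 per triangle so that a one-pixel triangle uses a single slot. That group size is my assumption to match the quoted figure, not something stated above, and `shading_efficiency` is a hypothetical helper name.

```python
def shading_efficiency(pixels_covered: int, group_size: int = 16) -> float:
    """Fraction of a fixed-size pixel group doing useful work for one triangle.

    group_size=16 is an assumption chosen so that a 1-pixel triangle
    yields the 6.25% quoted in the post.
    """
    if pixels_covered < 0 or group_size <= 0:
        raise ValueError("pixels_covered must be >= 0 and group_size > 0")
    # A triangle cannot use more slots than the group provides.
    useful = min(pixels_covered, group_size)
    return useful / group_size


if __name__ == "__main__":
    for pixels in (1, 4, 16):
        print(f"{pixels:>2} px/triangle: {shading_efficiency(pixels):.2%}")
```

Under that assumption, efficiency only reaches 100% once a triangle covers a full group, which is why pixel-sized polygons hurt a traditional pipeline so much.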

You need everything: an NVMe SSD is now a must-have, and DirectStorage will be a must-have. The biggest gap between the two console generations is the SSD and I/O system, and it is essential.

Without an SSD this level of geometry is impossible.

EDIT: Imagine that for each level you need to load 64 GB of data from an HDD. That is a 10 minute loading time with a fast HDD without compression, maybe 5 minutes with compression, and 2 minutes with a SATA SSD, or 1 minute with compression. It is 11 seconds with the PS5 SSD without compression and 5.5 seconds with compression. And there would be no way for game designers to do portals with high-end graphics: no Doctor Strange game, or, like the rumor says, Doctor Strange in Spider-Man 2.
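The loading times above are just size divided by throughput. Here is a rough sketch of that arithmetic. The HDD and SATA throughputs (0.11 GB/s and 0.55 GB/s) are assumptions picked to roughly match the 10 minute and 2 minute figures, 5.5 GB/s is the PS5's raw SSD figure, and the 2:1 compression ratio is an assumption matching the "half the time" estimates. `load_time_seconds` is a hypothetical helper.

```python
def load_time_seconds(size_gb: float, throughput_gb_s: float,
                      compression_ratio: float = 1.0) -> float:
    """Time to load size_gb of uncompressed data.

    compression_ratio=2.0 means the data on disk is half the size, so
    the effective throughput doubles (decompression cost is ignored).
    """
    if throughput_gb_s <= 0 or compression_ratio <= 0:
        raise ValueError("throughput and compression ratio must be positive")
    return size_gb / (throughput_gb_s * compression_ratio)


# Assumed raw throughputs in GB/s; only the PS5 value is a published figure.
DRIVES = {
    "fast HDD": 0.11,
    "SATA SSD": 0.55,
    "PS5 SSD": 5.5,
}

if __name__ == "__main__":
    level_size_gb = 64
    for name, throughput in DRIVES.items():
        raw = load_time_seconds(level_size_gb, throughput)
        packed = load_time_seconds(level_size_gb, throughput, compression_ratio=2.0)
        print(f"{name:>8}: {raw:7.1f} s raw, {packed:7.1f} s with 2:1 compression")
```

With these assumed numbers the HDD lands just under 10 minutes, the SATA SSD just under 2 minutes, and the PS5 SSD between 11 and 12 seconds raw, which is the same ballpark as the estimates above.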