No DX12 Software is Suitable for Benchmarking *spawn*

- Thread starter trinibwoy

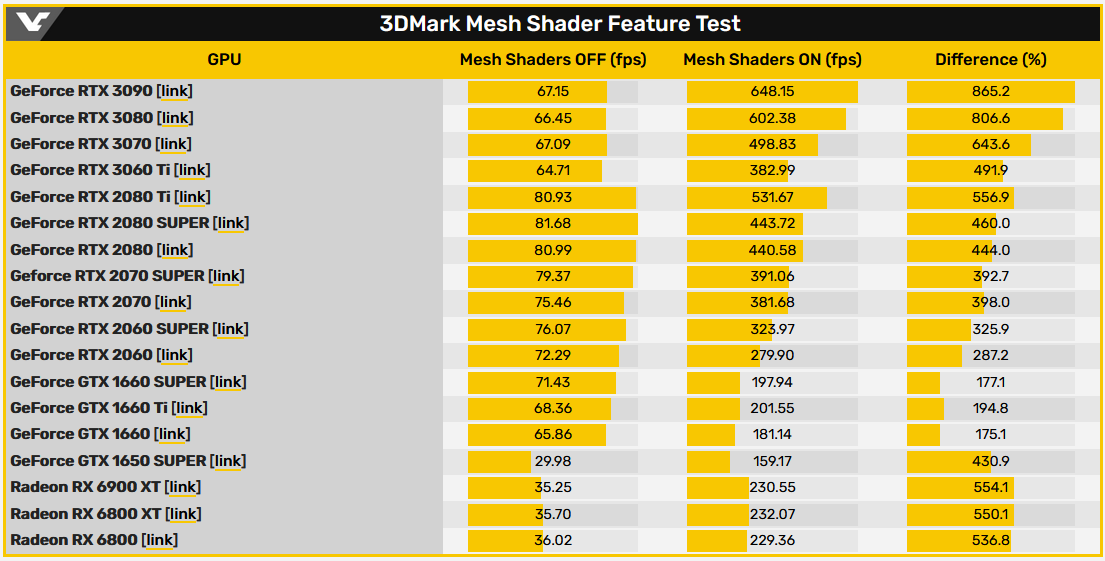

The off results are very odd for all GPUs. Hopefully some explanation is uncovered.

The most likely explanation is that the benchmark was quickly thrown together with no optimization. With mesh shader off the card is seemingly idle for long periods of time with none of the functional units doing any work.

With mesh shader on the viewport culling hardware and caches are seeing the most action. The VPC does "viewport transform, frustum culling, and perspective correction of attributes".

@trinibwoy That's pretty much what you'd expect though, isn't it? I'm assuming the non-mesh-shader path is written as kind of a worst case, where you load in a bunch of vertex data, run your vertex shader and then let the fixed-function raster units do all of the culling. The vertex shader pipeline is not good at taking advantage of the parallelism of GPUs because of the way vertices are indexed and output by the input assembler, or something like that. The mesh shader pipeline also removes the need to write out some index buffers to pass between shader stages. This test is probably written so it's not really doing much other than processing geometry, and the mesh shaders do it way faster. AAA games would do more culling with compute shaders to get some similar benefits, but I don't know how you'd compare.

I would still expect to see a lot of vertex attribute fetch and fixed function culling activity with mesh shaders off. Instead there's nothing.

https://support.benchmarks.ul.com/e...erview-of-the-3dmark-mesh-shader-feature-test

"

The test runs in two passes. The first pass uses the traditional geometry pipeline to provide a performance baseline. It uses compute shaders for LOD selection and meshlet culling. This reference implementation illustrates the performance overhead of the traditional approach.

The second pass uses the mesh shader pipeline. An amplification shader identifies meshlets that are visible to the camera and discards all others. The LOD system selects the correct LOD for groups of meshlets in the amplification shader. This allows for a more granular approach to LOD selection compared with selecting the LOD only at the object level. The visible meshlets are passed to the mesh shaders, which means the engine can ignore meshlets that are not visible to the camera.

"
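For anyone who wants the culling and LOD step from that description in concrete terms, here is a rough CPU-side sketch in Python. It is not UL's shader code (that isn't public in this thread); the bounding-sphere test, the distance-based LOD ranges, and every name in it (`Meshlet`, `sphere_in_frustum`, `select_lod`, `amplification_pass`) are my own assumptions, picked only to illustrate "discard meshlets outside the frustum, pick a LOD for the rest".

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
# Plane as (nx, ny, nz, d); a point p is inside when n.p + d >= 0 (assumed convention).
Plane = Tuple[float, float, float, float]


@dataclass
class Meshlet:
    center: Vec3   # bounding-sphere center (hypothetical layout)
    radius: float  # bounding-sphere radius


def sphere_in_frustum(center: Vec3, radius: float, planes: Sequence[Plane]) -> bool:
    """Conservative test: reject only if the sphere is fully behind some plane."""
    for nx, ny, nz, d in planes:
        signed_dist = nx * center[0] + ny * center[1] + nz * center[2] + d
        if signed_dist < -radius:
            return False
    return True


def select_lod(center: Vec3, camera: Vec3, lod_ranges: Sequence[float]) -> int:
    """Return the first LOD whose distance limit exceeds the camera distance."""
    dist = math.dist(center, camera)
    for lod, limit in enumerate(lod_ranges):
        if dist < limit:
            return lod
    return len(lod_ranges)  # beyond the last limit: coarsest LOD


def amplification_pass(meshlets: Sequence[Meshlet], planes: Sequence[Plane],
                       camera: Vec3, lod_ranges: Sequence[float]) -> List[Tuple[int, int]]:
    """Return (meshlet index, LOD) for every meshlet that survives culling."""
    survivors = []
    for index, meshlet in enumerate(meshlets):
        if sphere_in_frustum(meshlet.center, meshlet.radius, planes):
            survivors.append((index, select_lod(meshlet.center, camera, lod_ranges)))
    return survivors


if __name__ == "__main__":
    # Toy "frustum": just the slab -1 <= x <= 1.
    slab = [(1.0, 0.0, 0.0, 1.0), (-1.0, 0.0, 0.0, 1.0)]
    scene = [Meshlet((0.0, 0.0, 0.0), 0.5), Meshlet((5.0, 0.0, 0.0), 0.5)]
    print(amplification_pass(scene, slab, (0.0, 0.0, -10.0), [5.0, 20.0]))
```

On a GPU this loop would run one meshlet (or meshlet group) per thread, and only the survivors would launch mesh shader work. Per the quoted text, the baseline pass does the same kind of selection in compute shaders instead.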

The "traditional" baseline performance test is just compute shaders with some weird bottleneck along the way; the traditional geometry pipeline is essentially ignored and used only as a passthrough to triangle setup and scan conversion for static geometry.

The VPC probably does a minimal amount of work here because culling has already been done in compute shaders before it, and the bottleneck is very likely somewhere in the CS too, or in the CS <--> traditional pipeline interop.

As for the vertex attribute fetch, it's probably done in the vertex shader, the same way it's implemented in the AnvilNext engine.

I think a really important part of this article is the following: "Do note that we chose the best or close to best scores that were available at the time of writing. It is by no means an accurate representation of each architecture performance. It is only meant to provide a basic understanding of how fast each DirectX12 architecture might be."

IOW, they used UL database results, meaning totally non-comparable systems, limitations, drivers and maybe even settings. As nice as a quick overview is to have, I would not put much stock in this batch of results when trying to understand the behaviours of different architectures.

DavidGraham

Veteran

Latest drivers for both. Results are listed as Mesh Shaders Off, Mesh Shaders On:

RTX 3080: 67.32 fps, 584.09 fps

RX 6800 XT: 28.09 fps, 468.77 fps

https://www.pcgamer.com/nvidias-rtx-3080-destroys-amds-rx-6800-xt-in-new-3dmark-test/
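To put those numbers in perspective, here is the arithmetic spelled out as a quick Python snippet. The fps values are the ones quoted above; the dictionary layout and the `speedup` helper are just my own scaffolding.

```python
# fps figures as quoted in the post: (mesh shaders off, mesh shaders on)
results = {
    "RTX 3080": (67.32, 584.09),
    "RX 6800 XT": (28.09, 468.77),
}


def speedup(off_fps: float, on_fps: float) -> float:
    """How many times faster the mesh shader path is than the baseline path."""
    return on_fps / off_fps


speedups = {gpu: speedup(off, on) for gpu, (off, on) in results.items()}

# Cross-vendor ratios (RTX 3080 relative to RX 6800 XT) for each path.
off_ratio = results["RTX 3080"][0] / results["RX 6800 XT"][0]
on_ratio = results["RTX 3080"][1] / results["RX 6800 XT"][1]

if __name__ == "__main__":
    for gpu, factor in speedups.items():
        print(f"{gpu}: {factor:.2f}x faster with mesh shaders on")
    print(f"Off path, 3080 vs 6800 XT: {off_ratio:.2f}x")
    print(f"On path, 3080 vs 6800 XT: {on_ratio:.2f}x")
```

That works out to roughly 8.7x for the 3080 and 16.7x for the 6800 XT, with the gap between the two cards shrinking from about 2.4x on the off path to about 1.25x on the on path. So most of the headline difference comes from the baseline pass, not the mesh shader pass.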

PC Gamer said: To be honest, it doesn't look all that incredible

Couldn’t have said it better myself.

Silent_Buddha

Legend

This quote from the article seems more appropriate for most people.

PC Gamer said: Honestly, I just want to be able to actually buy one of them.

Regards,

SB

How should mesh shading be possible on RDNA1? It's a GPU hardware feature only available on current gen cards and Turing. RDNA1 uses primitive shaders AFAIK.

Maybe he means running mesh shaders in software, similar to Raytracing on Pascal cards?

Actually, it can be potentially hardware accelerated on RDNA ...

It's important to remember that mesh shaders are just a software-defined stage in the API's model of the graphics pipeline. Primitive shaders on RDNA/RDNA2, on the other hand, are a real hardware-defined stage in their graphics pipeline. An implementation of mesh shaders on RDNA doesn't necessarily have to be a 1:1 mapping between software and hardware, so a portion of mesh shaders as a SW stage can be handled with RDNA's own native HW stage while the rest can be done in software (emulation) ...

On PS5 GNM, they expose similar functionality with respect to mesh shaders but we don't see them attempting to advertise this capability at all ...

It’s been in the works for a long time; the focus was just on the perf of the mesh shader path, especially as one of the first uses of it on PC. The “off” case leans heavily on ExecuteIndirect, used in a non-idiomatic way to drive the GPU, so it’s not as interesting. Nice EI test, but not how a production renderer would do anything.

DavidGraham

Veteran

So the problem now lies in the non-mesh-shader path: it doesn't represent the best possible use of resources for the traditional approach, which means the speedups shown for the mesh path are unrealistic?

With UE5 seemingly bypassing mesh shaders in favour of a pure compute path and achieving higher performance for the most part (with obviously stunning results), I'm wondering how much use we'll see of mesh shaders this generation. The same question would apply to the RT hardware vs Lumen which seemingly does something quite similar with less performance hit and without having to rely on the RT hardware.
