@Frenetic Pony 8GB at what resolution? I don't feel like combing through a 16-minute video to figure it out.


Nvidia's 3000 Series RTX GPU [3090s with different memory capacity]

- Thread starter Shortbread
- Tags: nvidia

Since people were asking for examples of 8GB+ VRAM usage... well, here it is: Call of Duty: Black Ops... whatever number (Cold War) using over 8GB even without ray tracing:

See, OK, that's done. There have been other examples all around this forum, of course. So the 3060 Ti and 3070 aren't perfectly ideal GPUs, and perhaps neither is the 3080, since usage is already getting close to 10GB.

8GB+ VRAM usage, but that is not a good indicator of the game "requiring" over 8GB. I have seen that game using over 16GB of VRAM on a 3090 in particular scenes, but the 3080 has no problem handling that same scene with a mere 10GB of VRAM.

Uh... I just looked at RTX 3000 prices. Yikes, ridiculously high! Heck, every GPU got a price hike. My GTX 1660 Super was 3.4 million IDR brand new; now it's 4.6 million IDR brand new...

Yep, using more than 8GB of VRAM doesn't automatically mean a game requires more than 8GB. Some games automatically hog VRAM, maybe to improve streaming.


But then, we don't live in an ideal world. After all, it's not like you can buy any of these new GPUs anyway, or a console for that matter. Heck, maybe by the time you can, if you're willing to go to the extra expense and cards such as the 3080 Ti and 3070 Ti actually exist, you can buy one of those. My only hope is to give the best buying advice I can to whoever comes around here curious. Hell, maybe AMD will suddenly put out better GPUs as well, and Intel's new CEO will somehow make their GPUs worthwhile. We'll wait and see.

That's certainly good to know, and more can only be better, all other things being equal. However, as others have noted, that doesn't necessarily mean the game needs that much VRAM. That's demonstrated here at about 3:25, with the 3070 matching the 6800's performance at 4K without RT. With RT it beats the 6800 with ease, but RT skews the comparison. That said, in the DF video the game allocates over 10GB at 4K with RT, and yet the 8GB GPU comfortably beats even the 16GB 6800 XT in both average and minimum framerates.

Reminds me a bit of the time when Windows Vista was released and people complained that it would suck up all the RAM it could find in the system. No more free RAM *doh*

Yup, unused RAM is wasted RAM... We really need VRAM usage broken down into categories the way system RAM is (used, cached, etc.).

DegustatoR

Veteran

No, it's not. Call of Duty games are well known for using VRAM to cache streaming assets; that hardly means they need that much VRAM.

In Cold War there's a setting that lets you directly control the percentage of VRAM the game will allocate.

And from the benchmarks available, I see zero indication that it actually requires more than 8GB of VRAM, even with RT.

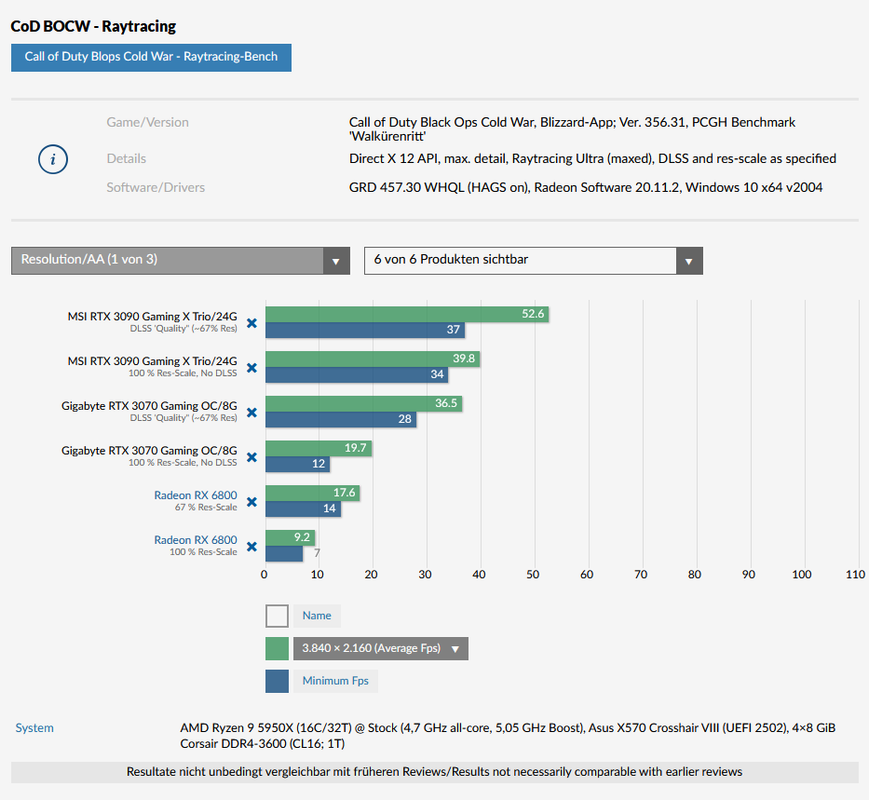

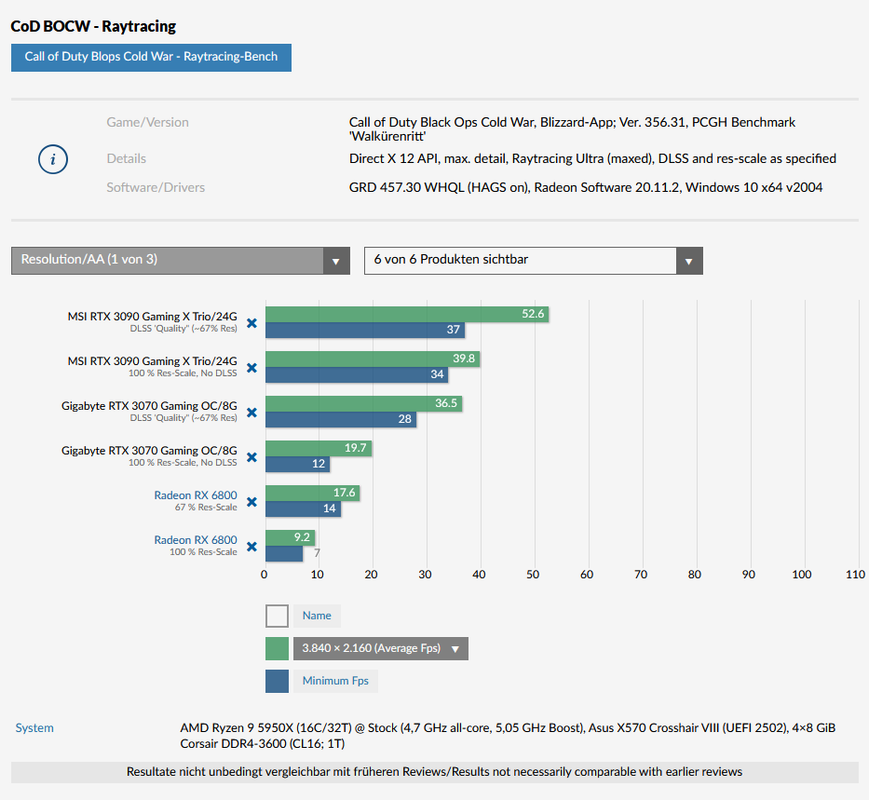

Example one:

https://www.pcgameshardware.de/Call...-Cold-War-Benchmark-Release-Review-1362383/2/

Example two:

https://www.overclock3d.net/reviews...r_pc_performance_review_optimisation_guide/11

It does seem to require more than 6GB at 4K with RT Ultra. But considering that you get <60 fps in that mode even on a 2080 Ti, even with DLSS, that seems like a non-issue.

Bring on the 5000 series. Then I can finally play last year's games properly at actual 4K. I bet they have more RAM too.

Maybe call them the Geforce RTFX 5000 so I can reminisce about that fine old series of the past too. Perhaps AMD can launch a new Radeon 9700 by then as well.


DegustatoR

Veteran

We will have next gen before we can buy current gen

Doubtful. Next gen is more than a year away.

Rootax

Veteran


I was not 100% serious


Looking at the first example at least, the 1st-percentile fps drops to 60% of the average on the 8 GByte card, but only to 85% on the 24 GByte card. Without memory-consuming ray tracing, as in your second example, the 8 GByte cards seem to fare a bit better.
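If you want to make this kind of "1% low vs. average" comparison from your own captures, here's a minimal sketch. It assumes you have a plain list of per-frame times in milliseconds, for example exported from a frame-time logger. The function name `low_to_avg_ratio` and the nearest-rank percentile method are my own choices. Review sites may define "1% lows" differently; some average the slowest 1% of frames instead.

```python
import math

def low_to_avg_ratio(frame_times_ms, pct=1.0):
    """Return (avg_fps, low_fps, ratio) from per-frame times in milliseconds.

    avg_fps is total frames divided by total time.
    low_fps is the fps at the (100 - pct)th percentile of frame times
    (nearest-rank method), i.e. the pct% low. This is one convention among several.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    n = len(frame_times_ms)
    avg_fps = 1000.0 * n / sum(frame_times_ms)
    ordered = sorted(frame_times_ms)
    # Nearest-rank index of the (100 - pct)th percentile frame time.
    rank = math.ceil((100.0 - pct) * n / 100.0)
    slow_ms = ordered[max(rank, 1) - 1]
    low_fps = 1000.0 / slow_ms
    return avg_fps, low_fps, low_fps / avg_fps
```

A perfectly steady run gives a ratio of 1.0. Stutter from assets being streamed in over PCIe drags the low down while barely moving the average, so the ratio falls.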

DegustatoR

Veteran

The difference between 3090 and 3060 Ti is strangely low...

Not really if you consider the difference in their launch dates.

Puget Systems Professional application reviews:

DaVinci Resolve Studio - NVIDIA RTX A6000 48GB Performance

Written on January 28, 2021 by Matt Bach

Adobe Premiere Pro - NVIDIA RTX A6000 48GB Performance

Written on January 27, 2021 by Matt Bach

Adobe After Effects - NVIDIA RTX A6000 48GB Performance

Written on January 26, 2021 by Matt Bach

Adobe Photoshop - NVIDIA RTX A6000 48GB Performance

Written on January 25, 2021 by Matt Bach


A100 vs V100 Deep Learning Benchmarks

January 28, 2021

A100 vs V100 Deep Learning Benchmarks | Lambda (lambdalabs.com)

NVIDIA RTX A6000 Deep Learning Benchmarks

January 4, 2021

RTX A6000 Deep Learning Benchmarks | Lambda (lambdalabs.com)


Best GPUs For Workstations: Viewport Performance of CATIA, SolidWorks, Siemens NX, Blender & More – Techgage

February 5, 2021


We’ve tested a total of 17 graphics cards for this performance look. While many developers behind much of this software would quickly suggest using a Radeon Pro or Quadro over a gaming GPU, there are many cases when those gaming GPUs offer the better bang-for-the-buck. It’s up to you or your company whether or not you’ll suffice with the gaming counterparts, but the professional models feature advanced support, sometimes ECC memory, and in some cases, driver optimizations that propel viewport performance forward in CAD suites (some of which will be seen here).
