> I imagine they might end up being a different version of RT shadows than what UE4 has by default. UE4 has its own RT shadows already...

Doesn't this make hardware performance comparisons difficult if RT shadows don't have the same quality or intensity in games?

Optimizations of PC Titles *spawn*

- Thread starter pjbliverpool

- Start date

> AMD doesn’t have nearly enough resources to do the same.

That's a lame excuse. I'm sure AMD was in bed with console game developers for years.

> That's a lame excuse. I'm sure AMD was in bed with console game developers for years.

Outside of the required work with Sony and Microsoft, I doubt there was much if any collaboration between AMD and console developers. Also, isn’t all of AMD's software tech open source? No top-secret NDAs required like with Nvidia?

> So it's not surprising it runs slower.

Surprising that it runs much slower only on Nvidia's hardware

Odyssey - https://tpucdn.com/review/evga-gefo.../images/assassins-creed-odyssey-2560-1440.png

RTX 2080 - 63 FPS

RX 5700 XT - 55.4 FPS

Valhalla - https://tpucdn.com/review/assassins...nce-analysis/images/performance-2560-1440.png

RTX 2080 - 55.1 FPS

RX 5700 XT - 53.3 FPS

AC Valhalla is the first attempt to port Anvil to DX12; it seems to be heavily skewed toward RDNA simply because AMD was working with them.

Wonder why they didn't leave DX11. Also, scaling is worse in DX12 for Nvidia GPUs as well: the RTX 2080 Ti is just 15% faster than the RTX 2080, while on average it's 21% faster at 1440p.
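To put the shift in numbers, here is a minimal sketch that turns the four FPS figures quoted above into a relative lead. The helper name `percent_lead` is made up for illustration, and the inputs are only the 1440p numbers from the two TPU charts linked above, not new measurements.

```python
def percent_lead(fps_a: float, fps_b: float) -> float:
    """Return how much faster card A is than card B, in percent."""
    return (fps_a / fps_b - 1.0) * 100.0


# (RTX 2080 FPS, RX 5700 XT FPS) at 2560x1440, as quoted in the post above.
results = {
    "Odyssey": (63.0, 55.4),
    "Valhalla": (55.1, 53.3),
}

for title, (rtx_2080, rx_5700_xt) in results.items():
    lead = percent_lead(rtx_2080, rx_5700_xt)
    print(f"{title}: RTX 2080 leads by {lead:.1f}%")
```

With these inputs the RTX 2080's lead drops from roughly 13.7% in Odyssey to roughly 3.4% in Valhalla.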

> One can say Valhalla has more complex scenes

Is there any evidence of the better lighting?

> and judging by Watch Dogs is more a hit and miss

I actually like it more than native + TAA in WDL because it's much more temporally stable in the game.

Default TAA in WDL misses tons of shimmering and edges, but that's simply a trade-off with TAA in the game; they could have made it much more stable at the cost of additional blurriness.

DLSS should not be adjusted for the TAA appearance in any game; it has its own strengths.

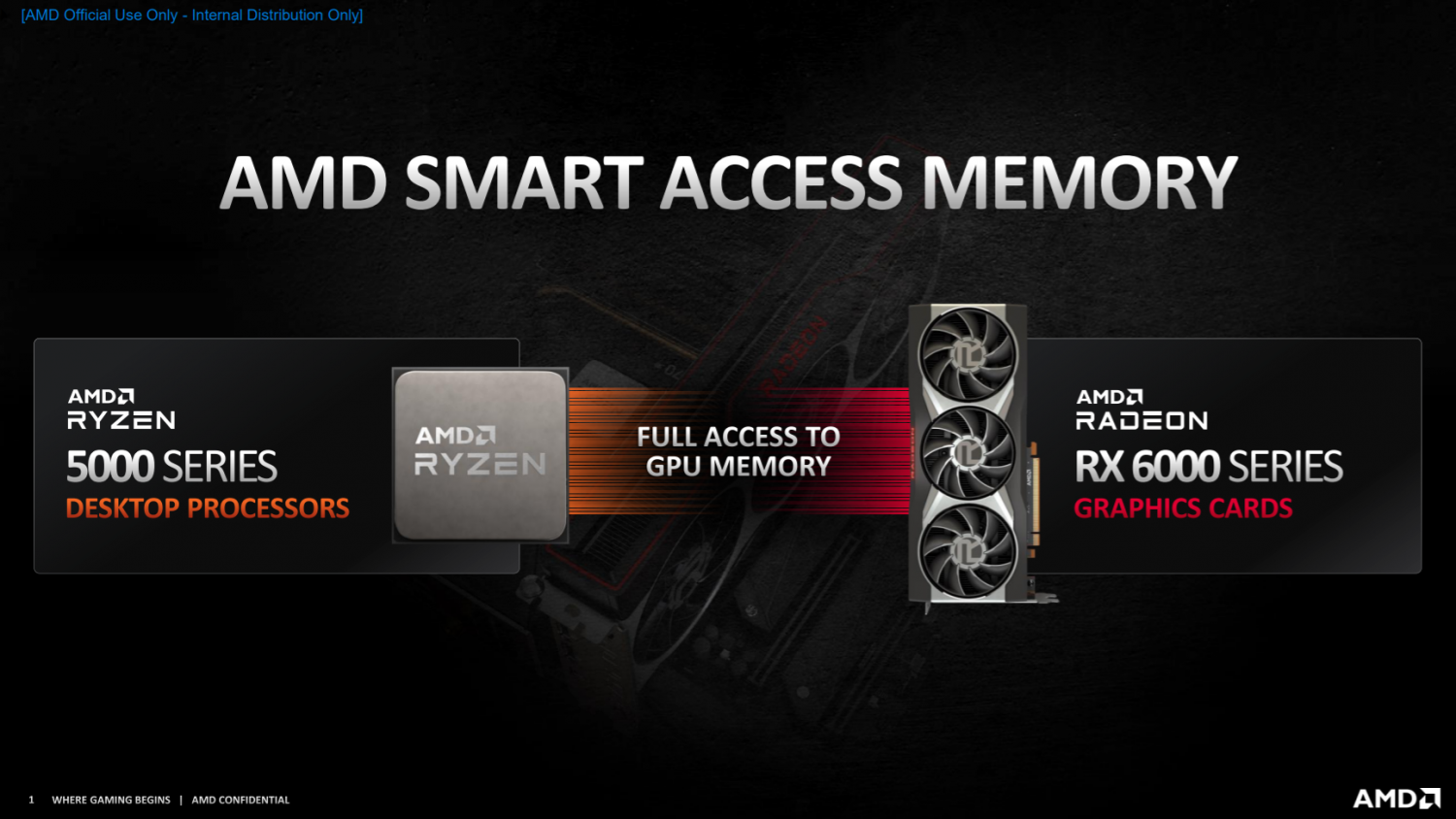

> Also, isn’t all of AMD's software tech open source? No top-secret NDAs required like with Nvidia?

No, Radeon Rays is not open source. Smart Access Memory was also intended to be an exclusive solution, but did not turn out so.

> No, Radeon Rays is not open source. Smart Access Memory was also intended to be an exclusive solution, but did not turn out so.

I’m referring to the tech available to game developers: the FidelityFX array of technologies, TressFX, PureHair, etc. Radeon Rays isn't even for games, and SAM is something separate.

Leoneazzurro5

Regular

Again, what is your point? That developers prioritize optimizing for their sponsors first? It was the same, or worse, with Nvidia.

Here is something:

https://www.nvidia.com/it-it/geforce/games/

This is the VERY LONG list of Nvidia-sponsored titles; the vast majority of them ran quite badly on AMD hardware at the beginning. And some still run considerably better on Nvidia cards, without any reason, as you say. Just look at TPU reviews. So again, why is this OK for you but not the opposite?

> Is there any evidence of the better lighting?

Just look at the reviews, or at the game.

> I’m referring to the tech available to game developers

Radeon Rays ... Custom AABB, GPU BVH Optimization, API backends.

> Doesn't this make hardware performance comparisons difficult if RT shadows don't have the same quality or intensity in games?

Huh? Why would it cause that? All cards using RT acceleration will use that library, not just AMD's.

What he meant is that they're not using UE4's built-in library, but their own. They could have named it SuperLaserTracer9000.dll, but decided to go with amdrtshadows.dll (it's probably straight from AMD and I wouldn't be surprised to see it pop up at GPUOpen sooner or later)

> No, Radeon Rays is not open source. Smart Access Memory was also intended to be an exclusive solution, but did not turn out so.

It was never meant to be an "exclusive solution", but they can't advertise others, and at the moment they've only validated it to work with the Ryzen 5000 + 500-series + RX 6000 combo. No other vendors had at the time communicated any plans to implement Resizable BAR support, so at the time of the press release it was exclusive to that combo.

> It was never meant to be an "exclusive solution", but they can't advertise others, and at the moment they've only validated it to work with the Ryzen 5000 + 500-series + RX 6000 combo. No other vendors had at the time communicated any plans to implement Resizable BAR support, so at the time of the press release it was exclusive to that combo.

If that's how you want to view how they advertised the feature, okay. There are definitely more neutral interpretations of what was said.

https://www.tomshardware.com/news/nvidia-amd-smart-access-memory-tech-ampere

> Nvidia says its hardware already supports the feature, though it will need to be enabled. Any PCIe-compliant CPU, be it either Intel or AMD, should also be able to use the tech with Nvidia's graphics cards.
>
> That seemingly takes the shine off of AMD's requirement of an AMD GPU, CPU, and high-end X570 motherboard, especially given that Nvidia plans to enable its competing (yet similar) functionality on all platforms - Intel, AMD, and PCIe 3.0 motherboards included.
>
> Nvidia says that its early testing shows similar performance gains to AMD's SAM and that it will enable the feature through future firmware updates. However, the company hasn't announced a timeline for the updates.
>
> It certainly feels like Nvidia is trying to steal AMD's thunder. If Nvidia's Ampere silicon experiences similar gains from the Smart Access Memory-like tech, it will definitely complicate matters for AMD's push to create a walled all-AMD gaming PC garden.

> If that's how you want to view how they advertised the feature, okay. There are definitely more neutral interpretations of what was said.
>
> https://www.tomshardware.com/news/nvidia-amd-smart-access-memory-tech-ampere

You're calling that a "more neutral interpretation"? One needs quite a stretch of imagination to think AMD is pushing for a "walled all-AMD gaming PC garden" by enabling a standard PCIe feature they've finally validated on a consumer card too.

It's not as if AMD had been silent about working towards enabling Resizable BAR support; hell, they implemented support for it in Linux. And of course they know that every other company knows about the feature, its benefits, etc. too. Why no one else did it before, who knows. Perhaps there's a reason why it has so far been validated on only one platform.

> You're calling that a "more neutral interpretation"? One needs quite a stretch of imagination to think AMD is pushing for a "walled all-AMD gaming PC garden" by enabling a standard PCIe feature they've finally validated on a consumer card too.

Yeah, it does take quite a stretch.

https://www.tweaktown.com/news/76238/nvidia-has-its-own-smart-access-memory-like-amds-new-rdna-2-coming/index.html

> AMD's new Smart Access Memory (SAM) requires a new RDNA 2-based Radeon RX 6000 series graphics card, mixed with the Zen 3-based Ryzen 5000 series processors and X570 motherboard. You can read all about AMD Smart Access Memory right here.

> That developers prioritize optimizing for their sponsors first?

That's not prioritization, that's crippling performance for 100% of high-end HW and 80% of discrete GPU users with an API where there are literally infinite ways to make things slow without any visible benefit, and without the opportunity to fix the broken stuff in the driver, since devs chose to care about the minority of their audience for whatever reason.

And it's certainly not the same with Nvidia. I don't see how slow tessellation, geometry processing and, it looks like, ray tracing now on AMD hardware are Nvidia's fault. Nobody blamed AMD for slow compute shaders in Dirt and other titles with Forward+ renderers due to shared memory atomics usage instead of prefix sum (which Nvidia suggested as an optimization for Kepler); it was the future-proof architecture of GCN.
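For anyone not familiar with the atomics-versus-prefix-sum distinction, here is a rough CPU-side sketch in Python. It is only an illustration of the two ways to compact a list (for example, the lights that pass a per-tile culling test in a Forward+ renderer); the function names and the sample data are made up, and none of this is taken from the actual shaders in Dirt or any other title.

```python
from itertools import accumulate


def compact_with_counter(flags, items):
    """Atomic-counter style: each kept item grabs the next slot from a shared counter."""
    out = [None] * sum(flags)
    counter = 0  # stands in for a shared-memory atomic on the GPU
    for keep, item in zip(flags, items):
        if keep:
            out[counter] = item  # on a GPU: slot = atomicAdd(counter, 1)
            counter += 1
    return out


def compact_with_prefix_sum(flags, items):
    """Prefix-sum style: an exclusive scan over the flags gives every item its slot up front."""
    counts = [int(f) for f in flags]
    inclusive = list(accumulate(counts))
    offsets = [0] + inclusive[:-1]  # exclusive scan
    out = [None] * (inclusive[-1] if inclusive else 0)
    for keep, item, offset in zip(flags, items, offsets):
        if keep:
            out[offset] = item  # no shared counter, so writes do not contend
    return out


# Hypothetical culling result: which of five lights touch the tile.
flags = [True, False, True, True, False]
lights = ["a", "b", "c", "d", "e"]
print(compact_with_counter(flags, lights))
print(compact_with_prefix_sum(flags, lights))
```

Sequential Python cannot show the contention itself. The point is only that with a scan every thread knows its output slot without touching a shared counter, whereas the counter version funnels all writers through one atomic, and how expensive that is depends on the architecture.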

Leoneazzurro5

Regular

Sorry to say, but you are simply saying -again- that if a developer optimizes for an architecture and that architecture is Nvidia, it's a legit optimization; if it's AMD, it's unfair competition. Nvidia has its history of "unfair" optimizations (here in this very same thread someone other than me reported such cases, and there are a lot more; and no, amplifying tessellation beyond any reasonable amount only to cripple the competitor's performance without any gain in image quality is NOT a fair optimization by any means). And again, we are not even speaking about optimizations for GCN: the vast majority of the Nvidia-sponsored titles I linked run better on Nvidia cards having lower specs than the comparable RDNA cards "without any reason", to use your words, and RDNA is by no means the same mess GCN is from the optimization point of view.

From RECENT techpowerup reviews:

Control RTX off

https://www.techpowerup.com/review/msi-geforce-rtx-3070-gaming-x-trio/10.html

Divinity Original Sin 2

https://www.techpowerup.com/review/msi-geforce-rtx-3070-gaming-x-trio/14.html

Gears 5 original PC release (and we saw this running quite well on RDNA2 consoles)

https://www.techpowerup.com/review/msi-geforce-rtx-3070-gaming-x-trio/18.html

Hitman2

https://www.techpowerup.com/review/msi-geforce-rtx-3070-gaming-x-trio/19.html

Metro Exodus RTX off

https://www.techpowerup.com/review/msi-geforce-rtx-3070-gaming-x-trio/20.html

Strange Brigade

https://www.techpowerup.com/review/msi-geforce-rtx-3070-gaming-x-trio/26.html

These are all Nvidia-sponsored titles where the 5700XT runs on par with or worse than a 2070, despite having better basic specs (and we are not even putting ray tracing on the scale here) and not having "any reason" to perform worse. There are also more games where the 5700XT performs significantly worse than the 2070 Super even though they have similar specs. Some of these don't have stunning visuals, either.

So in this case "it's all OK" but if something happens that reverses this perception "it's not fair". You seem desperate to imply that RDNA architecture is simply worse than Turing or even Pascal without any evidence of this.

Not chiming in on the fair-vs-unfair optimization discussion, but wondering: are those really all Nvidia-sponsored titles? I looked at some and they seem to perform in line with the general ratings on TPU and Computerbase, where the 2070S is 10-ish % faster than the 5700 XT.

Also, I was under the impression, AMD even bundled Strange Brigade with their cards (and at least some game out of the Hitman franchise, though I don't remember if it was Hitman 2 specifically). Would they do so with an Nvidia-sponsored title?

Leoneazzurro5

Regular

These are titles that Nvidia sponsored and that are even linked on their site. I don't know what exactly AMD bundled in the past, but recent bundles with AMD stuff included Rainbow Six: Siege and AC: Valhalla, with one of them not being in the Nvidia sponsorship list and the other being an AMD-sponsored title. I own several of the titles in the list and they all show the Nvidia advertising at start. About the ratings: these include exactly these titles, so they reflect the status of the optimization (and that's why I feel the claims of "not being fair to Nvidia" are ridiculous). A thing I don't understand is the difference between scores even in the same game taken from different sites, e.g. Techpowerup scores don't add up with recent Hardware Unboxed tests of the latest Navi10 drivers:

What I can think of is Techpowerup and Computerbase using an older WHQL driver (or older numbers) instead of the most recent one. For example, in the reviews I see 20.8.3 WHQL and 20.7.2, while at the moment 20.11.1 is the latest driver and 20.9.1 the latest WHQL on AMD's site, and frankly 20.9.1 has been there since September (before the Ampere launch).

Jawed

Legend

> What do you mean exactly? To my understanding, those are all effects used in real time. Or are you looking for split-millisecond figures broken down per frame?

In real time you have to compromise on the count of rays used. So for example reflections only cover a short distance from the camera (so you get pop-in as the camera moves) and reflections are blurry due to low sample count.

It's similar to how there's a limited count of filtered texels that you can fit into a frame (bandwidth and filtering throughput restrictions apply, as well as quality of latency hiding). Ray casts/bounces are notionally "budgeted" per frame, with additional problems to deal with like BVH build/update.

In using the "ray budget", you then have to decide which effects you want to use. At the same time you have to decide how much quality each effect gets. And how to make quality scalable (low, medium, high settings).

Something like GI, which shows the greatest count of rays in the image you posted, misses a typical real time rendering technique: temporal accumulation. e.g. 1 sample per 25 pixels per frame is enough, and over 5 or 15 frames that will provide a "high quality" result (using jittered sampling, say). That's why I compared ray traced GI with SVOGI in CryEngine, because there are games that already do high quality GI whilst not using hardware accelerated ray tracing.

So this tells us that while the ideal for GI requires a high ray count, in a real time game the ray count would be cut down substantially. And it still looks really excellent.
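As a back-of-the-envelope sketch of that arithmetic: the function names are hypothetical, the 2560x1440 resolution is just the one used in the benchmarks linked earlier in the thread, and the model ignores reprojection losses, disocclusion and denoising entirely.

```python
def gi_rays_per_frame(width: int, height: int, pixels_per_sample: int) -> int:
    """Rays cast per frame when one sample is shared by `pixels_per_sample` pixels."""
    return (width * height) // pixels_per_sample


def accumulated_samples_per_pixel(frames: int, pixels_per_sample: int) -> float:
    """Average samples each pixel has received after accumulating over `frames` frames."""
    return frames / pixels_per_sample


width, height = 2560, 1440
full_rate = gi_rays_per_frame(width, height, 1)      # one ray per pixel
sparse_rate = gi_rays_per_frame(width, height, 25)   # 1 sample per 25 pixels

print(f"1 ray per pixel:        {full_rate:,} rays/frame")
print(f"1 sample per 25 pixels: {sparse_rate:,} rays/frame")
for frames in (5, 15):
    spp = accumulated_samples_per_pixel(frames, 25)
    print(f"after {frames} frames: {spp:.1f} samples per pixel on average")
```

At 1440p that is 147,456 rays per frame instead of 3,686,400, and even after 15 accumulated frames the average pixel has seen less than one sample, which is why the jittered sampling and filtering matter so much for the final result.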

It seems that reflections have become the focus of ray tracing in most games because they're really easy to show in marketing the game. Though Duke Nukem might have something to say about the history of high quality reflections in games.

The shadows in Call of Duty are really nice and they required a lot of R&D to make them practical. It seems it wasn't as simple as they were hoping. Battlefield reflections have problems with pop-in and depend heavily upon screen space imposters. Watch Dogs: Legion also has problems with pop-in and uses screen space reflections a lot of the time.

What's unclear to me is how developers are currently assessing the ray-budget versus image quality question, when deciding how to use ray tracing in their games.

> These are titles that Nvidia sponsored and that are even linked on their site. I don't know what exactly AMD bundled in the past, but recent bundles with AMD stuff included Rainbow Six: Siege and AC: Valhalla, with one of them not being in the Nvidia sponsorship list and the other being an AMD-sponsored title. I own several of the titles in the list and they all show the Nvidia advertising at start. About the ratings: these include exactly these titles, so they reflect the status of the optimization (and that's why I feel the claims of "not being fair to Nvidia" are ridiculous). A thing I don't understand is the difference between scores even in the same game taken from different sites, e.g. Techpowerup scores don't add up with recent Hardware Unboxed tests of the latest Navi10 drivers:
>
> What I can think of is Techpowerup and Computerbase using an older WHQL driver (or older numbers) instead of the most recent one. For example, in the reviews I see 20.8.3 WHQL and 20.7.2, while at the moment 20.11.1 is the latest driver and 20.9.1 the latest WHQL on AMD's site, and frankly 20.9.1 has been there since September (before the Ampere launch).

Techpowerup doesn't always retest all older GPUs with new drivers. HUB always does. You also have to factor in the performance variances due to differing benchmark scenes.
