I have no idea why in the world anyone would have an agenda regarding 2012 GPUs and 2013 consoles. It's almost, if not fully, retro hardware by now. How many people are actually still on 2012 PC hardware?

If we look back at previous generations, say 6th gen and 7th gen, even top-of-the-line GPUs at the time those consoles released couldn't keep up for even a couple of years. With the 2013 ones, if you had, say, a 7950, R270, R280, or even a 7850/70, you could hang along for an entire generation without needing to upgrade to enjoy those games. (Yes, in some games you will have to lower settings on the 7870 cards that sported only 2GB.)

It goes without saying that it's impressive such old hardware can play those games at comparable visual quality.

Yes, you can start list wars, showing videos where even a 6GB 7970 performs very badly, but then I can find a video where it outmatches the PS4. The thing with PCs is that configurations differ and settings can vary wildly (in many games, the PS4 is actually closer to low than medium), etc. PS4 performance isn't that great anymore either, btw: many games dip well below 30 fps, and settings are often below medium. Even resolutions take hits.

Yes, 2012 GPUs like the 7950 don't have perfect performance, but neither does the PS4.

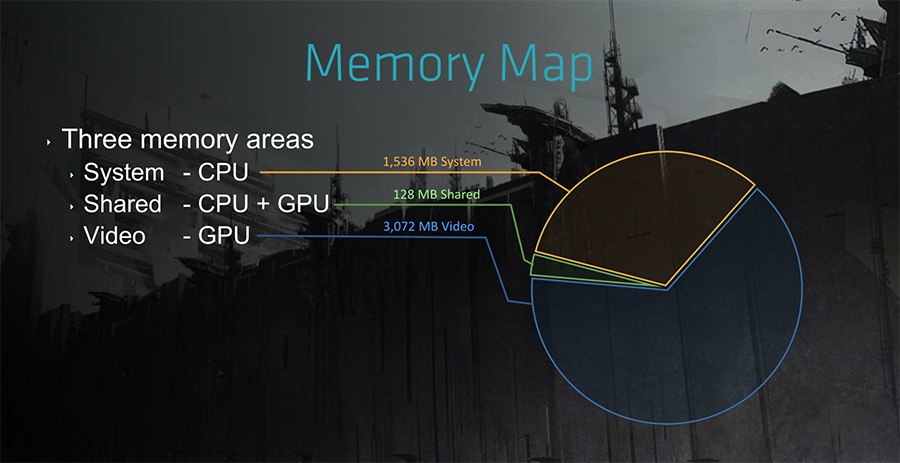

I meant for VRAM. The (base) PS4 is actually limited (in most games anyway) to about 3GB (±500MB) of VRAM. Most games don't even touch that. I have no source other than what a GG dev told me.