You would lose however many stream processors have INT instructions scheduled. AMD can issue any arbitrary mix of FP and INT instructions, as far as I understand it.

Per-work-item INT instructions on AMD entirely block FP instructions. There's a single SIMD that handles INT and FP for per-work-item calculations.

So the argument about "NVidia losing FP32 because of INT sharing" has a simple answer: "well, doh". Nothing new here.

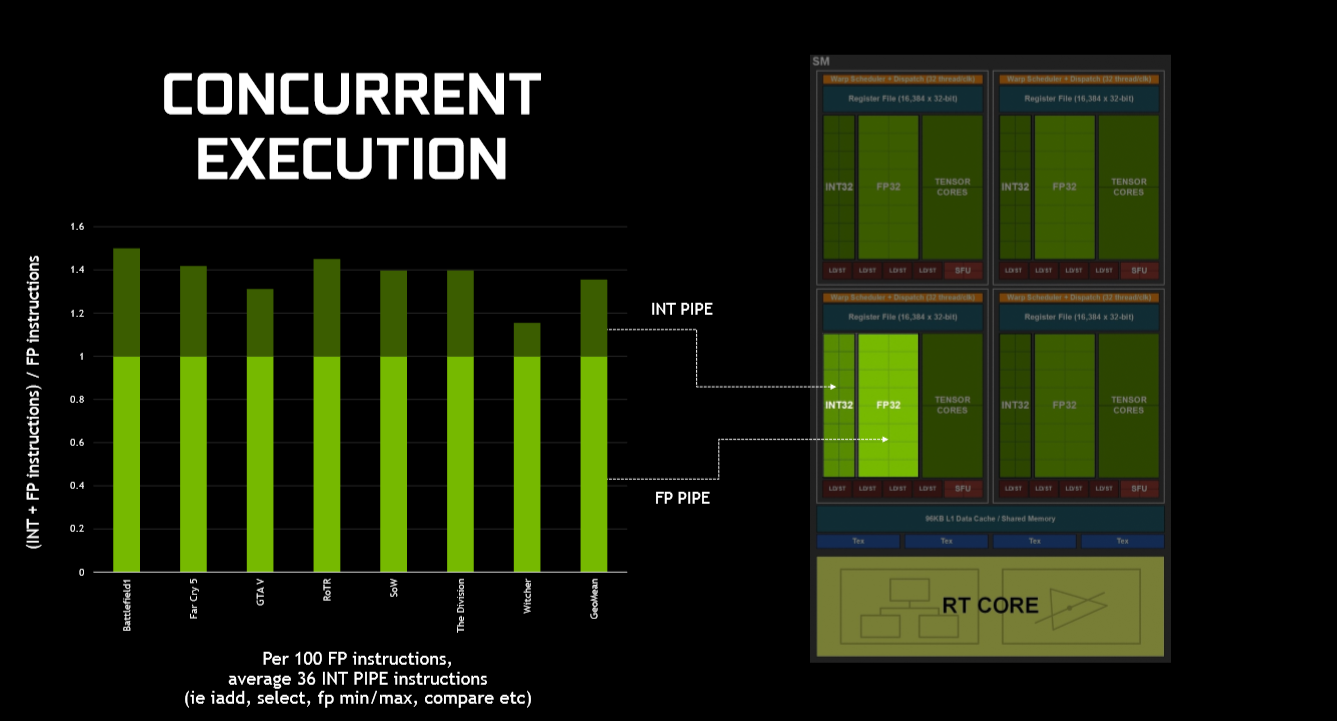

Ampere has a continuously available FP SIMD. The real trick is keeping data ready for it to use. Running INT on another SIMD helps keep data ready. And when INT is not required, there's a chance to get a burst of extra FP goodness.

I'm not sure you do.

You can issue two instructions per clock on two SIMD units at best. It's about as "arbitrary" as it can be.

Ampere can have 2 FP32 instructions or 1 FP32 + 1 INT per clock per unit in an SM (there are 4 of these units in an Ampere SM).

RDNA can have 2 FP32 or 1 FP32 + 1 INT or 2 INTs per clock per CU in a WGP (there are 2 CUs in an RDNA WGP).

On that basis, the only difference is that RDNA's peak INT throughput matches its peak FP32 throughput, whereas Ampere's peak INT throughput is only half its peak FP32. Otherwise they are the same.
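To make that counting argument concrete, here's a toy sketch in Python. It only models the two issue descriptions given just above (one FP-only slot plus one FP-or-INT slot, versus two slots that each take FP or INT) and ignores dependencies, register bandwidth, latency and everything else real hardware cares about. The function names are made up for illustration and don't correspond to any vendor tool or API.

```python
from math import ceil

def cycles_shared_int_slot(fp_ops: int, int_ops: int) -> int:
    """Clocks needed with two issue slots per clock:
    one FP-only, one that takes either FP or INT
    (the Ampere description above; an assumption of this toy model)."""
    total = fp_ops + int_ops
    # At most 2 instructions per clock overall, and at most 1 INT per clock.
    return max(ceil(total / 2), int_ops)

def cycles_two_flexible_slots(fp_ops: int, int_ops: int) -> int:
    """Clocks needed with two issue slots per clock, each taking FP or INT
    (the RDNA description above; an assumption of this toy model)."""
    return ceil((fp_ops + int_ops) / 2)

if __name__ == "__main__":
    # (FP count, INT count) mixes chosen arbitrarily for illustration.
    for fp_ops, int_ops in [(100, 0), (64, 36), (50, 50), (20, 80), (0, 100)]:
        shared = cycles_shared_int_slot(fp_ops, int_ops)
        flexible = cycles_two_flexible_slots(fp_ops, int_ops)
        print(f"FP={fp_ops:3d} INT={int_ops:3d}  "
              f"shared-slot clocks={shared:3d}  flexible clocks={flexible:3d}")
```

In this model the two schemes need the same number of clocks whenever INT makes up no more than half the mix, and only diverge when INT dominates, which is the "only difference is peak INT throughput" point.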

SMs and WGPs or CUs don't really compare cleanly.

It's best to forget about the CU (or WGP) level in RDNA. Each SIMD has its own instruction issue and all the SIMDs are INT/FP, i.e. "dual-action". The instruction issue to RDNA SIMDs is not controlled by the CU.

It's clearer to consider instruction-issue and register file. Ampere has dual instruction issue to two SIMDs. RDNA has single instruction issue to only one SIMD.

(Instruction issue for special functions, (TEX) data loads, and per-hardware-thread and branching evaluation all add a lot of complexity. They do affect the progress of work on the SIMDs, but they aren't directly relevant to an INT versus FP throughput discussion.)

Ampere's theoretical FP throughput is far, far higher than RDNA2's will be. Ensuring that there's work for the SIMDs to do is looking more and more like the central problem. Complex, math-intensive shaders are only getting more common, but render-pass count in games is increasing and that hurts SIMD utilisation.