Nvidia Ampere Discussion [2020-05-14]

- Thread starter Man from Atlantis

- Tags

- nvidia

Jawed

Legend

Has it? Looking at CB.de results, it's +53%/+33% over the 2080S/2080Ti at 4K, which is lower than the average gain over the 2080S (+60%) and about the same as the average gain over the 2080Ti (+31%) for Ampere over Turing.

Am I missing something? HZD scaling seems to be worse than average here, which again points in the direction of it being badly optimized for NV h/w in the first place, and it seemingly is even worse at utilizing Ampere over Turing.

Sorry, I was referring to the Techspot results that trinibwoy linked:

Horizon Zero Dawn shows the most scaling of all the games they tested at both 1440p and 4K, comparing the 3080 and 2080Ti.

Techspot spoke at length about the lack of scaling at 1440p. Their theory is that Ampere is too flops-heavy and only gets to spread its wings at 4K. In turn, they seem to think this means Ampere isn't a "gaming focused architecture".

It'll be interesting to see how close the 3070 gets with its 6 GPCs at 1440p.
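The CB.de comparison in this exchange boils down to dividing each HZD speedup by the matching average speedup. A minimal sketch using only the percentages quoted from CB.de (3080 vs 2080S/2080Ti at 4K); the helper names are illustrative, not from any tool:

```python
def speedup(gain_percent: float) -> float:
    """Convert a '+N%' gain into a speedup ratio (e.g. +53% -> 1.53)."""
    return 1.0 + gain_percent / 100.0


def relative_scaling(game_gain: float, average_gain: float) -> float:
    """Ratio > 1: the game scales better than average; < 1: worse than average."""
    return speedup(game_gain) / speedup(average_gain)


# 4K figures quoted from CB.de: card -> (HZD gain %, average gain %) for the RTX 3080
cb_de_4k = {
    "2080S": (53.0, 60.0),
    "2080Ti": (33.0, 31.0),
}

for card, (hzd, avg) in cb_de_4k.items():
    ratio = relative_scaling(hzd, avg)
    print(f"3080 vs {card}: HZD uplift is {ratio:.3f}x the average uplift")
```

Comparing ratios of speedups rather than subtracting percentage points keeps the two baselines (2080S and 2080Ti) on the same footing.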

Performance is pretty variable depending on the scene. More so than in a typical open world game.

The 1.9x claim explained ...

September 16, 2020

No, we are talking energy efficiency.

arandomguy

Regular

Does it? I skimmed through this yesterday but can't say that I remember FP16 being mentioned there at all.

Maybe not; I'm not completely sure that's the case. I based it on the assumption that they used it in id Tech 6 and presumed they'd carry the same techniques over to id Tech 7 with Doom Eternal.

I do remember doing some brief searches on this, but it was just third-party sources saying it was in there, which is why I qualified it with a tentative "I believe."

I remember id Software games (Doom and Prey maybe?) and Far Cry 5.

It's extremely hard for AMD to push any type of new technology into the PC market. nVidia doesn't just have over 80% of the discrete GPU market; its infiltration into dev teams is also something AMD doesn't have and can't replicate.

I'm not entirely sure this would be applicable in this situation. Nvidia had 2xFP16 over FP32 with Turing, and it wasn't until Navi that AMD had broader 2xFP16 support (previously only Vega).

Also, it might be used more than we think? At least, I don't know if this is something you'd go out of your way to advertise and market as an optimization. Aren't some newer games scaling better on Turing vs Pascal? It could be that they're exploiting more of Turing's advantages, one of which could be 2xFP16.
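Double-rate FP16 ("2xFP16", often called packed math) relies on holding two 16-bit values in one 32-bit register and operating on both lanes with a single instruction. The sketch below only emulates that lane layout on the CPU using Python's `struct` half-precision format (`'e'`); the helper names are hypothetical and this is not how any GPU ISA is actually programmed:

```python
import struct


def pack_half2(lo: float, hi: float) -> int:
    """Pack two FP16 values into one 32-bit word (lo in bits 0-15, hi in bits 16-31)."""
    return struct.unpack("<I", struct.pack("<2e", lo, hi))[0]


def unpack_half2(word: int) -> tuple:
    """Split a 32-bit word back into its two FP16 lanes."""
    return struct.unpack("<2e", struct.pack("<I", word))


def packed_add(a: int, b: int) -> int:
    """Emulate one packed FP16 add: both lanes are handled by a single 'instruction'.
    The double-precision sum of two FP16 values is exact, so repacking rounds once,
    matching an IEEE FP16 add per lane."""
    a_lo, a_hi = unpack_half2(a)
    b_lo, b_hi = unpack_half2(b)
    return pack_half2(a_lo + b_lo, a_hi + b_hi)


a = pack_half2(1.5, 2.0)
b = pack_half2(0.25, -1.0)
print(unpack_half2(packed_add(a, b)))  # (1.75, 1.0)
```

The point of the layout is throughput: one 32-bit ALU operation yields two FP16 results, which is where the "2x" comes from when the hardware supports it.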

arandomguy

Regular

So it's finally official and I can say: RTX 3070 will be 6 GPC/96 ROPs. This was left out of the virtual techday presentations.

I wonder if GA104 will perform relatively better in some workloads such as rendering due to the GPC count which is something we saw with Turing.

Also, in the white paper it says "GA104 retains most of the key new features that were added to NVIDIA’s GA10x Ampere GPU Architecture and ships with GDDR6 memory." I wonder what they're referring to that differs between GA102 and GA104? I don't think it's specifically mentioned anywhere.

DegustatoR

Veteran

28B xtors GA102 is close to 13.5B TU104 in size, so I'm not sure what you mean.

It looks like the die sizes didn't drop a lot compared to Turing. I guess because Samsung 8nm is more like a version of 10nm?

From a quick comparison it seems like Ampere has a higher transistor density than RDNA1 btw.


GA104 has no NVLink, AFAIK. I don't know whether that's a key feature, though, since they're already disabling it for the RTX 3080.

edit: Found a Facebook post from Nvidia's Nick Stam, who answered exactly this question from Klaus of Notebookcheck. Attached for your reference.


Perhaps just the fact that it has no GDDR6X, and even that is more than likely supported.

NVIDIA hints that an RTX 3080 with a different memory layout could come out later.

https://videocardz.com/newz/nvidia-teases-geforce-rtx-3080-with-20gb-memory

It is no secret that NVIDIA is also preparing a 20GB model of the GeForce RTX 3080 graphics card. It has been speculated for weeks now and we had no problems confirming this information with multiple sources. The internal roadmaps clearly state PG132 SKU 20 board design (RTX 3080 20GB) but the launch date has not yet been set.

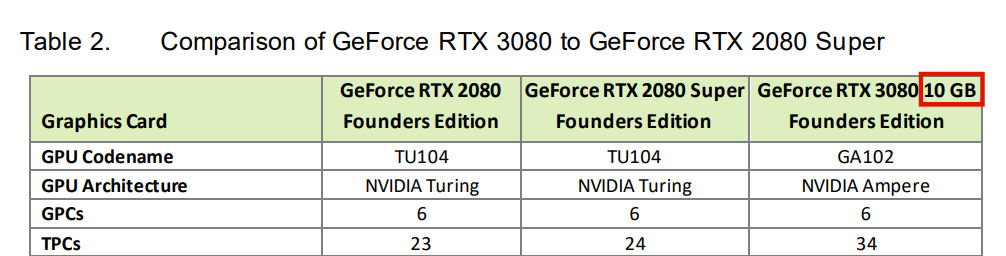

The latest development in the RTX 3080 20GB news is the just-released document by NVIDIA. Yesterday NVIDIA released the 8nm Ampere (GA102) whitepaper, where the RTX 3080 is listed as ‘GeForce RTX 3080 10GB’. Nowhere else in the document does NVIDIA list the memory configuration with the SKU name, clearly indicating that there are more versions.



Associated with GDDR6X, there is a new feature that GDDR6 misses out on.

Error Detection and Replay (EDR); see the end of the white paper.

If a memory data transfer is detected to have an error, the transfer is retried until there is no error.

The effect, as described in some of the reviews, can be observed when overclocking memory.

Due to the retries, at some point, overclocking starts to decrease memory bandwidth instead of increasing it.
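A toy model of why replay can make memory overclocking counterproductive. It assumes, hypothetically, that each transfer fails independently with probability p and is replayed until it succeeds; the expected number of attempts is then 1 / (1 - p), so useful bandwidth is raw bandwidth x (1 - p). The 19 Gbps / 320-bit baseline is the RTX 3080's stock memory configuration (19 x 320 / 8 = 760 GB/s), but `error_rate` and its constants are invented purely for illustration and are not measured GDDR6X behaviour:

```python
import math

BUS_WIDTH_BITS = 320  # RTX 3080 memory bus width


def raw_bandwidth_gbs(data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: per-pin data rate (Gbit/s) * bus width / 8 bits per byte."""
    return data_rate_gbps * BUS_WIDTH_BITS / 8.0


def error_rate(data_rate_gbps: float) -> float:
    """HYPOTHETICAL per-transfer error probability: a logistic curve centred on 21 Gbps.
    Chosen only to illustrate the shape of the effect; not real data."""
    return 1.0 / (1.0 + math.exp(-(data_rate_gbps - 21.0) * 4.0))


def effective_bandwidth_gbs(data_rate_gbps: float) -> float:
    """Replay-until-success with independent failures costs 1 / (1 - p) attempts
    per transfer on average, so useful bandwidth is raw * (1 - p)."""
    p = error_rate(data_rate_gbps)
    return raw_bandwidth_gbs(data_rate_gbps) * (1.0 - p)


for rate in [19.0, 19.5, 20.0, 20.5, 21.0, 21.5, 22.0]:
    print(f"{rate:4.1f} Gbps: raw {raw_bandwidth_gbs(rate):6.1f} GB/s, "
          f"effective {effective_bandwidth_gbs(rate):6.1f} GB/s")
```

With these made-up constants, effective bandwidth peaks around 20 Gbps and then collapses even though raw bandwidth keeps rising, which is the qualitative pattern the reviews describe.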



Let's check the numbers. GA102's die size is 628 mm² and it has 28 billion transistors. This leads to 44.6M transistors per mm². TU102 was 754 mm² with 18.6B transistors, leading to 24.7M transistors per mm² (TSMC 12nm, aka enhanced 16nm?).

GA100 on TSMC 7nm is 826 mm² with 54B transistors, leading to 65.4M transistors per mm².

Navi 10 on TSMC 7nm is 251 mm² with 10.3B transistors, leading to 41M transistors per mm².

Samsung 8nm doesn't look that bad density-wise. The usual caveats apply: manufacturers don't say how they count transistors, cache and logic have different densities, etc. The numbers above should give a good ballpark.
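The density figures above are simply transistor count divided by die area. A minimal sketch reproducing them from the quoted counts and areas; as the post says, differing transistor-counting methods and cache/logic mix make this a ballpark only:

```python
# (die, transistors in billions, die area in mm^2), as quoted in the post
DIES = [
    ("GA102 (Samsung 8nm)", 28.0, 628.0),
    ("TU102 (TSMC 12nm)", 18.6, 754.0),
    ("GA100 (TSMC 7nm)", 54.0, 826.0),
    ("Navi 10 (TSMC 7nm)", 10.3, 251.0),
]


def density_mtr_per_mm2(transistors_billion: float, area_mm2: float) -> float:
    """Million transistors per mm^2 = (billions * 1000) / area."""
    return transistors_billion * 1000.0 / area_mm2


for name, xtors, area in DIES:
    print(f"{name:22s} {density_mtr_per_mm2(xtors, area):5.1f} MTr/mm^2")
```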

The Ampere sales launch DDoSed the Newegg web page exactly at launch time. I suppose there are a couple of people trying to buy a 3080.

Best Buy's search feature broke temporarily but is now working. I bet it was also due to 3080 purchasers.

edit: Scrap that, Best Buy search is broken again. I guess people keep searching again and again to see which model is in stock.


DegustatoR

Veteran

I honestly don't get this. There are like zero games that need anything like a 3080 coming until the end of October. Why are these people in such a hurry?


During the Turing launch it was months and months before supply met demand. If you order in October, you don't know whether you'll get the card in October or January.

edit: Newegg pages work now, but the cards are gone. Everything is out of stock.

Jawed

Legend

Turing was 16nm relabelled as 12nm, versus Ampere 10nm relabelled as 8nm.

28nm was about the last time that it was possible to look at the node's "gate length number" and determine meaningful scaling comparisons against older nodes.

You will probably have to go back about a decade to find a time when transistor densities for AMD and NVidia GPUs on the same node were actually meaningfully comparable. So you can't bring AMD into this.

Rootax

Veteran


It's the simple pleasure of having your new shiny toy? And maybe some people have been waiting for quite some time now with an older GPU, so now they want to enjoy their new games at max fidelity, etc.

DavidGraham

Veteran

More like a CPU bottleneck. Current CPUs can no longer feed such monsters at 1080p or 1440p. It will be way worse for the 3090.
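A simplified sketch of the CPU-bottleneck argument, assuming CPU and GPU work is pipelined so that frame time is set by whichever stage is slower per frame. Every millisecond figure here is hypothetical, picked only to show how a faster GPU's advantage shrinks at lower resolutions once the CPU becomes the limit:

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Pipelined CPU/GPU: throughput is limited by the slower stage per frame."""
    return 1000.0 / max(cpu_ms, gpu_ms)


# HYPOTHETICAL per-frame costs in milliseconds (not benchmark data)
CPU_MS = 7.0  # game/driver work, assumed roughly independent of resolution
GPU_MS = {
    # resolution: (older GPU, faster GPU); the faster GPU is assumed 1.5x quicker
    "1440p": (9.0, 6.0),
    "4K": (18.0, 12.0),
}

for res, (old_gpu, new_gpu) in GPU_MS.items():
    uplift = fps(CPU_MS, new_gpu) / fps(CPU_MS, old_gpu)
    print(f"{res}: observed uplift {uplift:.2f}x "
          f"(GPU alone would give {old_gpu / new_gpu:.2f}x)")
```

Under these assumptions the faster GPU shows its full 1.5x at 4K but only about 1.29x at 1440p, because at 1440p it finishes each frame before the CPU does.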

Jawed

Legend

Is this why they're called zoomers?
