Man from Atlantis

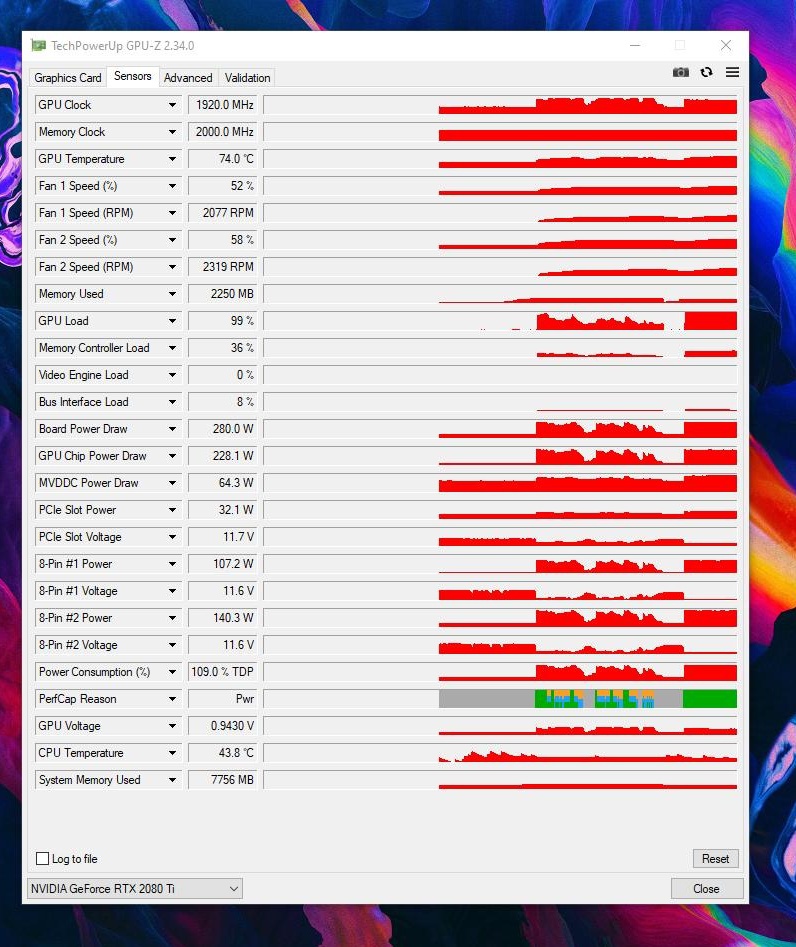

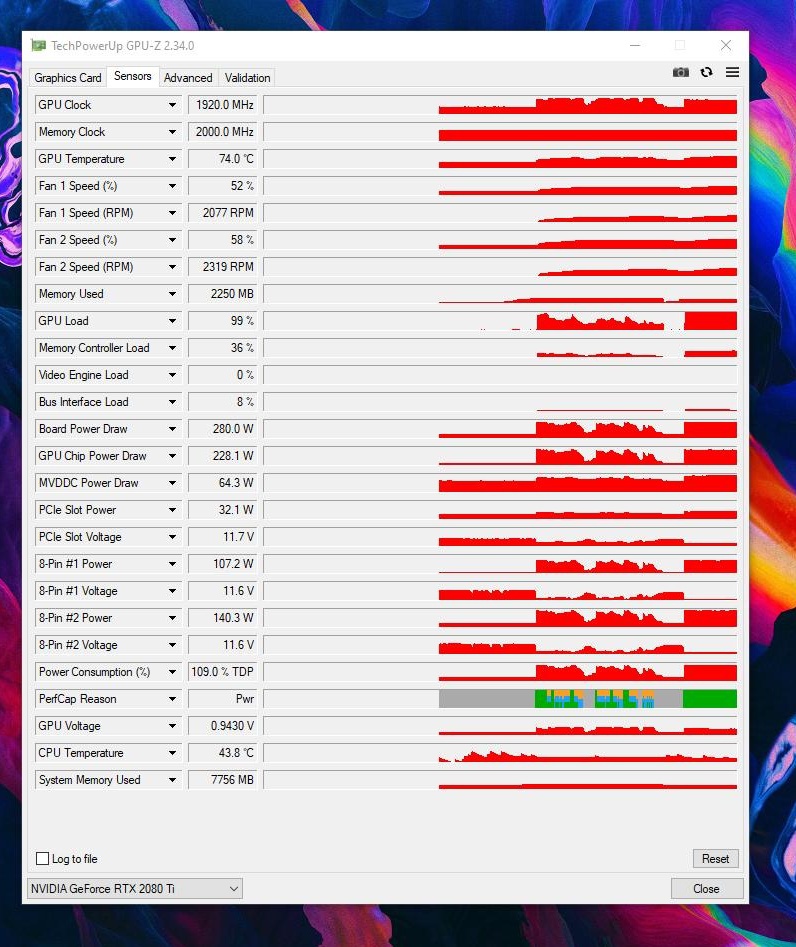

2080 Ti, power limit @ 109%

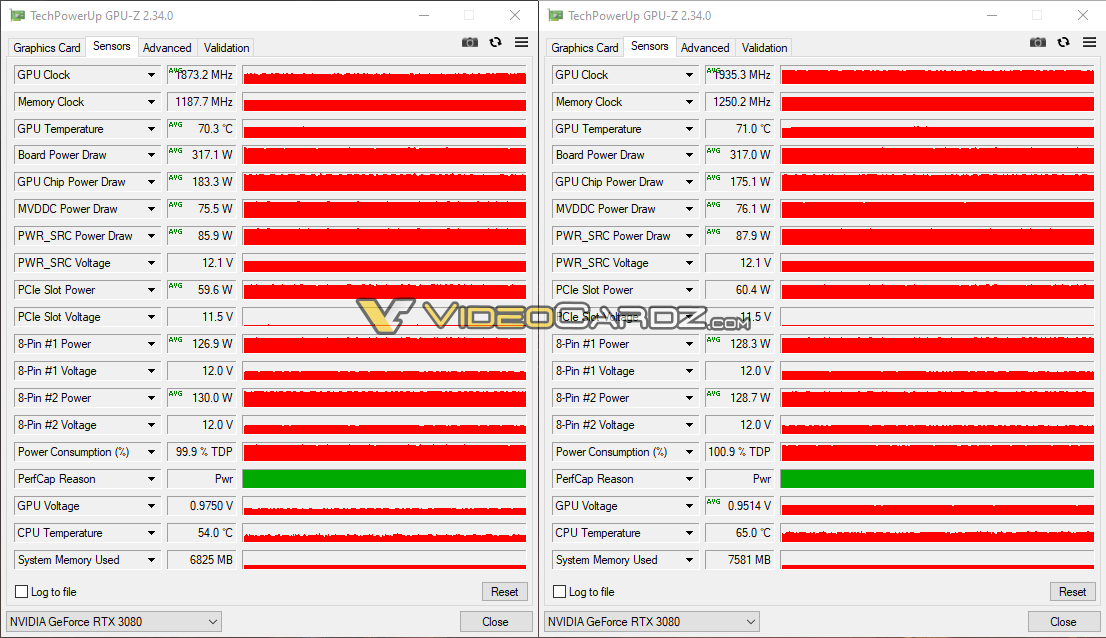

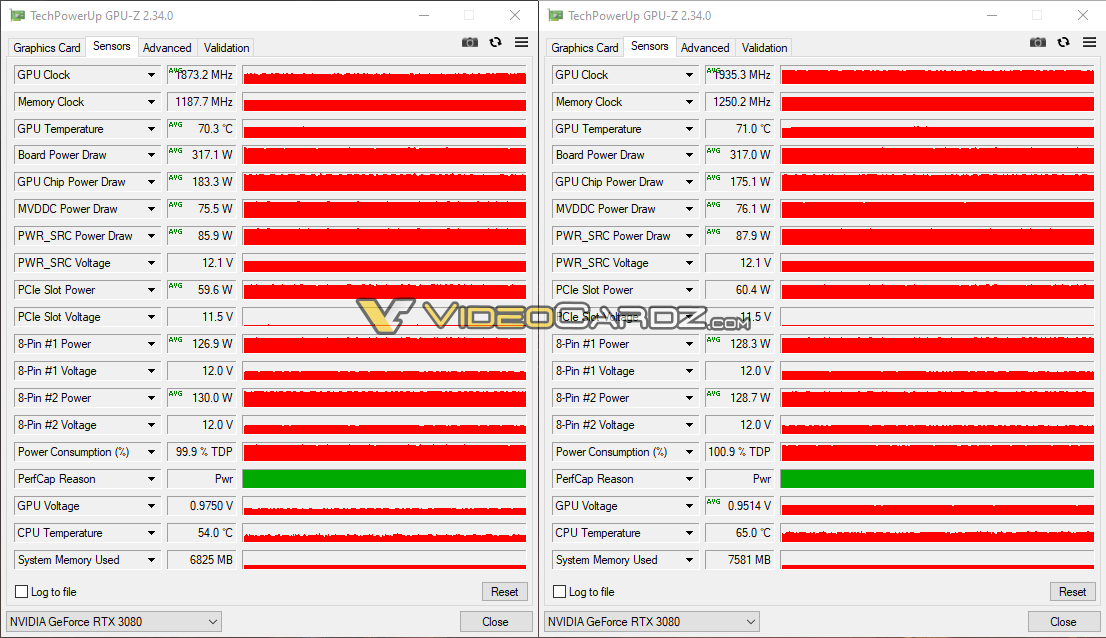

3080

3080

How are we supposed to interpret those numbers? Chip power draw on the 3080 is lower when overclocked? That can’t be right.

The GPU was only overclocked by 70 MHz and memory by 850 MHz.

https://www.hardwareluxx.de/index.p...d-replay-vereinfacht-gddr6x-overclocking.html

With the GDDR6(X) memory controller, NVIDIA is introducing a new technology called Error Detection and Replay (EDR). It plays an important role, especially when overclocking.

The GDDR6X memory on the GeForce RTX 3080 Founders Edition runs at a clock of 1,188 MHz. It is not yet known how far it can be overclocked. However, Error Detection and Replay is intended to make memory overclocking easier: errors in memory transfers are detected (error detection) and the data is retransmitted until it arrives correctly (replay). Instead of artifacts appearing, the transfer errors are detected and the memory controller tries to compensate for them. The mechanism is a cyclic redundancy check (CRC), a method that computes a check value over the data. If the check value does not match, the transmission has failed.

...

The GeForce RTX 3080 and GeForce RTX 3090 use GDDR6X memory. The GeForce RTX 3070, on the other hand, does not; it is built on the GA104 GPU. It is currently unknown whether the GeForce RTX 3070 will also support Error Detection and Replay.
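To make the detect-and-replay idea from the quoted article concrete, here is a minimal sketch, assuming a CRC-8 with polynomial 0x07 (x^8 + x^2 + x + 1) computed over each burst. The article does not give the polynomial, burst size, or retry policy GDDR6X actually uses, so `crc8`, `noisy_link`, and `send_with_replay` are hypothetical illustrations, not NVIDIA's implementation. For simplicity the check value itself is assumed to arrive intact.

```python
import random

# Assumption: CRC-8 with polynomial x^8 + x^2 + x + 1 (0x07). The article does not
# state the polynomial or burst size GDDR6X actually uses.
CRC8_POLY = 0x07


def crc8(data: bytes) -> int:
    """Bitwise CRC-8, MSB first, initial value 0x00, no final XOR."""
    crc = 0
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ CRC8_POLY) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc


def noisy_link(data: bytes, bit_error_rate: float, rng: random.Random) -> bytes:
    """Hypothetical bus that flips each bit independently with probability bit_error_rate."""
    out = bytearray(data)
    for i in range(len(out)):
        for bit in range(8):
            if rng.random() < bit_error_rate:
                out[i] ^= 1 << bit
    return bytes(out)


def send_with_replay(burst: bytes, bit_error_rate: float, rng: random.Random,
                     max_tries: int = 100) -> tuple[bytes, int]:
    """Send a burst, check it against the sender's CRC, and replay it on a mismatch.

    Simplification: the check value itself is assumed to arrive intact.
    Returns the accepted data and the number of attempts it took.
    """
    check = crc8(burst)  # check value computed on the sending side
    for attempt in range(1, max_tries + 1):
        received = noisy_link(burst, bit_error_rate, rng)
        if crc8(received) == check:  # receiver recomputes and compares
            return received, attempt
        # mismatch: discard the corrupted copy and transmit again (replay)
    raise RuntimeError("replay budget exhausted; link is too unreliable")


if __name__ == "__main__":
    rng = random.Random(42)
    burst = bytes(range(32))  # one hypothetical 32-byte burst
    for ber in (0.0, 1e-3, 5e-3):
        _, tries = send_with_replay(burst, ber, rng)
        print(f"bit error rate {ber:g}: accepted after {tries} attempt(s)")
```

In this sketch, a higher `bit_error_rate` shows up as more attempts per burst (time spent replaying) rather than as corrupted data being used, apart from the rare corruption a CRC-8 cannot detect.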

A big memory OC paired with a small GPU OC, on a total board power that seems to be capped at 317 W in both cases. It makes sense to me that the memory is taking power away from the GPU here.
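The reasoning is a fixed budget: if total board power is capped, whatever extra the memory draws comes out of what the GPU chip can use. A minimal sketch of that arithmetic; only the 317 W cap comes from the screenshots, while the memory and "other" figures and the function name are hypothetical placeholders, not readings.

```python
BOARD_POWER_LIMIT_W = 317.0  # apparent total board power cap in both screenshots


def chip_power_budget(board_limit_w: float, memory_w: float, other_w: float) -> float:
    """Power left for the GPU chip once memory and other board consumers are paid for."""
    remaining = board_limit_w - memory_w - other_w
    if remaining < 0:
        raise ValueError("memory and other loads already exceed the board limit")
    return remaining


# Hypothetical values: a memory overclock that raises memory power by 10 W
stock = chip_power_budget(BOARD_POWER_LIMIT_W, memory_w=60.0, other_w=40.0)
mem_oc = chip_power_budget(BOARD_POWER_LIMIT_W, memory_w=70.0, other_w=40.0)
print(f"chip budget: {stock:.0f} W stock vs {mem_oc:.0f} W with memory OC")
```

Under a hard cap, every watt added on the memory side is a watt the chip has to give up, whatever the actual split turns out to be.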

Yeah, but average GPU chip power is lower while clocks are higher in the second picture. Something is off there.

It is lower, likely because the memory is drawing more and the board is power-limited. We don't know the GPU load in each case, and I don't even mean the 0 to 100% load metric that GPU-Z typically shows (though it's missing there); I mean the actual load across all of the GPU's units.

Ampere Details: Error Detection and Replay Simplifies GDDR6X Overclocking

September 13, 2020

https://www.hardwareluxx.de/index.p...d-replay-vereinfacht-gddr6x-overclocking.html

Any idea how this differs from the error detection and replay that has been present since the HD 5000 series?

https://www.anandtech.com/show/2841/12

So: 0.975 V @ 1873 MHz by default, and 0.95 V @ 1935 MHz after slight undervolting...

https://cdn.videocardz.com/1/2020/09/NVIDIA-GeForce-RTX-3080-Memory-OC-Test.png

0.9514 V is an average voltage over a longer monitoring period (the small green text says "avg"). 0.975 V is a single reading (no green text). They are not comparable.
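The distinction matters because core voltage moves with load and temperature during a run: a single reading captures one instant, while an average smooths over the whole log. A tiny sketch with made-up samples (not the values from either screenshot) showing how the two differ even for one card at one setting.

```python
from statistics import mean

# Hypothetical core-voltage samples (volts) logged over a run; NOT the screenshot values
samples_v = [0.981, 0.968, 0.956, 0.944, 0.937]

current_reading_v = samples_v[0]     # a single instantaneous reading
average_reading_v = mean(samples_v)  # average over the whole logging period

print(f"single: {current_reading_v:.4f} V, average: {average_reading_v:.4f} V")
```

Comparing a single reading from one screenshot against an average from another mixes the two kinds of value, so a gap between them says little about undervolting by itself.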

Do Nvidia GPUs lower clocks when they hit the power limit? I've never actually had a GPU hit the power limit before.

Yes, they move to a lower point on the voltage-frequency curve.
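A rough sketch of that behaviour, assuming the common CMOS dynamic-power approximation P ≈ k·V²·f and a made-up curve: the GPU settles on the highest point whose estimated power fits under the limit, so a heavier load (larger k) pushes it down to a lower voltage and clock. `VF_CURVE`, `K_LOAD`, and the function names are hypothetical; real boost algorithms also account for temperature, leakage, and other limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VFPoint:
    volts: float
    mhz: int


# Hypothetical voltage/frequency curve, lowest to highest point (not a real card's table)
VF_CURVE = [
    VFPoint(0.800, 1600),
    VFPoint(0.850, 1700),
    VFPoint(0.900, 1800),
    VFPoint(0.950, 1900),
    VFPoint(1.000, 1980),
]

# Assumed load-dependent constant for dynamic power, P ~ k * V^2 * f (W per V^2 per MHz)
K_LOAD = 0.12


def estimated_power_w(point: VFPoint, k: float = K_LOAD) -> float:
    """Rough dynamic-power estimate; ignores leakage and temperature."""
    return k * point.volts ** 2 * point.mhz


def highest_point_under_limit(curve: list[VFPoint], power_limit_w: float,
                              k: float = K_LOAD) -> VFPoint:
    """Walk the curve from the top down and return the first point that fits the limit."""
    for point in reversed(curve):
        if estimated_power_w(point, k) <= power_limit_w:
            return point
    return curve[0]  # clamp to the lowest point if nothing fits


if __name__ == "__main__":
    for k in (0.12, 0.14):  # light vs heavier hypothetical load
        p = highest_point_under_limit(VF_CURVE, 220.0, k)
        print(f"k={k}: {p.volts:.3f} V @ {p.mhz} MHz under a 220 W limit")
```

With the same limit, raising `k` moves the chosen point from 0.95 V @ 1900 MHz down to 0.90 V @ 1800 MHz, which is the "lower point on the curve" described above.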

I don't really know how the boosting behaviour is supposed to work, but I've heard some people say Nvidia GPUs will boost above the listed boost clock.

All NV GPUs have done this since Maxwell. Clocks that stay at the listed boost are mostly seen on low-end models with insufficient cooling; the rest normally run 100 to 200 MHz above it.

Yeah, the boost clock of a standard RTX 2080 Ti as listed by NV is about 1545 MHz, yet even their stock model under extreme load will be in the 1800s. Partner cards easily reach the 1990 to 2000+ MHz range.
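Putting the post's own numbers side by side, the gap to the listed boost clock is simple subtraction. The function name is hypothetical, and 1800 and 1990 MHz are just the lower ends of the ranges quoted in the post, not measurements.

```python
LISTED_BOOST_MHZ = 1545  # reference RTX 2080 Ti boost clock as quoted in the post


def offset_over_listed(observed_mhz: int, listed_mhz: int = LISTED_BOOST_MHZ) -> int:
    """Return how many MHz an observed sustained clock sits above the listed boost clock."""
    return observed_mhz - listed_mhz


# Lower ends of the ranges mentioned in the post ("the 1800s", "1990-2000+")
for label, observed in (("stock model, heavy load", 1800), ("partner card", 1990)):
    print(f"{label}: +{offset_over_listed(observed)} MHz over listed boost")
```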