Nvidia Ampere Discussion [2020-05-14]

- Thread starter Man from Atlantis

- Tags

- nvidia

Rurouni

Veteran

Fun fact: The "8" in 3080 is upside down (verified on actual card, too)

8 is correct. The other numbers and letters are upside down XD

View attachment 4560

Tbf, some fonts do write 8 like that, but on 2080 it is a normal 8.

Flops vs everything else (bandwidth, geometry, RT)

Ampere is an evolutionary architecture over Turing. Such architectures usually concentrate on perf/mm and perf/watt improvements.

If something was included in Ampere, it likely boosts perf per area and per watt. Does it matter whether it's balanced or not?

IMO it doesn't. If 2x FP32 improves perf per mm, add it. People couldn't care less about the "balanced" metric; they care about perf per $, which is a derivative of perf/area and perf/watt.

miners are eyeing the new Ampere cards

https://www.hardwaretimes.com/ether...x-3080-offers-3-4x-better-performance-in-eth/

Rootax

Veteran

miners are eyeing the new Ampere cards

https://www.hardwaretimes.com/ether...x-3080-offers-3-4x-better-performance-in-eth/

Same link 8 posts above ; )

Flops vs everything else (bandwidth, geometry, RT)

It's more Flops because the trend in graphics is "moar compute": for a great many things, there's a more or less costly version available via compute shader, from micropolygons to post-FX.

Kepler: hold my underfed 192 FMA lanes...

Kepler was bad in that regard, yes. But here, I think it's a sensible choice, even though Gaming-Ampere probably cannot keep all its units busy at the same time. But then, its power draw is high enough as it is, judging from TDP numbers.

Soon you'll look at that $1500 price tag as being a bargain compared to the $2400 or higher... Le Sigh.

For real, geez.

Tarkin1977

Newcomer

Here we go again ...

Ethereum Miners Eye NVIDIA’s RTX 30 Series GPU as RTX 3080 Offers 3-4x Better Performance in Eth

https://www.hardwaretimes.com/ether...x-3080-offers-3-4x-better-performance-in-eth/

How stupid are those miners? That's only about 10% better than a Radeon VII!

It's more Flops because the trend in graphics is "moar compute": For a great many things, there's a more or less costly version available via compute shader. From micropolygons to post-FX.

Kepler was bad in that regard, yes. But here, I think it's a sensible choice, even though Gaming-Ampere probably cannot keep all its units busy at the same time. But then, its power draw is high enough as it is, judging from TDP numbers.

Indeed. Ampere looks to be an excellent compute GPU for a lot of things and a fairly great gaming card too. I'm looking at 3D rendering first for my use (Octane, V-Ray, maybe Redshift, all CUDA) and some other OpenCL compute, so I'm beyond tempted.

Edit: as the Octane guys are saying: "Yes, we have Octane 2020.1.5 (our next) fully optimized for 3090/Ampere and the results are pretty crazy. I can’t share more until NVIDIA shares the OB scores themselves or the cards are public."

Ampere is an evolutionary architecture over Turing. Such architectures usually concentrate on perf/mm and perf/watt improvements.

If something was included in Ampere, it likely boosts perf per area and per watt. Does it matter whether it's balanced or not?

IMO it doesn't. If 2x FP32 improves perf per mm, add it. People couldn't care less about the "balanced" metric; they care about perf per $, which is a derivative of perf/area and perf/watt.

Perf/area maybe in this case. It’s interesting that Nvidia didn’t showcase specific workloads or games that benefit from the change. They had a slide or 3 during the Turing launch showcasing the speed up from the separate INT pipeline.

Strangely, Nvidia didn’t talk about raw shader flops much at all. You would think the first consumer GPUs to break 20 and 30 Tflops would be a big deal from a marketing standpoint. That leads me to believe that even Nvidia doesn’t think the inflated numbers are worth talking about.
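For reference, the headline figures fall out of the usual peak-throughput arithmetic: FP32 lanes x 2 FLOPs per FMA x boost clock. A quick sketch, assuming Nvidia's published core counts and boost clocks for the three launch cards (those spec numbers are not from this thread, and the helper name is made up for illustration):

```python
# Peak FP32 throughput = lanes * 2 FLOPs (one fused multiply-add) * clock.
# Core counts and boost clocks below are assumed from Nvidia's published
# launch specs; they do not appear in the thread itself.
LAUNCH_SPECS = {
    "RTX 3070": (5888, 1.725),   # (FP32 lanes, boost clock in GHz)
    "RTX 3080": (8704, 1.710),
    "RTX 3090": (10496, 1.695),
}

def peak_tflops(fp32_lanes: int, boost_ghz: float) -> float:
    """Theoretical peak in TFLOPS, assuming every lane retires an FMA each clock."""
    return fp32_lanes * 2 * boost_ghz * 1e9 / 1e12

if __name__ == "__main__":
    for card, (lanes, clock) in LAUNCH_SPECS.items():
        print(f"{card}: {peak_tflops(lanes, clock):.1f} TFLOPS peak FP32")
```

This is a ceiling, not a measurement: it only holds if every lane is fed an FMA every clock, which is exactly what the "inflated" complaint is about.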

Man from Atlantis

Veteran

LiXiangyang

Newcomer

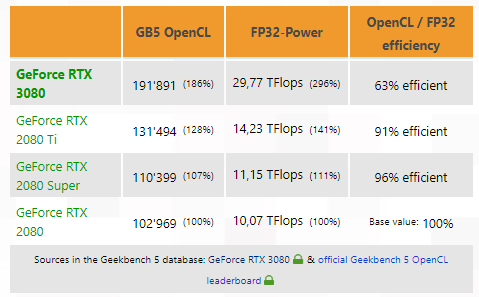

The benchmark may not be able to take advantage of GA102's new FP32 unit if it has not been recompiled with the new arch/sm options, so the performance gain here may come from the higher boost clock.

LiXiangyang

Newcomer

It's more Flops because the trend in graphics is "moar compute": For a great many things, there's a more or less costly version available via compute shader. From micropolygons to post-FX.

Kepler was bad in that regard, yes. But here, I think it's a sensible choice, even though Gaming-Ampere probably cannot keep all its units busy at the same time. But then, its power draw is high enough as it is, judging from TDP numbers.

Well, if Volta's TDP is any indication, the higher TDP may already take the extra FP32 unit into consideration.

For instance, regardless of the computing load (large sgemm and Tensor-based hgemm included), the real power draw of my Volta rarely reaches more than 80% of its TDP unless you do FP64 extensively.

Wouldn't that be the task of the drivers and not the software application?

Yes, the application doesn't know anything about the hardware configuration of the ALUs. Also, it's not like the application/warp/thread sees multiple FP32 ALUs anyway. It's just that the dispatcher now has a 2nd FP32 slot to issue warps to each clock cycle.

LiXiangyang

Newcomer

The application may not know the hardware configuration much (well, unless you are an informed programmer), but the compiler sure does...

The application may not know the hardware configuration much (well, unless you are an informed programmer), but the compiler sure does...

Yeah, the compiler knows for sure but I'm not sure it matters. The compiler's job is to statically schedule math instructions within a warp. It can do so because it knows how many cycles each math operation will take and when the output of that operation will be available for input to the next math op. The dispatcher then has a bunch of ready warps to choose from each cycle based on hints from the compiler. Presumably, none of that changes with Ampere.

The only thing that changes is that now there are more opportunities for a ready FP32 instruction to be issued each clock by the dispatcher. With Turing those instructions could be blocked because the lone FP32 pipeline was busy.
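To make that concrete, here is a toy issue model in Python. It is only a sketch under simplifying assumptions that are mine, not Nvidia's documented scheduler: one SM sub-partition, at most one warp instruction issued per clock, each pipe is 16 lanes wide so a 32-thread warp instruction occupies it for 2 clocks, and there is always a ready warp of whichever kind is needed. The function and pipe names are made up for illustration.

```python
# Toy model: how many clocks until a pool of ready warp instructions has
# fully passed through the math pipes. All parameters are assumptions.
TURING_LIKE = [("fp32",), ("int32",)]          # one FP32 pipe, one INT32 pipe
AMPERE_LIKE = [("fp32",), ("int32", "fp32")]   # second pipe also accepts FP32

def cycles_to_drain(n_fp32, n_int32, pipes, occupancy=2):
    """Greedy dispatcher: each clock, issue at most one ready instruction
    to the first free pipe that accepts it (pipes try kinds in listed order)."""
    remaining = {"fp32": n_fp32, "int32": n_int32}
    free_at = [0] * len(pipes)   # clock at which each pipe is free again
    cycle = 0
    while remaining["fp32"] or remaining["int32"]:
        issued = False
        for i, accepts in enumerate(pipes):
            if free_at[i] > cycle:
                continue                      # pipe still busy: instruction blocked
            for kind in accepts:
                if remaining[kind]:
                    remaining[kind] -= 1
                    free_at[i] = cycle + occupancy
                    issued = True
                    break
            if issued:
                break                         # only one issue per clock
        cycle += 1
    return max(free_at)

if __name__ == "__main__":
    for label, (fp, integer) in {"pure FP32": (8, 0), "50/50 mix": (4, 4)}.items():
        t = cycles_to_drain(fp, integer, TURING_LIKE)
        a = cycles_to_drain(fp, integer, AMPERE_LIKE)
        print(f"{label}: Turing-like {t} clocks, Ampere-like {a} clocks")
```

In this model a pure FP32 stream drains in 9 clocks instead of 16 on the Ampere-like layout, while an even FP32/INT32 mix takes 9 clocks on both, which is the "blocked because the lone FP32 pipeline was busy" effect and nothing more.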
