Infinisearch

Veteran

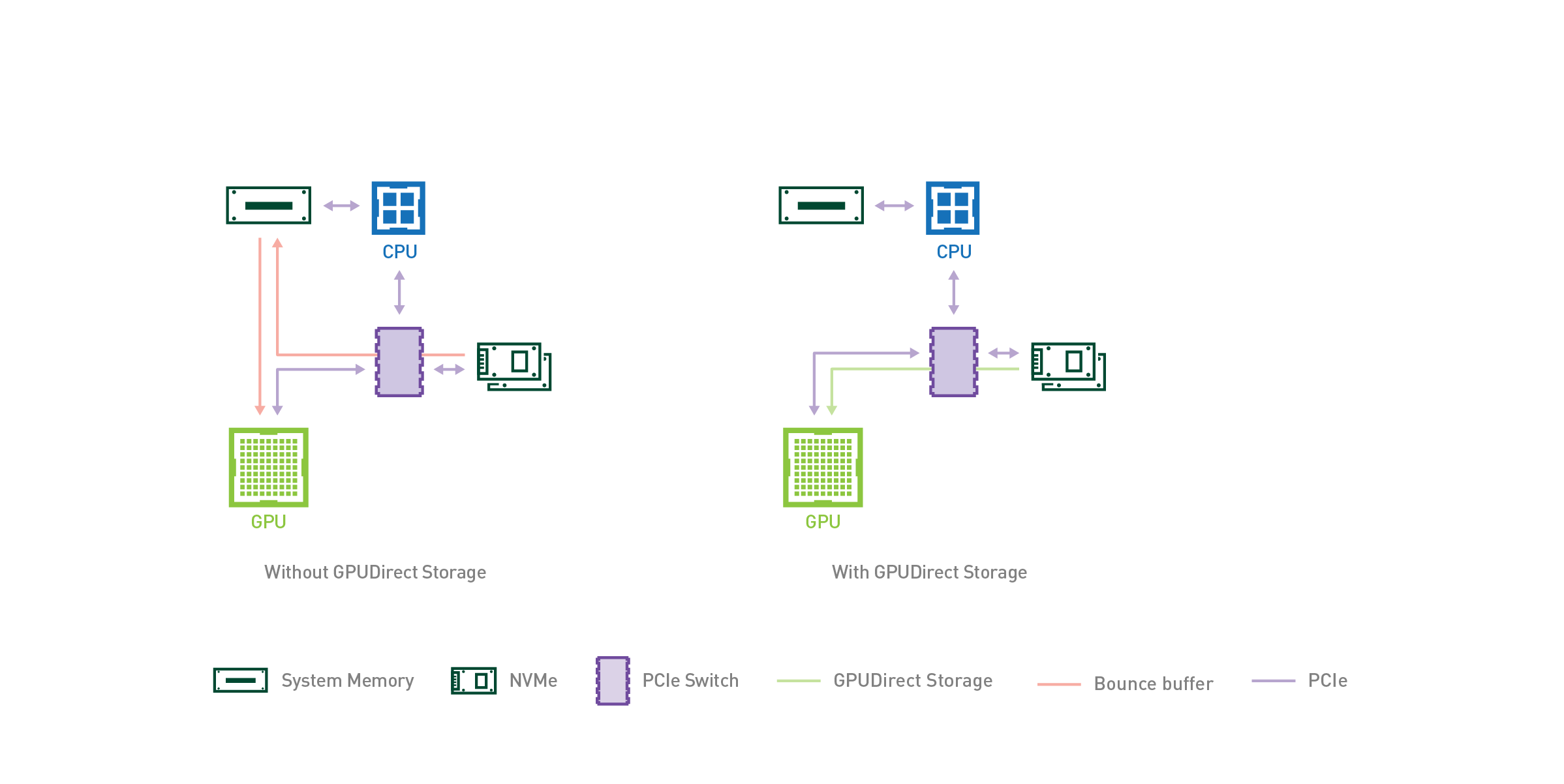

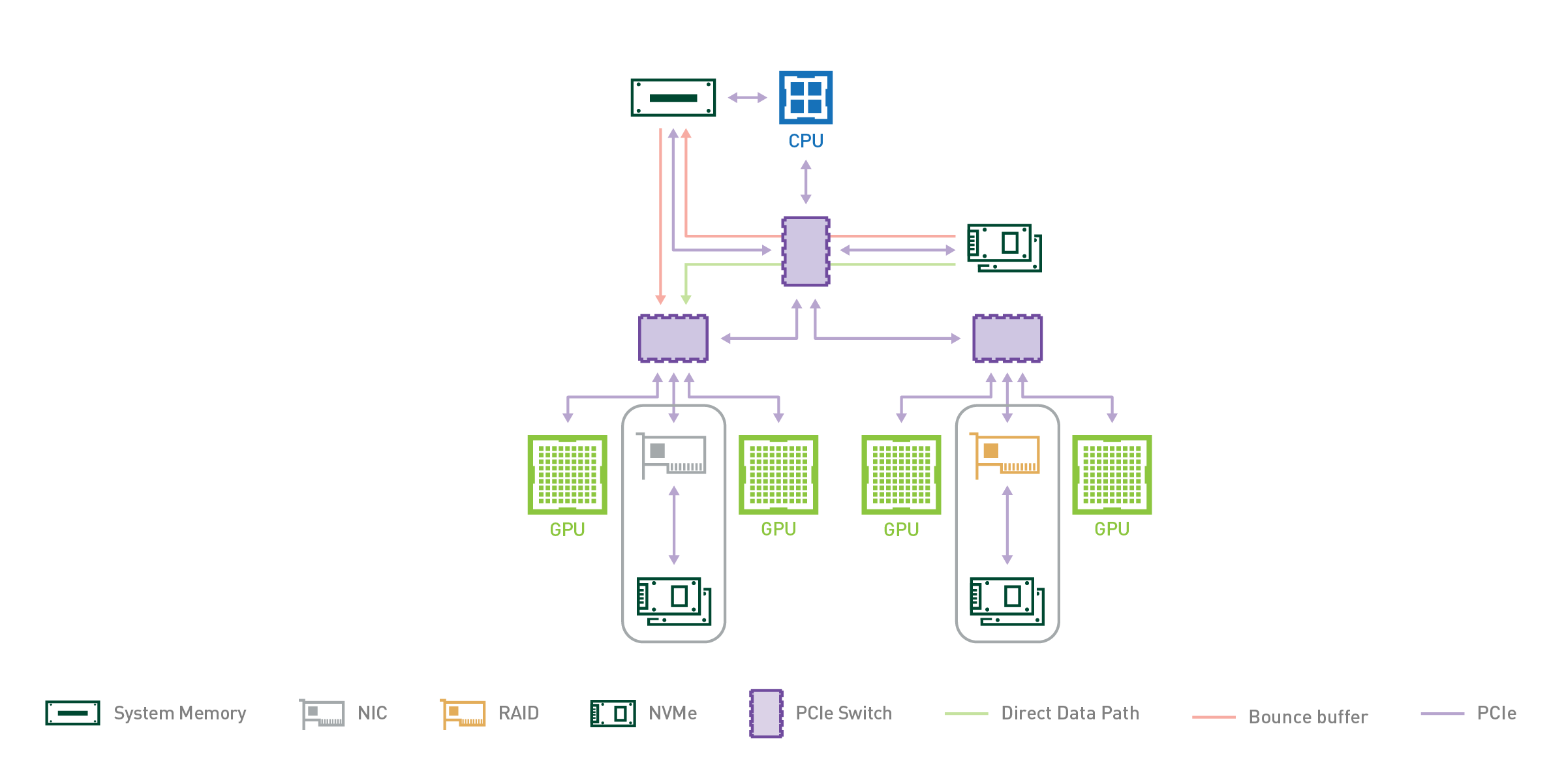

I just remembered a theory I had from the console world. Suppose I treat the 6GB of lower-speed RAM as 3 channels. Maybe that pool is dedicated to the OS plus a 'bounce buffer' that the GPU uses when outputting to the high-speed memory. Maybe PC GPU/OS drivers could do the same thing?

edit - Xbox Series X: 16GB RAM total, 10GB high speed and 6GB lower speed.
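
For anyone who wants to sanity-check the "3 channels" idea, here is a rough back-of-the-envelope sketch. The numbers are assumptions, not from this thread: a 320-bit GDDR6 bus at 14 Gbps per pin (the publicly stated Series X configuration), the slower 6GB pool reachable over only 192 of those bits, and a PC-style 64-bit channel width for the comparison. The function and constant names are just illustrative.

```python
# Rough bandwidth arithmetic for the Series X memory split.
# All constants below are assumptions taken from public specs,
# not measurements.

GBPS_PER_PIN = 14          # assumed GDDR6 per-pin data rate
FULL_BUS_BITS = 320        # assumed bus width behind the 10GB fast pool
SLOW_POOL_BITS = 192       # assumed bus width behind the 6GB slow pool
PC_CHANNEL_BITS = 64       # assumed PC-style memory channel width


def bandwidth_gb_s(bus_width_bits, gbps_per_pin=GBPS_PER_PIN):
    """Peak bandwidth in GB/s: bits on the bus * rate per pin / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8


fast_pool = bandwidth_gb_s(FULL_BUS_BITS)
slow_pool = bandwidth_gb_s(SLOW_POOL_BITS)
equivalent_channels = SLOW_POOL_BITS // PC_CHANNEL_BITS

print(f"fast 10GB pool: {fast_pool:.0f} GB/s")
print(f"slow 6GB pool:  {slow_pool:.0f} GB/s")
print(f"slow pool as 64-bit channels: {equivalent_channels}")
```

Under those assumptions the slow pool works out to the equivalent of three 64-bit channels, which is where the "pretend it is 3 channels" framing lines up. It says nothing about whether the OS or a bounce buffer actually lives there.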

