Accord1999

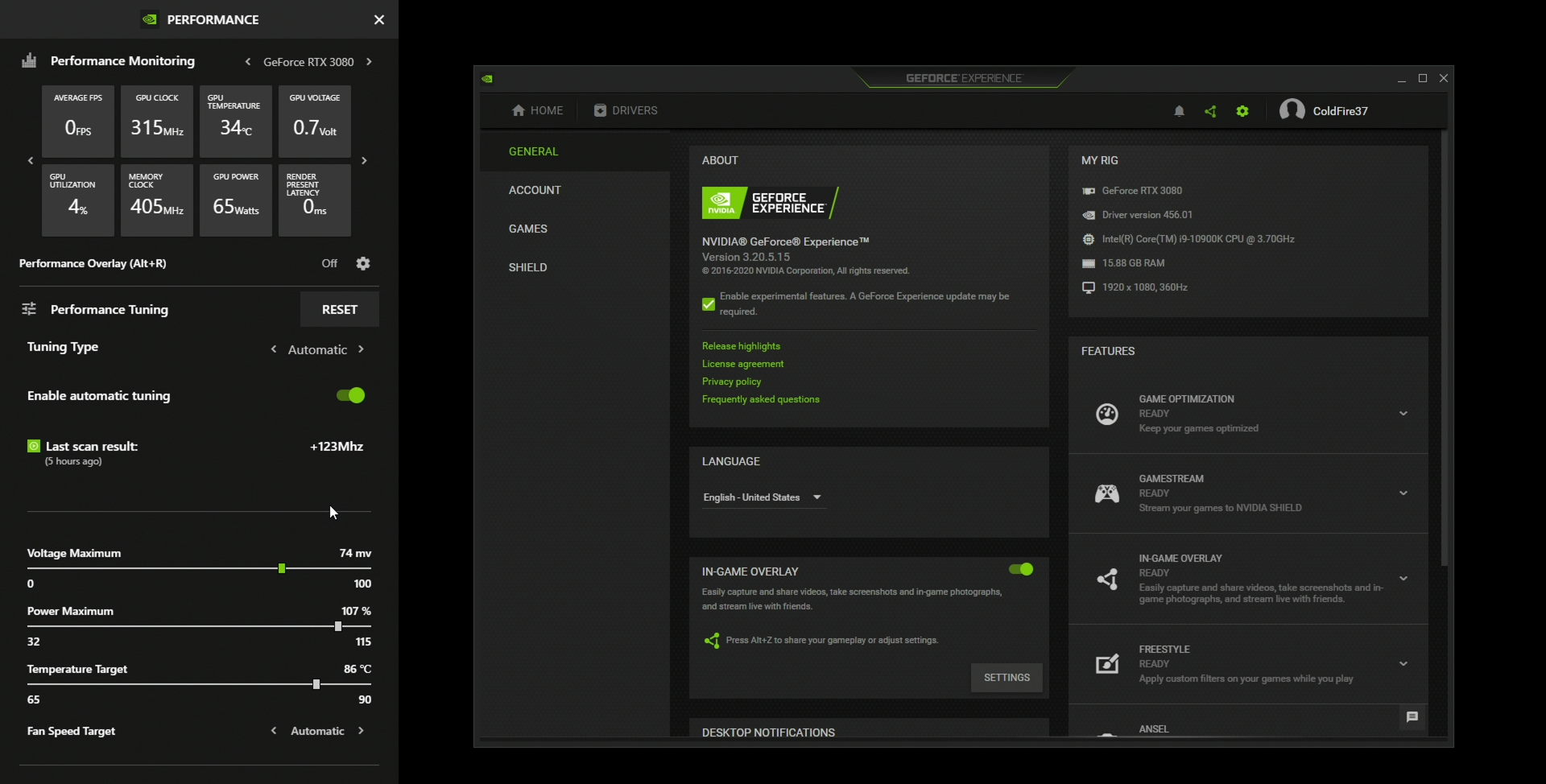

With Nvidia's power limit feature, you can go in the opposite direction: you set the maximum board power you want to use, the video card downclocks itself to a stable level, and then you tweak upwards.

The problem with undervolting is that you basically run a benchmark at 100% load and slowly adjust your voltage/frequency curve so that it reaches peak frequency at lower and lower voltages, until you start getting errors. You never really know how well it's going to work or how low you'll be able to go, especially on a new architecture. So you're probably going to be running the GPU at 100% for some time, near stock voltage, as you lower it a bit at a time.
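To illustrate why the undervolt route keeps the card at full load near stock voltage for so long, here is a minimal sketch of the step-down search. It assumes stock voltage is stable and uses a hypothetical `is_stable(mv)` callback standing in for one full 100% load benchmark run. The voltages and step size in the usage are made-up illustrative numbers, not specs for any real card.

```python
from typing import Callable, List, Tuple


def find_min_stable_voltage(
    stock_mv: int,
    step_mv: int,
    is_stable: Callable[[int], bool],  # hypothetical: one full-load benchmark run at this voltage
    floor_mv: int = 0,
) -> Tuple[int, List[int]]:
    """Lower the voltage one step at a time from stock until a run fails.

    Assumes stock voltage is stable. Returns the lowest voltage that passed
    and every voltage tested (each entry = one benchmark run at 100% load).
    """
    tested: List[int] = []
    last_good = stock_mv
    v = stock_mv - step_mv
    while v >= floor_mv:
        tested.append(v)
        if not is_stable(v):
            break  # first error: the previous step was the limit
        last_good = v
        v -= step_mv
    return last_good, tested


if __name__ == "__main__":
    # Illustrative only: pretend this chip happens to be stable down to 875 mV.
    best, runs = find_min_stable_voltage(1050, 25, lambda mv: mv >= 875)
    print(best, len(runs), runs)
```

Because you don't know the limit in advance, every run before the first failure is spent close to stock voltage, which is the cost described above. The power-limit approach instead starts from a low, already stable point and searches upward.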