It rarely exceeds 100W in games.

Yep, but that's exactly the point: one short spike during the Turbo window and an undersized PSU shuts down and your PC freezes.

Nvidia Ampere Discussion [2020-05-14]

- Thread starter Man from Atlantis

- Tags

- nvidia

This is a perf graph from the iray developers comparing 3080 to the Quadro cards. At almost double the CUDA cores and likely some clock speed differences and other efficiencies (no tensor denoising used), the 3080 is over double the 6000 RTX. So for pure rendering it's definitely scaling well with the extra bandwidth.

https://blog.irayrender.com/post/628125542083854336/after-yesterdays-announcement-of-the-new-geforce

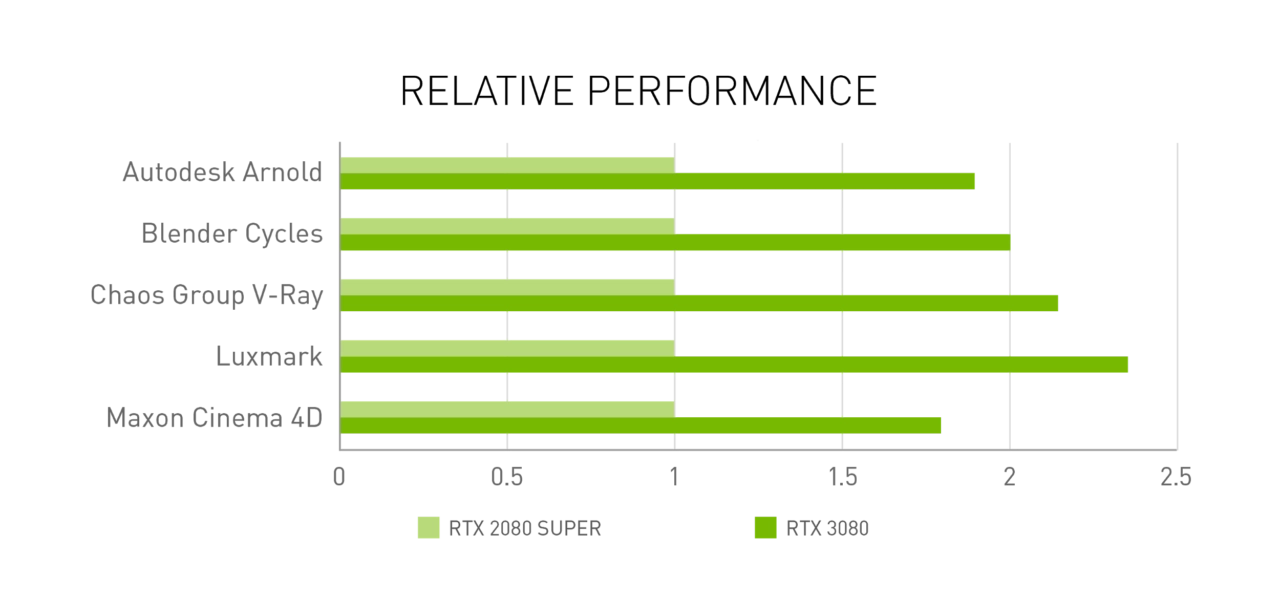

nVidia published a few numbers, too:

https://blogs.nvidia.com/blog/2020/09/01/studio-geforce-30-series/

/edit: RTX6000 has a TDP of 295W. That makes Ampere up to 90% more efficient...

Benetanegia

Regular

Hopefully someone will also post some SGEMM benchmarks soon and settle it once and for all.

Just as a little sidenote: iray is owned by Nvidia, so these are not independent data points.

Using the performance data gathered by Digital Foundry, I calculate the 3080 will be approximately 40-45% faster than the 2080 Ti in traditionally rasterized games. The delta in raytraced games should be larger.

I'm a little concerned about the 10GB VRAM though. At 3440x1400 it's no problem, but if I swap out my ultrawide for a 4K OLED display there are games that will definitely use this much VRAM (and more). I hope 20GB cards happen soon after launch. The fact that none have been announced yet is slightly concerning, though.

That's for Ray Intersection, what about BVH Traversal?

AFAIK, intersection must require BVH traversal, otherwise I'm not sure what you'd be comparing the ray against.

If we're working together to break down what Nvidia did; their white paper stated their metrics work like this:

RTX-OPS WORKLOAD-BASED METRIC EXPLAINED

To compute RTX-OPs, the peak operations of each type based is derated on how often it is used.

In particular:

- Tensor operations are used 20% of the time

- CUDA cores are used 80% of the time

- RT cores are used 40% of the time (half of 80%)

- INT32 pipes are used 28% of the time (35% of 80%)

For example, RTX-OPS = TENSOR * 20% + FP32 * 80% + RTOPS * 40% + INT32 * 28%

Figure 44 shows an illustration of the peak operations of each type for RTX 2080 TI

Plugging in those peak operation counts results in a total RTX-OPs number of 78.

For example, 14 * 80% + 14 * 28% + 100 * 40% + 114 * 20%.
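The weighted sum above is easy to check by hand or in a few lines of code. The following is a minimal sketch, assuming the peak figures from the worked example (14 for FP32, 14 for INT32, 100 for RT, 114 for tensor); the dictionary and function names are made up for illustration.

```python
# Peak throughput figures for the RTX 2080 Ti, taken from the worked example
# in the post (tera-ops; the RT entry is the "100 tera-ops" ray tracing figure).
PEAK_2080_TI = {"fp32": 14.0, "int32": 14.0, "rt": 100.0, "tensor": 114.0}

# Utilisation weights from the white paper's RTX-OPS definition.
WEIGHTS = {"fp32": 0.80, "int32": 0.28, "rt": 0.40, "tensor": 0.20}


def rtx_ops(peak, weights=WEIGHTS):
    """Derate each unit's peak throughput by how often it is used and sum."""
    return sum(peak[unit] * weights[unit] for unit in weights)


if __name__ == "__main__":
    total = rtx_ops(PEAK_2080_TI)
    # 11.2 + 3.92 + 40 + 22.8
    print(f"RTX-OPS for 2080 Ti: {total:.2f} (rounds to {round(total)})")
```

The sum comes out just under 78, which matches the figure quoted from the white paper.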

Nvidia has a rating of 10TF per Giga Ray according to their white paper. So 10 Gigaray was 100 TF of power.

In Pascal, ray tracing is emulated in software on CUDA cores, and takes about 10 TFLOPs per Giga Ray, while in Turing this work is performed on the dedicated RT cores, with about 10 Giga Rays of total throughput or 100 tera-ops of compute for ray tracing.

When we compare it to the new metrics:

Jensen: Using Xbox Series X methodology, the RT cores are effectively a 34 teraflop shader, and Turing has an equivalent of 45 teraflops while ray tracing.

From Eurogamer:

"Without hardware acceleration, this work could have been done in the shaders, but would have consumed over 13 TFLOPs alone," says Andrew Goossen. "For the Series X, this work is offloaded onto dedicated hardware and the shader can continue to run in parallel with full performance. In other words, Series X can effectively tap the equivalent of well over 25 TFLOPs of performance while ray tracing."

We still have no way to compare this to 380G/sec ray-box intersection and 98/sec ray-tri peak.

But it seems pretty straight forward here:

34 TF RT shader + 11 TF of compute power = 45 TF for 2080 ? (I think 2080TI is 14 TF right?)

13 TF RT shader + 12 TF of compute power = 25 TF for XSX

That sort of messes things up for the Gigaray stuff above. There's no conversion I can make sense of, because 10 Gigarays used to be 100 TF of power. Now they're saying it's 34 TF of compute power.

If you just divide down (100/34), it's a 1:2.94 ratio.

So 6 Gigarays = 60 TF (old metric) = 20 TF (new metric)

And 5 Gigarays = 50 TF (old metric) = 17 TF of RT performance (new metric)

Hmm, I dunno. That leaves the Xbox Series X sitting way back, at an equivalent of about 3.9 Gigarays.

Not sure if I believe that. We'd have to assume the shading performance is exactly the same when it comes to computing RT intersections on compute shaders
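For anyone who wants to redo the conversion, here is a rough sketch of the arithmetic in this post. It assumes, as the post does, that Turing's 10 Gigarays map to the 34 TF figure and that the relationship is linear; the constant and function names are invented for illustration.

```python
# Figures quoted in the post.
TURING_GIGARAYS = 10.0      # white paper: ~10 Giga Rays on Turing
OLD_TF_PER_GIGARAY = 10.0   # white paper: ~10 TFLOPs per Giga Ray (Pascal emulation)
TURING_RT_TF_NEW = 34.0     # "XSX methodology" RT figure for Turing
XSX_RT_TF_NEW = 13.0        # RT figure quoted for Series X

# Old metric vs new metric for the same hardware: 100 TF vs 34 TF.
RATIO = (TURING_GIGARAYS * OLD_TF_PER_GIGARAY) / TURING_RT_TF_NEW

# Assumption: linear scaling, so one Giga Ray is worth 3.4 "new" TF.
NEW_TF_PER_GIGARAY = TURING_RT_TF_NEW / TURING_GIGARAYS


def gigarays_to_new_tf(gigarays):
    return gigarays * NEW_TF_PER_GIGARAY


def new_tf_to_gigarays(tf):
    return tf / NEW_TF_PER_GIGARAY


if __name__ == "__main__":
    print(f"old:new ratio    = {RATIO:.2f}")
    print(f"6 Gigarays       = {gigarays_to_new_tf(6):.1f} TF")
    print(f"5 Gigarays       = {gigarays_to_new_tf(5):.1f} TF")
    print(f"XSX 13 TF equiv. = {new_tf_to_gigarays(XSX_RT_TF_NEW):.2f} Gigarays")
```

Under these assumptions the Series X lands at roughly 3.8 Gigarays, close to the 3.9 estimated in the post (which rounds the ratio to 3).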

Benetanegia

Regular

AFAIK, to intersect must require BVH traversal, otherwise I'm not sure what you're be comparing the ray against.

XSX requires a BVH traversal shader. Nvidia claims their RT cores do full BVH traversal without the need for a shader taking care of it, a "shoot ray and forget" kind of thing, iirc. It is also possible to build your own custom BVH shader, but it would be much slower, again iirc.

Using the performance data gathered by Digital Foundry, I calculate the 3080 will be approximately 40-45% faster than the 2080 Ti in traditionally rasterized games. The delta in raytraced games should be larger.

I'm a little concerned about the 10GB VRAM though. At 3440x1400 it's no problem, but if I swap out my ultrawide for a 4K OLED display there are games that will definitely use this much VRAM (and more). I hope 20GB cards happen soon after launch. The fact that none have been announced yet is slightly concerning, though.

How did you derive those numbers?

DF reported 34.22 fps for Borderlands 3, with a 3080 uplift of 81%. Settings are 4k, BadAss, DX12, TAA

2080 - 34.22

2080ti - 49 https://gamegpu.com/action-/-fps-/-tps/borderlands-3-test-gpu-cpu-2019

3080 - 61.9

(These numbers also seem to line up exactly with performance graph for 3080 on Nvidia website)

3080 vs 2080ti = 26%

SoTTR, 4k, Highest, TAA 3080 uplift over 2080 69%

2080 - 51 https://gamegpu.com/action-/-fps-/-tps/shadow-of-the-tomb-raider-test-gpu-cpu-2018

2080ti - 65

3080 - 86

3080 vs 2080ti = 32%

Control, 4k High, TAA, 3080 uplift over 2080 77%

2080 - 35 https://gamegpu.com/action-/-fps-/-tps/control-test-gpu-cpu-2019

2080ti - 46

3080 - 62

3080 vs 2080ti = 34%

Doom Eternal, 4k, Ultra Nightmare, 3080 uplift over 2080 "above 80%"

2080 - 76 https://gamegpu.com/action-/-fps-/-tps/doom-eternal-test-gpu-cpu

2080ti - 95

3080 - 137

3080 vs 2080ti = 44%

Control, 4k, High, DXR High, DLSS Perf, 3080 uplift over 2080 76%

2080 - 37 https://gamegpu.com/action-/-fps-/-tps/control-test-gpu-cpu-2019

2080ti - 48

3080 - 65

3080 vs 2080ti = 35%
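The derivation above can be reproduced with a short script. This is only a sketch of the same arithmetic: it treats every uplift percentage as relative to the 2080 (which is what the posted 3080 fps figures imply), uses the 2080 and 2080 Ti fps values listed in the post, and takes Doom Eternal's "above 80%" as exactly 80%, so that row is a lower bound. The names are made up for illustration.

```python
# (title, 2080 fps, 2080 Ti fps, stated 3080 uplift over the 2080)
RESULTS = [
    ("Borderlands 3", 34.22, 49.0, 0.81),
    ("Shadow of the Tomb Raider", 51.0, 65.0, 0.69),
    ("Control", 35.0, 46.0, 0.77),
    ("Doom Eternal", 76.0, 95.0, 0.80),  # "above 80%", so a lower bound
    ("Control (DXR High, DLSS Perf)", 37.0, 48.0, 0.76),
]


def estimate(fps_2080, fps_2080ti, uplift):
    """Return (estimated 3080 fps, fractional lead of the 3080 over the 2080 Ti)."""
    fps_3080 = fps_2080 * (1.0 + uplift)
    lead_over_ti = fps_3080 / fps_2080ti - 1.0
    return fps_3080, lead_over_ti


if __name__ == "__main__":
    for title, fps_2080, fps_2080ti, uplift in RESULTS:
        fps_3080, lead = estimate(fps_2080, fps_2080ti, uplift)
        print(f"{title:32s} 3080 ~ {fps_3080:6.1f} fps, "
              f"{lead * 100:4.1f}% over 2080 Ti")
```

The results land within about a percentage point of the figures posted, with the small differences coming from rounding the 3080 fps before dividing. Note that the 2080 Ti numbers come from a different site than the DF uplift figures, so test scenes and drivers may not match.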

Interestingly enough, the delta between Control with RT on and Off is almost non-existent

I guess the concern for me is that Nvidia states that the 2060 is the bare minimal amount of performance needed to do RT. But according to all of this, it's still nearly 50% faster than XSX. I think benchmarks are going to be needed in order for us to really figure out deltas.

Benetanegia

Regular

I guess the concern for me is that Nvidia states that the 2060 is the bare minimal amount of performance needed to do RT.

Is it because of RT core capability alone tho? Is it because of general shader performance? BVH construction/updating? Etc.

But according to all of this, it's still nearly 50% faster than XSX.

The 2060 being considered the minimum by Nvidia (which, btw, would rather sell their expensive cards) in the PC space does not preclude it from being faster than the XSX. Compromises are both more common and more accepted in the console space. Idk what the PS5 RT capabilities are, but some of the RT demos they showed were full of compromises, like RT being rendered at quarter resolution AND checkerboarded on top of that.

I think benchmarks are going to be needed in order for us to really figure out deltas.

Yeah, but from what I've heard it would be very difficult to make a level playing field to test RT capabilities alone, maybe even to just define them, cause just to name a few, scene complexity, type of BVH and depth of BVH could have a very different impact on the different methods.

We can claim exactly the same as what is claimed for the console IO systems. Until we have independent benchmarks, both are simply advertised capabilities from the manufacturers.

There's no technical reason at this stage to doubt Nvidia's claims any more than there is to doubt Sony's or Microsoft's.

Isn't there a separate hardware block in consoles that handles it? Unless there's a custom hardware block in these GPUs, it will be trading graphics work for decompression. Microsoft has also stated that they hope to get a developer preview out sometime next year. Doesn't sound like it's coming too soon. Then you have to factor in whether or not developers are going to use it in their PC version when only a small portion of the market has GPUs that support it initially.

Yeah, at this point we'll really almost have to just ask developers, unless we happen to have the exact same DXR 1.0 or DXR 1.1 code running on XSX and an equivalent PC part.

Like you said, devs could roll their own traversal (I think many will, as the 4 levels of standard DXR may be "too much" for consoles if they want to maintain a performance they like), use different ray length limitations, etc.

I'm pretty certain there's plenty of GPU resources to spare on Nvidia Ampere in comparison to consoles.

It's still a different scenario. We don't know if or how it will impact GPU performance.

If you read the RadGames Developer Tweets, they give us a general idea.

What's the name of the Twitter account?

Radgames comes up quite a few times in the Console Tech section. Here are some posts that refer to it. Be sure to read the full Twitter threads.

Mostly that you could get 60-120 GB/s of textures decompressed if you used the entire PS5 GPU (10.28 TF). The Ampere has near that much TF to spare over and above the PS5.

Naturally, you wouldn't need to use that much, but it gives you an idea on how powerful the GPUs are when it comes to decompression.
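To put "you wouldn't need to use that much" into rough numbers, here is a back-of-the-envelope sketch. It assumes decode throughput scales linearly with the share of the GPU used, which the linked thread does not claim (it explicitly says peak whole-GPU decode is not the intended use and that utilization is imperfect), and it uses a purely hypothetical target streaming rate. The doubling for memory traffic follows the thread's remark that you pay about the same again in read bandwidth.

```python
# Whole-GPU BC7Prep decode range on PS5, as quoted from the RAD thread (GB/s output).
PEAK_DECODE_GBPS = (60.0, 120.0)

# Hypothetical target rate of decoded texture data, for illustration only.
TARGET_GBPS = 5.0


def gpu_share(target_gbps, peak_gbps):
    """Share of the GPU needed, ASSUMING throughput scales linearly with GPU share."""
    return target_gbps / peak_gbps


def memory_traffic(target_gbps):
    """Output written plus roughly the same amount read, per the thread."""
    return 2.0 * target_gbps


if __name__ == "__main__":
    low_peak, high_peak = PEAK_DECODE_GBPS
    worst = gpu_share(TARGET_GBPS, low_peak)
    best = gpu_share(TARGET_GBPS, high_peak)
    print(f"GPU share for {TARGET_GBPS} GB/s: {best:.1%} to {worst:.1%}")
    print(f"Approx. memory traffic: {memory_traffic(TARGET_GBPS):.0f} GB/s")
```

Treat the output as an order-of-magnitude estimate only; real async compute jobs share the GPU with rendering and will not scale this cleanly.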

https://forum.beyond3d.com/posts/2134570/

https://forum.beyond3d.com/posts/2151140/

https://forum.beyond3d.com/posts/2134405/

External references --

http://www.radgametools.com/oodlecompressors.htm

http://www.radgametools.com/oodletexture.htm

https://cbloomrants.blogspot.com/

Oodle is so fast that running the compressors and saving or loading compressed data is faster than just doing IO of uncompressed data. Oodle can decompress faster than the hardware decompression on PS4 and XBox One.

GPU benchmark info thread unrolled: https://threadreaderapp.com/thread/1274120303249985536

A few people have asked the last few days, and I hadn't benchmarked it before, so FWIW: BC7Prep GPU decode on PS5 (the only platform it currently ships on) is around 60-120GB/s throughput for large enough jobs (preferably, you want to decode >=256k at a time).

That's 60-120GB/s output BC7 data written; you also pay ~the same in read BW. MANY caveats here, primarily that peak decode BW if you get the entire GPU to do it is kind of the opposite of the way this is intended to be used.

These are quite lightweight async compute jobs meant to be running in the background. Also the shaders are very much not final, this is the initial version, there's already several improvements in the pipe. (...so many TODO items, so little time...)

Also, the GPU is not busy the entire time. There are several sync points in between so utilization isn't awesome (but hey that's why it's async compute - do it along other stuff). This is all likely to improve in the future; we're still at v1.0 of everything.

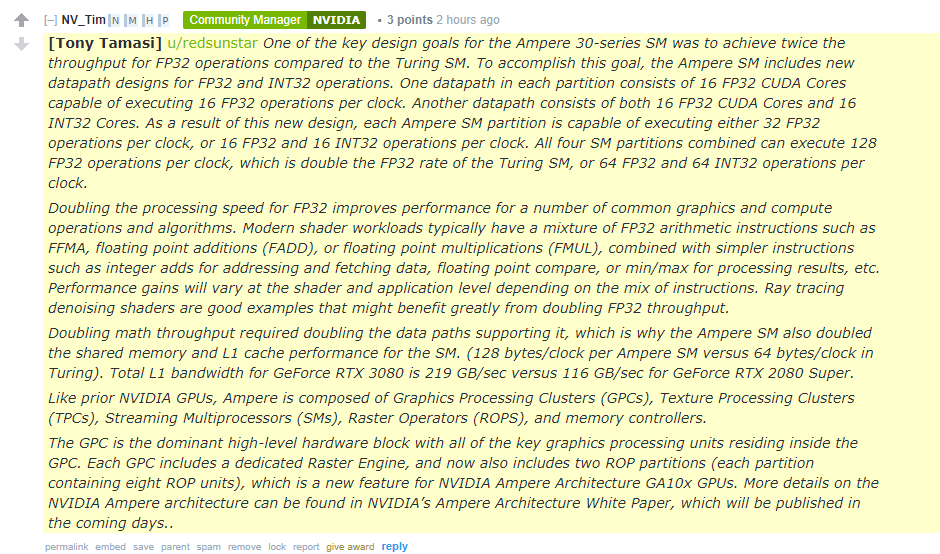

nVidia explains how they have achieved 2x FP32 throughput, enjoy:
