Was the thermal density of CPU/GPU discussed before?

It's come up before when discussing the downside to node shrinks and their effect on performance scaling and cooling solutions. I'm not sure what to make of the claim about creating a whole set of clock points that lead to equal thermal density. I can think of some advantages to it for the purposes of reliability, but I'm not sure what quirk to the chip or the cooler would make equal density a specific target like that.

These "cache scrubbers" on the PS5 I keep hearing about... can someone please explain to me what they are? I keep hearing that they are there to partially mitigate the bandwidth issues, but how does that work, if that's indeed what they're for?

Cerny discussed the scenario where the SSD loads new data into memory, and the GPU's caches have old versions of the data. The standard way of dealing with that is to clear the GPU's caches, while the PS5's coherence engines send the affected addresses to the GPU, and the cache scrubbers are able to scan through and clear those specific addresses from all the caches. While there could be a bandwidth element, I think the big motivator is performance like the volatile flag was for the PS4. Cache flushes involve clearing caches of most or all of their contents, even if only a little of it was problematic. That hurts the performance of everything else running on the GPU by forcing extra writes to memory and then forcing data to be read back in. The operation itself is also long because there are many cache lines, and the process involves stalling the whole GPU while it is going on. Presumably, the PS5 minimizes the amount of stalling or avoids stalling in many cases. However, if the goal were only reducing bandwidth, stalling the GPU would reduce it more.
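To make the flush-versus-scrub difference concrete, here is a toy sketch. It is not how the PS5 hardware works internally; `ToyCache`, the 64-byte line size, the flat dictionary standing in for the cache hierarchy, and the choice to drop stale lines without writing them back are all assumptions made for illustration.

```python
from dataclasses import dataclass, field

LINE_SIZE = 64  # bytes per cache line; an assumed value for this toy model


@dataclass
class ToyCache:
    # Maps line-aligned address -> dirty flag. Real GPU caches are multi-level
    # and set-associative; this flat dict is only an illustration.
    lines: dict = field(default_factory=dict)

    def touch(self, addr, dirty=False):
        """Bring the line containing addr into the cache."""
        base = addr - addr % LINE_SIZE
        self.lines[base] = self.lines.get(base, False) or dirty

    def full_flush(self):
        """Write back every dirty line, then drop the entire contents."""
        stats = {"writebacks": sum(self.lines.values()), "dropped": len(self.lines)}
        self.lines.clear()
        return stats

    def scrub(self, start, length):
        """Drop only the lines overlapping [start, start + length).

        Assumption: the range was just overwritten in memory, so the cached
        copies are stale and are discarded rather than written back.
        """
        first = start - start % LINE_SIZE
        stale = [a for a in self.lines if first <= a < start + length]
        for a in stale:
            del self.lines[a]
        return {"dropped": len(stale)}


def make_cache():
    """Fill a cache with 1024 lines, every fourth one dirty."""
    cache = ToyCache()
    for i in range(1024):
        cache.touch(i * LINE_SIZE, dirty=(i % 4 == 0))
    return cache


flushed = make_cache()
flush_stats = flushed.full_flush()

scrubbed = make_cache()
scrub_stats = scrubbed.scrub(start=8 * LINE_SIZE, length=4 * LINE_SIZE)

print("flush:", flush_stats, "lines left:", len(flushed.lines))
print("scrub:", scrub_stats, "lines left:", len(scrubbed.lines))
```

In this model the flush throws away every line and forces a write-back for each dirty one, while the scrub removes only the four lines in the overwritten range and leaves the rest of the working set in place.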

I am curious if the 36 CU decision is related to BC, though. It's exactly the same as the PS4 Pro, and Sony's backwards compatibility has often been achieved by downclocking and handling workloads in as close to original hardware configurations as possible.

I dunno. The GPU has cache scrubbers, dynamic boost clocks, and built-in custom BC hardware. The CPU is a server-derived core that in other situations could turbo to just short of 5 GHz, and the PS5 included timing modifications (firmware or hardware?) to make it act more like a netbook processor from 7 years ago on the fly. All this in a many-core SOC with many custom processors and a transistor budget likely greater than 10 billion.

The GPU and CPU are capable of virtualizing their resources or changing modes to pretend to be something else, or to have different clocks on a whim.

I'd worry if the designers were stumped by the number 36.

I wouldn't think that the CPU would take well to massively increased latency, and I'd expect that the SSD will already be quite busy using all of its available bandwidth for moving data into RAM (the thing it's actually designed for). This seems like a bad idea, even if it were technically possible.

Intel has Data Direct I/O, which allows for network interfaces to load directly to the L3. I haven't seen an AMD version of it, or they gave it a different name and I failed to find it. There might be more benefit with the network since there's more to packet processing versus a local disk read.

The difference between a typical game and Furmark is very significant, to the point where it is recommended to not even RUN Furmark because it might damage your GPU.

It is not unrealistic to have some parts of code that will run outside the TDP of any hardware and devs should absolutely make sure their code isn't doing this for long periods.

I think it's a mark of flawed hardware if Furmark can damage it. Modern transistor budgets are too large at this point for a design to just hope something doesn't accidentally use an extra fraction of many billions of transistors, particularly with devices that strive for extra circuit performance. I also don't know about the vendors' habit of declaring any application that makes them look bad thermally a "power virus". There are enough examples of accidental power-virus applications, or ones that just happen to be a power virus for one product configuration out of millions, or because of interactions with one driver revision. Nor is it a guarantee that code that's safe today won't cause problems in future hardware, if it removes some bottleneck that was holding things back.

So GPU manufacturers like Nvidia and AMD don't actually know the max power draw for their parts? What they're giving out is some kind of estimate of power draw?

For marketing purposes, and because silicon performance has not been scaling well since the Pentium 4, vendors have been cutting back on guard-bands for voltage and clock, and they've added billions of transistors. There are electrical specifications that outline normal operation, and then theoretical limits and peak transients, with the caveat that those have error bars as well. Regular operation can temporarily lead to spikes considered too short to be relevant, but they happen. Covering for transients means the power delivery system is capable of amounts that would be dangerous if sustained, but to discard that would be to accept far fewer transistors and much of the clock scaling over the last 7 years.

But wouldn't this just go back to hardware design? At least do synthetic tests that just blitz the worst-case functions of the gpu, and either have dynamic clocking that can handle it, or pick a fixed clock that can sustain it?

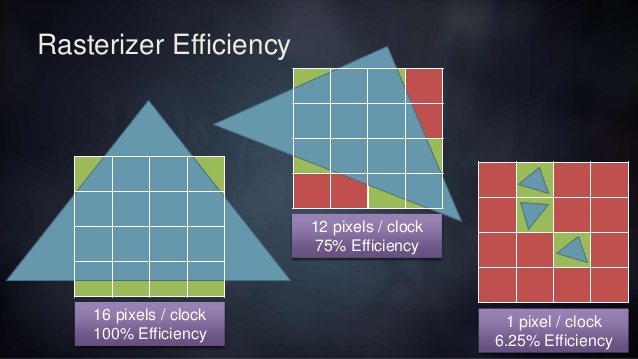

Analysis like that becomes intractable at the level of complexity of modern SOCs, more so once you add the extra turbo and boost measures, DVFS, and power gating. It's much easier to get caught out in a scenario where some combination of hundreds of independent blocks hits a peak, particularly if it's in one of hundreds of clock/voltage points running who knows what sequence of operations that can have physical side effects. Power gating is an example of something that can be brutal in terms of electrical behavior when units are re-connected to power, so it's also a matter of what state the hardware was in before a worst-case function, or maybe what it goes to after.

The gap between average and worst-case is vast, and it only takes a fraction of the chip to breach limits. Due to less than optimal power scaling, the number of transistors needed to breach a given power budget grows slowly, while nodes add up to 2x the number of potential candidates.
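A back-of-the-envelope sketch of that last point, with made-up numbers: assume each node doubles the transistor count while per-transistor power only falls to 0.8x, and that at the starting node the whole chip has to be active to hit the budget. None of these figures come from a real process; `breach_fraction` and its parameters are hypothetical.

```python
def breach_fraction(nodes, density_gain=2.0, power_scale=0.8, start_fraction=1.0):
    """Fraction of the chip that must be active to hit a fixed power budget.

    All parameters are hypothetical:
      density_gain   - transistor-count multiplier per node
      power_scale    - per-transistor power multiplier per node
      start_fraction - fraction of the chip needed to hit the budget at node 0
    """
    rows = []
    for n in range(nodes + 1):
        total = density_gain ** n                    # transistors, relative to node 0
        needed = start_fraction / power_scale ** n   # transistors needed to breach
        rows.append((n, total, needed, min(1.0, needed / total)))
    return rows


for n, total, needed, fraction in breach_fraction(4):
    print(f"node {n}: total x{total:.1f}, needed x{needed:.2f}, fraction {fraction:.1%}")
```

Under those assumptions the number of transistors needed to breach the budget grows by 1.25x per node while the total doubles, so the fraction of the chip that has to light up shrinks by 1.6x each step.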

That may have been inspired by the overheating troubles of the original, and by going with the over-engineered solution in response. The most interesting data-point is power draw by games. Too Human is what, >85%. Does that point to something like 85% being the expected power draw for a PS5 game?

It's 85% of the "power virus" limit. This also assumes that power virus was the best one they had, or that someone didn't find something even better later. It was easier to craft a power virus when the number of units or buses was in single-digits. The Xbox Series X has over 40x the transistor count of that earlier console.

I specifically said the CPU or GPU could avoid making access requests to the RAM by using the SSD directly on code that is less latency sensitive.

For example, a small 100KB script that determines enemy behavior runs on the CPU and fits in a small portion of the L3. Does it ever need to go through the main system RAM?

If going by the definition of cache, strictly speaking it does need to go to RAM at least initially. There are specific features for specific products for things like Intel Xeons that try to change that, but it's not simple and there can be potential issues that aren't worth the trouble for a console.