DavidGraham

Veteran

> wait, what?

The previous version used in Control ran on the CUDA cores.

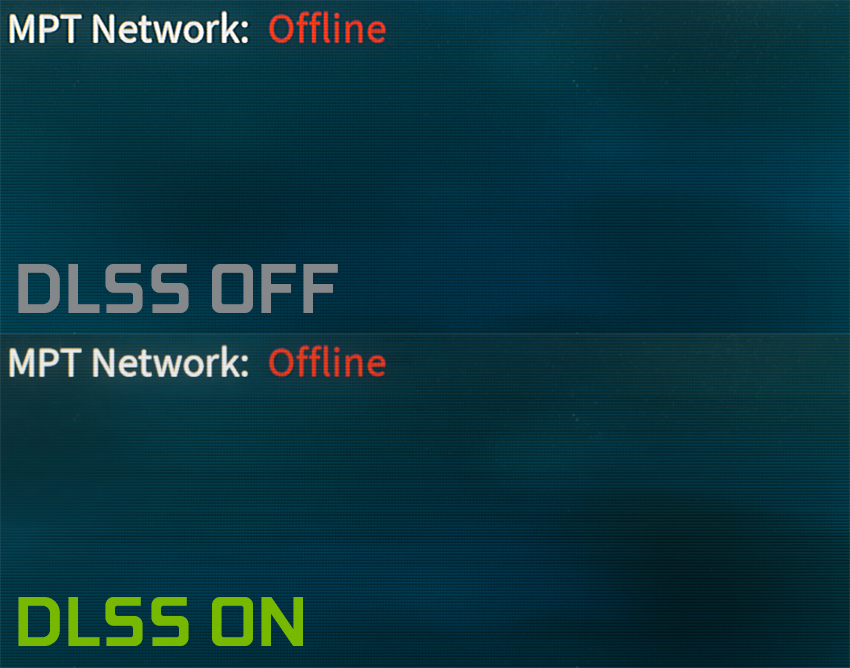

> NVIDIA added DLSS alongside RTX in the indie game Deliver Us the Moon. DLSS is now upgraded to a new version that includes 3 quality tiers: Performance, Balanced, and Quality, controlling the rendering resolution of DLSS.

Yeah, I heard DLSS in the game is quite improved, and it's good to see ongoing improvements! Can't wait for some reviews on the game.

> The previous version used in Control ran on the CUDA cores.

Ah ok, I remember that now. Thanks. So it's the improved method from Control but working on Tensor now.

> wait, what?

The most recent implementation in Control was called DLSS but was just a post-process upscale done on shader cores. Nvidia said more work had to be done to get a version using the tensor cores performant enough.

> The previous version used in Control ran on the CUDA cores.

Have you tried it yet? Please give results! Super interested to hear more.

> The most recent implementation in Control was called DLSS but was just a post-process upscale done on shader cores. Nvidia said more work had to be done to get a version using the tensor cores performant enough.

Will this be the first known run of DLSS on tensor? That means we’re looking at an actual DNN model running real time?

> Will this be the first known run of DLSS on tensor? That means we’re looking at an actual DNN model running real time?

No, there are basically 3 stages for DLSS:

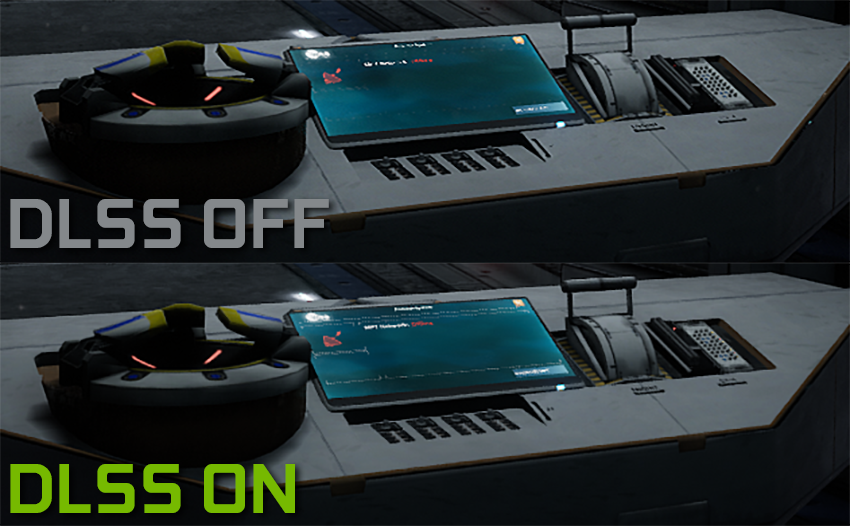

> Have you tried it yet? Please give results! Super interested to hear more.

I tried the game and couldn't spot any difference between DLSS Quality and DLSS off at first glance. Of course more investigation is needed; NVIDIA says it has some advantages and disadvantages.

> I tried the game, I couldn't spot any difference between DLSS Quality and DLSS off at first glance, of course more investigations are needed,

That's basically the impression people posted on resetera. Want DF to review this one ...

> Will this be the first known run of DLSS on tensor? That means we’re looking at an actual DNN model running real time?

There were previous implementations, but IMO they were quite bad and at best simply matched standard upscaling, oftentimes being quite a bit worse.

> There were previous implementations, but IMO they were quite bad and at best simply matched standard upscaling, oftentimes being quite a bit worse.

That's not what he asked! If he wanted your personal opinion I believe he would have so stated.

Hopefully some high quality video comparisons emerge as using screens to judge IQ is meaningless.

> Will this be the first known run of DLSS on tensor? That means we’re looking at an actual DNN model running real time?

Weren't the previous implementations of "DLSS" outside of Control all using the tensor cores? It seems quite obvious to me that they were, given the difference in quality we're seeing and the fact that certain resolutions were only supported on certain graphics cards in some games. I'm referring to the fact that DLSS at lower resolutions was disabled on higher-end cards because of the performance penalty: performance would be worse than just running the game at said lower res.

> Weren't the previous implementations of "DLSS" outside of Control all using the tensor cores? It seems quite obvious to me that they were, given the difference in quality we're seeing and the fact that certain resolutions were only supported on certain graphics cards in some games. I'm referring to the fact that DLSS at lower resolutions was disabled on higher-end cards because of the performance penalty: performance would be worse than just running the game at said lower res.

This information that they ever ran DLSS on CUDA is new to me, so I'll leave it as: I am now unsure.

Anyway, whether it's a DNN done on the tensor cores, or shader based algorithm... doesn't particularly matter to me. The upscaling is impressive and if we can expect this quality or higher quality in the future, plus these improvements to framerates.. I'm happy. We just need more implementations in more games.

CUDA cores can do the same thing as Tensor cores, just at a processing speed disadvantage, but with the flexibility to run a multitude of different types of machine learning algorithms. Tensor cores are less flexible but much faster at what they do. If it was running on CUDA before, you could run a more complex network on Tensor in the same time frame.

> 1. There is no DLSS running only on Tensor cores. That's not possible because tensors can do only math operations but no logic / branches. So every ML algorithm occupies CUDA cores, and tensors help with the bulk of work.

Most ML algorithms will not branch. If branching was involved within the model itself, it would complete in different time frames. Running multiple policies and selecting the best one would involve branching, let's say, but I don't believe that is what is happening here. Neural networks are done by weight and activation functions.
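To make the "weights and activation functions" point concrete, here is a minimal sketch of one fully connected layer in plain Python. It is not NVIDIA's network or anything taken from DLSS; the names `dense_layer`, `WEIGHTS` and `BIASES` and all the numbers are made up for illustration. The only point is that the same sequence of multiply-accumulates runs whatever the input values are, so the layer takes the same number of steps every time.

```python
# Illustrative only: a tiny fully connected layer, NOT the DLSS network.
# All names and values below are hypothetical.

def dense_layer(inputs, weights, biases):
    """Forward pass of one layer: relu(W @ x + b)."""
    outputs = []
    for row, bias in zip(weights, biases):
        acc = bias
        for w, x in zip(row, inputs):
            acc += w * x               # multiply-accumulate, the bulk of the work
        outputs.append(max(0.0, acc))  # ReLU activation
    return outputs


# Hypothetical 2x2 weight matrix and bias vector.
WEIGHTS = [[1.0, -2.0], [0.5, 0.5]]
BIASES = [0.0, 1.0]

print(dense_layer([3.0, 1.0], WEIGHTS, BIASES))
print(dense_layer([1.0, 3.0], WEIGHTS, BIASES))
```

The loop counts depend only on the shape of the weights, never on the input values, which is why a network like this completes in a fixed time frame. The inner multiply-accumulate is the matrix-math part that tensor cores are built to speed up.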

> 2. It could be their newest method does not use any kind of ML algorithm at all, but still uses tensors for acceleration. (Actually I guess that's the case. Matrix multiplications are useful for many things.)

Possibly. It's not really DLSS then. It's just a different sampling method.

3. I assume there exist low precision data type extensions for game APIs that indirectly expose tensors to any shader types.

IMO upscaling with a factor <= 2 is a (too simple) problem where handcrafted solutions should lead to better results at better performance (think about hints of temporal reconstruction, velocity vectors etc.), and Control showed this is indeed the case (or at least it was back then).

> Most ML algorithms will not branch.

But you still need to do the math operations in order and handle the data flow, both handled by CUDA cores?

> I don't think it's something they would open to developers.

Yeah, I think they don't want / need to tell how their upscaling works because it's proprietary tech of theirs.

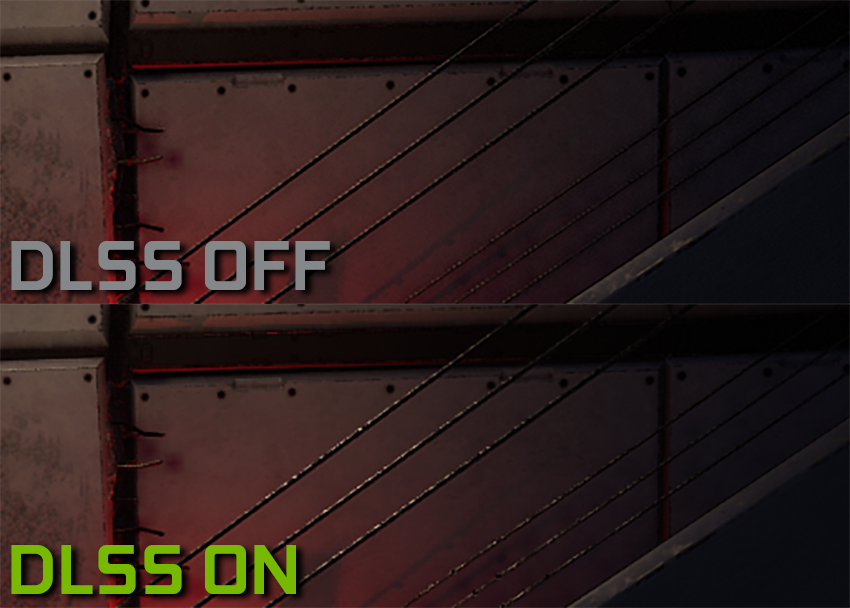

> Looks like DLSS is strong in Wolfenstein Youngblood, sometimes reconstructing some elements even better than native resolution.

This is technically impossible - you can't improve over what the artist originally intended. Even if the results appear to look better in your eyes, they're not better because they're wrong.

> sometimes reconstructing some elements even better than native resolution.

I assume it is because TAA runs at higher quality at the lower resolution than in native 4K, which would make sense because the higher the resolution, the less AA you need.

> This is technically impossible - you can't improve over what the artist originally intended. Even if the results appear to look better in your eyes, they're not better because they're wrong.

You can if the native res is combined with TAA, which doesn't represent the elements correctly. In the DF video, DLSS reconstructed a circle better than native res + TAA.

> But I don't know if TAA is used at all with DLSS, or if DLSS replaces it?

No, it's immediately disabled upon selecting DLSS, same for Variable Rate Shading.