Deleted member 13524

Guest

Regarding the RT hardware on PS5, I think we should look at what Mark Cerny is and what he isn't.

He is Sony's console system architect and a hardware enthusiast. He is not a PR guy (even though this interview was obviously a PR move).

That said, Cerny has historically been conservative when talking about big numbers and features, and focuses much more on features with significant practical results. He could have boasted to no end about the PS4 Pro's 8.4 TFLOPs of FP16, yet he only mentioned it in passing. A PR guy would have made a big deal of those almost 9 TFLOPs. OTOH, he talked a lot about async compute, which other developers later confirmed as very important for consoles.

If he mentioned RT by name, then one of two things is happening:

1 - They're using a "pure compute" RT implementation that is very efficient and performant;

2 - There's dedicated hardware for RT in the PS5 (but not necessarily in all Navi chips) that makes RT very efficient and performant.

There's also the fact that AMD always gave really diplomatic answers whenever interviewers asked when they'd get RT hardware like Nvidia's RTX line.

The easy way out would have been "Nvidia's RTX is too slow for proper RT" and "there are virtually no games using it". Yet all they said was "yeah, RT is really cool and we're working with devs to make it right".

That left me with the impression that they didn't want to trash-talk RT, to avoid compromising products coming in the near future (PC or console).

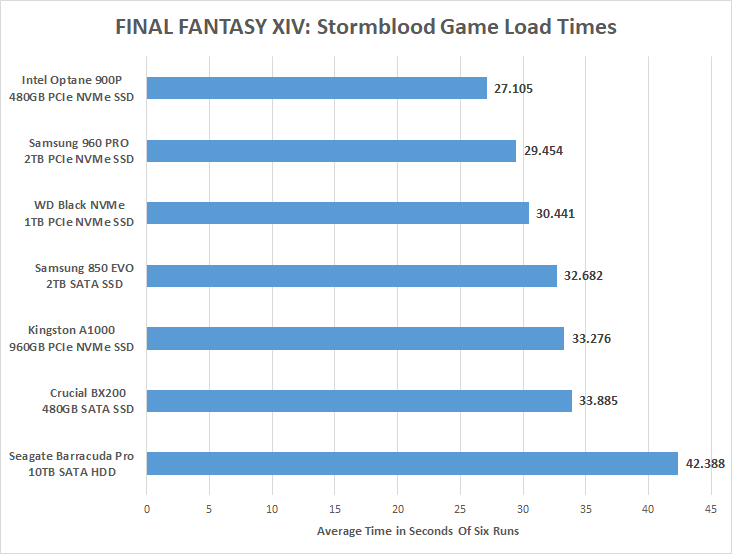

And I don't see Cerny cheating by using a patched game, which would also defeat the purpose of his demonstration and make it invalid. He has never done anything remotely similar in the past.

I have little doubt that the Spider-Man demo was patched to take advantage of the faster storage, at least as far as streaming content to RAM/VRAM is concerned.

About 2 years ago I upgraded my system to 64GB of RAM (quad-channel DDR3 at 1600MHz on an X79 platform). Using a ~40GB RAM drive, I get up to 7.5GB/s in synthetic benchmarks regardless of file size.
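For context, here is a quick sketch of how that 7.5GB/s compares with the raw memory bandwidth of such a setup. It assumes "1600MHz" means DDR3-1600 (1600 MT/s) and the usual 64-bit width per DDR3 channel; the variable names are just for illustration.

```python
CHANNELS = 4
BUS_WIDTH_BYTES = 8        # each DDR3 channel is 64 bits wide
TRANSFERS_PER_S = 1600e6   # assumption: "1600MHz" means DDR3-1600 (1600 MT/s)

# Theoretical peak bandwidth across all channels, in GB/s
peak_gbps = CHANNELS * BUS_WIDTH_BYTES * TRANSFERS_PER_S / 1e9
ramdrive_gbps = 7.5        # synthetic benchmark figure quoted above

print(f"theoretical peak: {peak_gbps:.1f} GB/s")
print(f"RAM drive reaches {ramdrive_gbps / peak_gbps:.0%} of that")
```

So even the RAM drive sits well below what the memory itself could deliver on paper, which already hints that software overhead matters more than the raw medium.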

Back then I thought if I ran my games from the RAM drive, I'd get instantaneous loading times.

In practice, I didn't. At least not with the games I tried back then, which were AFAIR Witcher 3, Dragon Age Inquisition and Dying Light, among others.

Turns out most games, when paired with very fast storage, seem to be bottlenecked by the CPU instead. Even worse, some games like Dying Light seemed to use a single, hopelessly overloaded CPU thread to decompress/decrypt the data into RAM, so when playing multiplayer I'd often wait longer than my friends who had faster CPUs and slower SATA SSDs.

So just throwing faster storage into the mix isn't enough to make loading times that much faster.
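A back-of-envelope sketch of why that happens, assuming loading behaves like a two-stage pipeline (read, then decompress) where the slower stage sets the pace. The 5GB load size and the 0.3GB/s single-thread decompression rate are made-up illustrative figures, not measurements from any of the games above; 0.55GB/s is a ballpark for a SATA SSD and 7.5GB/s is the RAM drive figure quoted earlier.

```python
def load_time_s(data_gb, storage_gbps, decompress_gbps):
    """Estimated load time when reading and decompressing overlap:
    the slower of the two stages dominates."""
    read_s = data_gb / storage_gbps
    decompress_s = data_gb / decompress_gbps
    return max(read_s, decompress_s)

DATA_GB = 5.0          # hypothetical amount of data per load
ONE_THREAD_GBPS = 0.3  # hypothetical single-thread decompression rate

sata = load_time_s(DATA_GB, 0.55, ONE_THREAD_GBPS)
ramdrive = load_time_s(DATA_GB, 7.5, ONE_THREAD_GBPS)
print(f"SATA SSD: {sata:.1f} s, RAM drive: {ramdrive:.1f} s")

# Same RAM drive, but decompression spread over 8 threads (ideal scaling assumed)
ramdrive_8t = load_time_s(DATA_GB, 7.5, 8 * ONE_THREAD_GBPS)
print(f"RAM drive, 8 threads: {ramdrive_8t:.1f} s")
```

With these numbers the SATA SSD and the RAM drive finish at the same time, because both are waiting on the single decompression thread. Only once the decompression side gets faster does the storage speed start to show.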

The demo is probably using the faster storage together with some other optimizations, like GPGPU decompression/decryption, or many CPU threads, perhaps with dedicated instructions.
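As a rough illustration of the "many CPU threads" option, here is a minimal sketch of chunked decompression on a thread pool. It uses zlib purely as a stand-in codec, and the function names and chunk size are hypothetical; this is not a claim about what Sony, the Spider-Man developers, or any of the games mentioned actually do. The key idea is that each chunk is compressed independently, so chunks can be decompressed in parallel (CPython's zlib module releases the GIL while decompressing, so threads can genuinely overlap here).

```python
import os
import zlib
from concurrent.futures import ThreadPoolExecutor

def compress_chunks(data, chunk_size):
    """Split data into fixed-size chunks and compress each one independently,
    so that each can later be decompressed on its own thread."""
    return [zlib.compress(data[i:i + chunk_size])
            for i in range(0, len(data), chunk_size)]

def decompress_parallel(chunks, workers=None):
    """Decompress independent chunks on a thread pool and reassemble in order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in input order, so the join is correct
        return b"".join(pool.map(zlib.decompress, chunks))

asset = os.urandom(1024) * 4096              # 4 MiB of stand-in "asset" data
chunks = compress_chunks(asset, 256 * 1024)  # hypothetical 256 KiB chunk size
restored = decompress_parallel(chunks, workers=os.cpu_count())
print(len(chunks), "chunks, round trip ok:", restored == asset)
```

The trade-off is that independent chunks compress slightly worse than one big stream, which is the price for being able to spread the work across cores.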

Though I wouldn't call that "cheating". Cheating would be e.g. using lower quality assets that would mean less data to transfer, or keeping those assets in the RAM without telling the journalist. I don't think he did that.