Been a long time since I posted here - which is an understatement - but I really like the idea behind this card. It reminds me of when the Voodoo 1 came out. To me it looks like the start of something profound, but the question will be how readily game engine developers and Nvidia's drivers will be able to make this magic happen. I wonder if it will be three years before it's commonplace, or much faster, and how much smarter drivers will lift performance, beyond what initial reviews show, in games that can offer substantial ray tracing, AI, or physics benefits. I have no way of knowing if drivers in a year or two will markedly improve gaming performance over the first stable driver for this card. It makes me ponder what else will happen here. Well done Nvidia for moving boldly!

Nvidia Turing Product Reviews and Previews: (Super, TI, 2080, 2070, 2060, 1660, etc)

- Thread starter Ike Turner

Babel-17

Veteran

The lack of performance leaks is surprising. I fully expected once the driver was out in the wild it would eventually spread to folks who had access to hardware and cared nothing for NDAs.

Is the driver installation process authenticated somehow? I know you have to login to download the driver but does the installation process also require a connection to nvidia?

Grain of salt:

Edit: Well this is embarrassing. It seems that this youtube group also has weeks old mock-ups of face-offs involving 20 series cards.

One more. It shows more games for the 2080.

Babel-17

Veteran

"I'm calling fake on all of them. In the 2080 videos they have 2080 VRAM utilization over 8GB in most games..."

That got pointed out in all the videos (there are more). Here's the reply, fwiw.

this value means RAM, not VRAM.

Kind of odd that value is displayed, but it would take a very sloppy faker to not know about the difference in vram amounts. Still, yeah, grain of salt.

This group has been around a while, so that counts for something.

https://www.youtube.com/channel/UCIdTXcXLwVUwcEer7rRSv3A/videos

I'm calling fake on all of them. In the 2080 videos they have 2080 VRAM utilization over 8GB in most games... and none of the clips shown for the 2080 TI series reflect the actual FPS improvement... it's footage that was all obviously captured on the same gpu.

The results seem plausible to me.

That's the idea when things are faked... you get enough information to where you can plausibly fake scores so people believe them.

I don't think it's very plausible that in Star Wars BF2 the 2080ti only gets 3 fps more than the 1080ti... while in Battlefield 1 the 2080ti gets almost 30fps faster...

Babel-17

Veteran

Good point. The only thing I can say, while not defending the videos, is that it was an odd scene to benchmark, there's not much going on. It's good that you brought this up as it made me look for the 2080 results vs. the 1080 ti, and for that they use a much more energetic scene.

So I don't know what to say. That's an odd reviewing choice, to use different scenes. Maybe they only had the cards for a short time, but they had time to make videos with their rushed benchmarking recordings? As to the perceived frame rates, we don't know how they recorded and encoded the videos. But that does add another question mark.

They've been around for a while (and they don't seem to be getting ad revenue Edit: now I'm seeing an ad on an older video), so while the oddities to be found (and there's at least one more*) suggest a typical pre-review embargo fake, I'll spend some time looking them over.

*In Rainbow Six Outbreak they have the 2080 collapsing in comparison to the 2080 ti, only 46 fps vs. 150 fps.

It's in another video, but if these are fakes I'd rather not have totally mucked up this thread with bogus material, so I won't post it. It's on their videos page though.

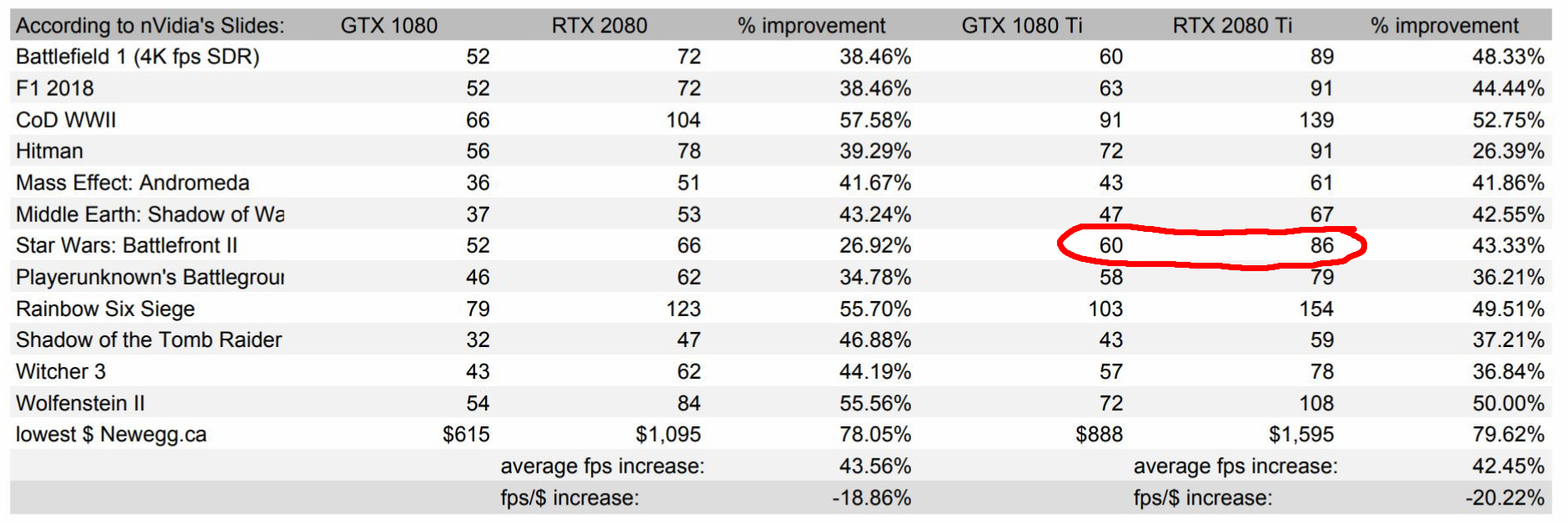

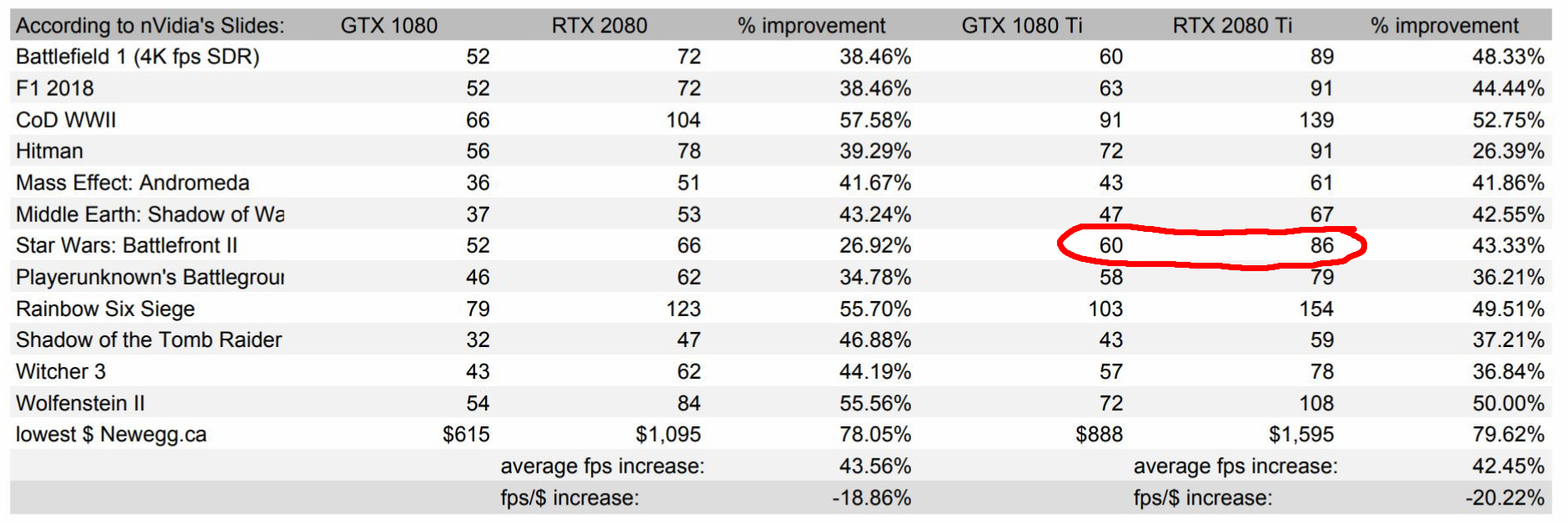

Here's a look at what Videocardz posted from Nvidia's own slides apparently:

It shows the 2080ti at 26fps faster than the 1080ti in Star Wars BF2.

Then there's Middle Earth Shadow of War... in the video the 2080ti scores 64fps vs 58 from the 1080ti...6fps difference. In this chart the 1080ti scores 47... and the 2080ti scores 67.. 20fps difference.

The numbers in the chart seem much more plausible to me.

Edit/ Babel I saw your post, and yea I agree. Look at the 2080 number for Rainbow 6 Siege.. the 2080 shows 123fps.. and not the 46fps of the video..

Now obviously different scenes will stress and potentially bottleneck gpus differently.. but these discrepancies are too extreme to ignore. You can't be using different scenes from games on different gpus and expect a meaningful comparison lol.. if that's what they're doing haha
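To put numbers on how far apart the two sources are, here is a rough sketch in Python using only the figures quoted in this thread. The helper name `uplift_pct` and the variable names are made up for illustration, and this says nothing about which source is right; it only shows the size of the gap.

```python
def uplift_pct(new_fps, old_fps):
    """Relative gain of new_fps over old_fps, in percent."""
    return (new_fps / old_fps - 1.0) * 100.0

# Middle-earth: Shadow of War figures as quoted in this thread
video = {"1080ti": 58, "2080ti": 64}   # from the YouTube video
chart = {"1080ti": 47, "2080ti": 67}   # from the Videocardz chart

video_gain = uplift_pct(video["2080ti"], video["1080ti"])
chart_gain = uplift_pct(chart["2080ti"], chart["1080ti"])
print(f"Shadow of War, 2080 Ti over 1080 Ti: video +{video_gain:.1f}%, chart +{chart_gain:.1f}%")

# Rainbow Six Siege on the 2080: chart value vs. video value
r6_ratio = 123 / 46
print(f"R6 Siege, 2080: the chart figure is {r6_ratio:.2f}x the video figure")
```

So the same card pairing shows roughly a 10% lead in one source and over 40% in the other, which is a lot to blame on scene choice alone.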

Well this is embarrassing. It seems that they also have weeks old mock-ups of face-offs involving 20 series cards, one is from September 2. I'll add a disclaimer to the videos I posted. Should I delete them, while providing an explanation?

Edited to add the date.

Yea I'd leave them along with the edit. You stated to take them with a grain of salt to begin with. There's only a few more days until it's all put to rest anyway

Babel-17

Veteran

Feeling less embarrassed/alone in being taken in now. https://hardforum.com/threads/rtx-2080-ti-vs-gtx-1080-ti-benchmark.1968026/

It also made it into a thread at guru3d. We were all expecting reviews, and it's ~~Russia's~~ nVidia's fault.

lol, either I'm not the only one taken in, or the videos are based on real numbers. On that older video the robot lady voice did make a disclaimer that the numbers were taken from what nVidia had published. I was just put off by the video giving the impression it was based on real-time measurement. So maybe they got hold of leaked numbers and made these videos. I'd still be put off by them giving the impression that they were captures. That is not reassuring. Though if the numbers are real I'll be grateful.

Meh, I'm retired, and waiting on any news. I pre-ordered an MSI 2080 Trio (it's a beast/heavy enough that I'm concerned about sag) that had been very briefly available. I got a notification that it had come back in stock, and on sale for 800 dollars, at Amazon (plus 5% back with Prime, but I have to pay tax). The cancellation window is narrow.

I'm not a fan of pre-ordering, but I still remember being pissed that I didn't jump on the AMD HD 5850 card when it first came out as prices soon soared. On the other hand, I made out like a bandit by waiting on the GTX 970s as by doing so I ended up getting an EVGA SSC that was a revised version that had the cooling fixed, came with a free backplate (I had to put it on), had been marked down a lot, and came with an excellent free game (The Witcher 3?). After some pleading Newegg even gave me the other free game that later became available. It was that messed up Batman game, and to make up for that Warner gave us all the entire series for free. Quite the deal! Edit: And I got that less than 4 GB of full speed memory fiasco rebate check from nVidia for the 970. Sweet card. It's now sitting in the case of the lady who worked with me on a political campaign. I'm on a GTX 1070 FE. I almost felt guilty for applying for that rebate, I didn't want to wait on Newegg getting around to handling it, but that became a non-issue when I paid full price for the 1070 FE, lol.

I am very curious how the 2080 vs. 1080 Ti comparison will look. For reference, here is a rather unsophisticated comparison of how previous "x80 vs. y80 Ti" match-ups looked, derived by taking the results from the first 5 game tests on Anandtech at 4K and 1440p resolutions, as well as price and power numbers.

As you can see, while the 980 offered only a single-digit average performance increase over the 780 Ti in these tests, both the price and the power consumption dropped by 21%. Pascal, on the other hand, aided by the 16nm FinFET process, had a commanding, almost 30% performance lead over the previous generation Ti while also bringing a very respectable 18% decrease in power consumption; a combination that in my opinion easily justified the 8% price increase. By comparison, early 2080 leaks seem to indicate a high single/low double digit performance increase over the 1080 Ti, paired with a flat price and unknown power consumption. If power use remains flat as well and the leaks are accurate, it would be a comparatively disappointing launch, combining the low performance increase of Maxwell with the flat/increased price of Pascal and none of the efficiency improvements that both had in spades.

Some input from the MSI Afterburner dev on the Nvidia scanner thing: https://forums.guru3d.com/threads/rtss-6-7-0-beta-1.412822/page-68#post-5585936

I shared my impressions about NVIDIA Scanner technology from a software developer's point of view. Now I'd like to post my impressions from an end user and overclocker POV.

I was never a real fan of automated overclocking, because reliability was always the weakest spot of overclocking process automation. NVIDIA Scanner is not a revolution like many newsmakers are calling it, simply because different forms of automatic overclocking have already existed in both NVIDIA and AMD drivers for a couple of decades, if not more (for example NVIDIA has had it inside since the CoolBits era, AMD had it in Overdrive). However, it was more of a toy and marketing thing, ignored by serious overclockers because everybody was used to the fact that traditionally it crashed much more than it actually worked. Different third party tools also tried to implement their own solutions for automating the process of overclocking (the best of them is the excellent ATITool by my good old friend w1zzard), but reliability of the result was also the key problem.

So I was skeptical about the new NVIDIA Scanner too and had serious doubts about including it in MSI Afterburner. However, I changed my mind after trying it in action on my own system with an MSI RTX 2080 Ventus card. Yes, it is not a revolution, but it is an evolution of this technology for sure. During approximately 2 weeks of development, I ran a few hundred automatic overclocking detection sessions. None of them resulted in a system crash during overclocking detection. None of them resulted in wrongly detecting abnormally high clocks as stable ones.

The worst thing I could observe during automatic overclocking detection was a GPU hang recovered during the scanning process, and the scanner was always able to continue scanning after recovering the GPU at software level and lower the clocks until finding a stable result. In all cases it detected a repeatable overclock of approximately +170MHz on my system, resulting in the GPU clock floating in the 2050-2100MHz range during 3D application runtime after applying that overclock. Even for the worst case (i.e. a potential system crash during overclocking detection) the Scanner API contains recovery mechanisms, meaning that you may simply click "Scan" one more time after rebooting the system and it will continue scanning from the point before the crash. But I simply couldn't even make it crash to test such a case, so I emulated it by killing the OC scanner process during automatic overclocking detection. So the embedded NVIDIA workload and test algorithms used inside the Scanner API look really promising to me. And it will be interesting to read the impressions of other overclockers and RTX 2080 card owners who try NVIDIA Scanner in action in the coming days/weeks.

Babel-17

Veteran

Wow, nice to hear that about the software, but it's impressive to hear about such speeds, especially from MSI's Ventus. https://videocardz.net/msi-geforce-rtx-2080-8gb-ventus

Babel-17

Veteran

On my 1070 FE I've been using undervolting for a long time now, and I generally get speeds close to 2050 MHz in some games. Though I do use adaptive v-sync, so that often gives the card opportunities to not run full out. If it's running full out for a prolonged period of time then the core clock will droop, at times even below 2000 MHz. Custom fan profile, it hits 100% at 72 C. I don't hear the card while gaming.

Anyway, even the more popular review sites have pretty much given up on overvolting the 10 series as being important, so I don't think this will be a big deal as long as nVidia didn't get overly cautious.

A1xLLcqAgt0qc2RyMz0y

Veteran

NDA for reviews ends at what time tomorrow?

Babel-17

Veteran

A poster at guru3d said they read a tweet, or something like that, that claimed 6:00 A.M. PST. So nine in the morning for those of us on the east coast of the USA.

DavidGraham

Veteran