EMIB (Embedded Multi-die Interconnect Bridge) is the small silicon bridge Intel uses to provide a very wide bus between the HBM2 stack and the Vega M GPU in Kaby Lake G.

For GPU-CPU communication they use a much simpler and comparatively anemic PCIe 3.0 x8 link.
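To put "comparatively anemic" in numbers, here is a rough back-of-the-envelope comparison. It is a sketch under stated assumptions. It assumes a single 1024-bit HBM2 stack at 1.6 Gbps per pin, which is the figure usually quoted for Vega M GH. It also assumes a PCIe 3.0 x8 link at 8 GT/s per lane with 128b/130b encoding. Both results are peak figures (per direction for PCIe), not measured throughput.

```python
def dram_bandwidth_gbs(bus_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s of a parallel DRAM interface."""
    return bus_bits * gbps_per_pin / 8

def pcie3_bandwidth_gbs(lanes: int) -> float:
    """Peak per-direction bandwidth in GB/s of a PCIe 3.0 link.

    Each lane runs at 8 GT/s and uses 128b/130b line encoding.
    """
    return lanes * 8.0 * (128 / 130) / 8

# Assumed figures: one HBM2 stack, 1024 bits wide, 1.6 Gbps per pin.
hbm = dram_bandwidth_gbs(1024, 1.6)
pcie = pcie3_bandwidth_gbs(8)

print(f"HBM2 over EMIB: {hbm:.1f} GB/s")
print(f"PCIe 3.0 x8:    {pcie:.2f} GB/s per direction")
print(f"Ratio:          {hbm / pcie:.0f}x")
```

Even allowing for the assumptions, the memory path is more than an order of magnitude wider than the CPU link.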

If console makers went back to a discrete GPU and CPU instead of an SoC (they probably won't), I doubt they would use EMIB for that bus.

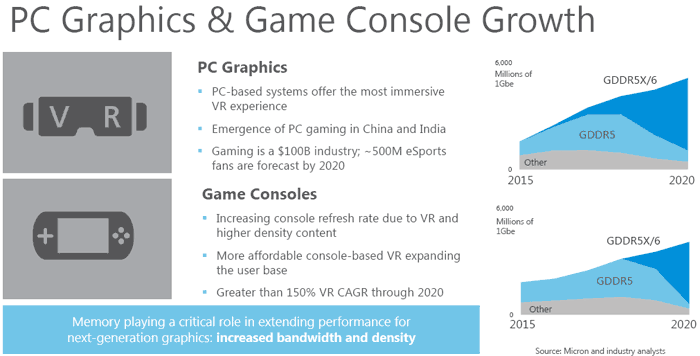

Micron seems awfully optimistic in those slides if they think HBM (within "others") will lose half its market share by 2020.

SK Hynix and Samsung might have a different opinion on that.

Image search shows me those slides are from early 2017. Perhaps they already knew they had bet on the wrong horse (HMC, the Hybrid Memory Cube) when they made those slides, and were trying to trick investors with those forecasts.

The iPhone's average selling price has been steadily increasing over the years, with Apple keeping the 1-year-old and 2-year-old models in production as lower-cost alternatives. The first model launched at $500, and its closest competitor was the ~$730 Nokia N95, which had arguably lower specs.

If the first iPhone had launched at $800, no one would have touched it with a ten-foot pole. Believe it or not, the iPhone started with a pretty aggressive price, before it gained brand recognition.

Like Apple with the first couple of iPhone generations, Microsoft was in no position to ask 25% more than the competition, considering it had the lower-performing console.

As pointed out yesterday, back in 2013 Sony actually switched the RSX's memory pool from GDDR3 to GDDR5 and halved the bus to 64-bit in the process.
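The trade-off in that switch is simple arithmetic. Peak bandwidth is bus width times per-pin data rate, so halving the bus only preserves bandwidth if the per-pin rate doubles. This minimal sketch assumes the commonly quoted launch figure for the RSX's 128-bit GDDR3 pool, which is 1.4 Gbps per pin (22.4 GB/s). The actual clocks of the later GDDR5 revision are not given here, so the code only computes the rate that revision would need.

```python
def bandwidth_gbs(bus_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin rate (Gbps) / 8."""
    return bus_bits * gbps_per_pin / 8

def required_rate_gbps(target_gbs: float, bus_bits: int) -> float:
    """Per-pin rate (Gbps) needed to reach target_gbs on a bus_bits-wide bus."""
    return target_gbs * 8 / bus_bits

# Assumed launch configuration: 128-bit GDDR3 at 1.4 Gbps per pin.
gddr3_bw = bandwidth_gbs(128, 1.4)

# Per-pin rate needed to keep the same bandwidth on the halved 64-bit bus.
gddr5_rate = required_rate_gbps(gddr3_bw, 64)

print(f"128-bit GDDR3: {gddr3_bw:.1f} GB/s")
print(f"64-bit bus needs {gddr5_rate:.1f} Gbps per pin to match")
```

Under that assumption, the 64-bit bus needs about 2.8 Gbps per pin, which is well below typical GDDR5 data rates. That headroom is what makes the narrower bus viable.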

By transitioning to 4× 16 Gbit GDDR6 chips, the PS4's and PS4 Pro's SoC+RAM could look like this:
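A quick sanity check of the 4-chip layout, written as a rough sketch. It assumes each GDDR6 chip has a 32-bit interface and runs at 14 Gbps per pin, which is a common GDDR6 speed grade. The reference figures are the commonly quoted peak bandwidths of the PS4 and PS4 Pro, 176 GB/s and 218 GB/s respectively, both over a 256-bit GDDR5 bus.

```python
CHIPS = 4
DENSITY_GBIT = 16      # capacity per chip, in gigabits
BITS_PER_CHIP = 32     # GDDR6 chip interface width (2 x 16-bit channels)
GBPS_PER_PIN = 14.0    # assumed speed grade

capacity_gb = CHIPS * DENSITY_GBIT / 8          # gigabits -> gigabytes
bus_bits = CHIPS * BITS_PER_CHIP
bandwidth_gbs = bus_bits * GBPS_PER_PIN / 8     # peak GB/s

# Commonly quoted peak bandwidths of the current consoles (256-bit GDDR5).
REFERENCE_GBS = {"PS4": 176.0, "PS4 Pro": 218.0}

print(f"{capacity_gb:.0f} GB on a {bus_bits}-bit bus, {bandwidth_gbs:.0f} GB/s peak")
for console, ref in REFERENCE_GBS.items():
    print(f"vs {console}: {bandwidth_gbs / ref:.2f}x")
```

Under those assumptions, four chips keep the current 8 GB capacity and at least match either console's bandwidth, while using half the bus width. Half the bus means far fewer traces to route, which is what makes a much smaller board plausible.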

They could make a tiny motherboard out of it.