Next Generation Hardware Speculation with a Technical Spin [2018]

- Thread starter Tkumpathenurpahl

- Tags

- 5 bytes

- Status

- Not open for further replies.

When I say portable I mean the Surface line. When we say portable here, are we talking about a console, or about having the Surface line include the hardware and VM partitions to make it play the games natively (as far as the game is concerned)?

I think the idea of an Xboy is too far a reach for MS as a good business case anytime soon.

What about a 7 or 8" Surface tablet with mountable controls? 'Native' play need only be Windows gaming and Play Anywhere titles, which already run on x64 PCs and so would run on the device. For gamers it'd just be a title from their library - they don't care what architecture it's compiled for so long as it runs.

In the wake of the Switch's success, I wish MSFT and Sony would revisit the handheld market with what seems to be its new paradigm:

no longer pocketable by any means.

home console gameplay, more or less.

Now I think that older considerations (wrt this market) still hold true regardless of Nintendo's success.

A handheld has to be affordable, so that means rational hardware choices, not fans' sweet dreams. Nintendo has success with the Switch, BUT they still have a decent fan base and it is their only system at the moment. As it succeeds it attracts geeks with buying power, and games follow in a virtuous loop. No competition in sight.

Outgunning the Switch and easing cross-platform development should not be hard, even at a "decent" price (thinking of the overhead of the complex controls Nintendo includes and the assembly costs). Yet it would be about providing customers with a proper value proposition (that is, if you want to make significant money and reach decent volume). I would not forget the early sales of the 3DS and Vita (at their release prices).

I can only see this done through ARM or Intel. I would like to see an all-ARM (IP) SoC including some new AI cores to deal with post/image processing and whatever else they are suited for. Now that's the geek factor; truth be told, I would not be surprised if a proper Goldmont+ Intel SoC or newer could prove a better bet:

* Sony and MSFT can leverage the software effort they sank into the home consoles (emulators, OS/services, non-gaming apps, non-demanding games, etc.)

* Intel CPUs are far from bad, be it their Atom or mainline CPUs. I thought the line was dead, but Goldmont+ should get close to ARM's competing IP (leaving Apple aside).

* Their GPUs are decent, they are backed by an impressive memory controller, and by themselves they seem pretty bandwidth efficient.

* Be it CPU or GPU, their products are evaluated more seriously than most ARM/mobile parts (sustained use, be it PC productivity software benchmarking or proper PC game benchmarks). People like to bash Intel, but we know where their products "stand" in perf (true for Nvidia and AMD, but a lot less true for a lot of mobile tech).

Definitely, an OTS (unreleased) Intel Atom quad core (6-10 W) linked to 4GB of DDR4 through a single memory channel, while not the geek's wet dream, could prove one hell of a (potentially) affordable system.
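To put a rough number on what a single DDR4 channel would offer, here is a minimal sketch of the usual peak-bandwidth arithmetic (transfers per second times bus width times channel count). The speed grades are assumptions, since the post does not name one, and `peak_bandwidth_gbs` is a name made up for this illustration. These are theoretical peaks, not sustained figures.

```python
def peak_bandwidth_gbs(mega_transfers_per_s: float,
                       bus_width_bits: int = 64,
                       channels: int = 1) -> float:
    """Theoretical peak memory bandwidth in GB/s (10^9 bytes per second)."""
    bytes_per_transfer = bus_width_bits / 8
    return mega_transfers_per_s * 1e6 * bytes_per_transfer * channels / 1e9


# Assumed DDR4 speed grades (not stated in the post); one 64-bit channel.
for grade in (2133, 2400, 2666):
    print(f"DDR4-{grade}, single channel: {peak_bandwidth_gbs(grade):.1f} GB/s")
```

Under these assumptions a single DDR4-2400 channel peaks at about 19.2 GB/s, and the CPU and GPU would have to share it.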


I was pretty positive they would launch in 2020 but thinking about it what kind of performance boost or cost reductions would they get for waiting the extra year? Maybe launching 2019 and being first to market would have a much bigger impact.

TSMC will increase EUV use in their 7nm node, improving performance a bit (think in the 10% region), and hopefully lowering costs slightly.

Their 5nm fab is scheduled to start producing in volume in 2020, although I doubt that we’ll see anything but Apple and smaller mobile players producing on the node until well into 2021.

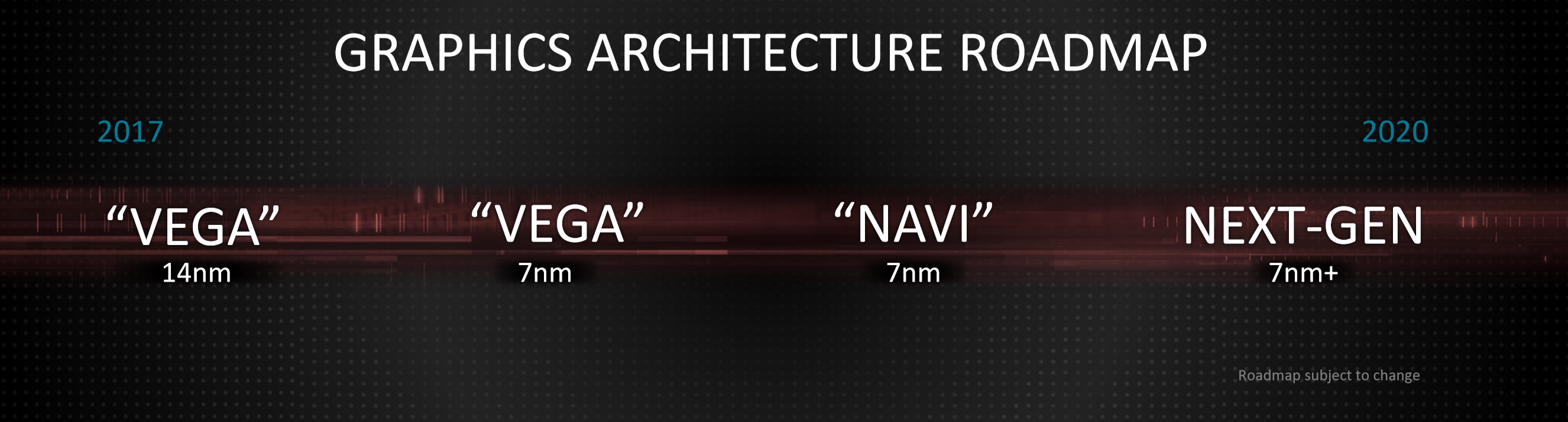

Because supposedly in 2020 they'll have access to a new "next-gen" GPU architecture instead of the old GCN 6 found in Navi.

https://wccftech.com/amd-new-major-gpu-architecture-to-succeed-gcn-by-2020-2021/

Tkumpathenurpahl

Veteran

I was pretty positive they would launch in 2020 but thinking about it what kind of performance boost or cost reductions would they get for waiting the extra year? Maybe launching 2019 and being first to market would have a much bigger impact.

It depends on GlobalFoundries and TSMC, and how quickly they can reach high volume production at 7nm. It might be viable next year, it might be the year after.

We know that AMD will use one of them to manufacture Vega on that node by the end of this year, albeit at low volume. We also know that Zen+ is due to release this April on a 12nm node.

That's the last news I read about manufacturing processes reaching that 7nm holy grail.

Zen 2 and Navi are both designed around 7nm, but their release dates haven't been announced. They're rumoured to release next year, but, even if that's the case, that won't necessarily translate into the kind of volume production needed for a console.

So it could go either way, but I hope that everything comes together for a 2019 launch.

The jump from 7nm to 7nm+ will improve size, cost, and clockspeed. Some people argue that the latter will be the right process on which to launch the next generation. I'm of the opinion that releasing on 7nm, and transitioning to 7nm+, affords an easy move to cost reduction within the first year of the console being on the market.

Yes... end of 2018 till end of 2019 in the case of another beefed-up PS4 (a kind of Pro 2 with maybe 16 GB and 16 Jaguar cores to give it life span)... This makes the strongest economic sense... Of course, as soon as 7nm is affordable... IMHO

I don't see what the point would be in releasing a ProPro approximately 2 years after the Pro, and within 2 years of launching the next generation. They'd make it more difficult for themselves to differentiate the PS5 from their most recent PS4, they'd upset every Pro owner, and they'd squander newer, more powerful hardware on running PS4 games.

I can imagine slimmer versions, this year, from a more mature fabrication process, but not an improved Pro. There's already one of those: the Xbox One X.

Chances are that 7nm won't be very cost effective without EUV. So a next gen before 7nm+ would cost a lot per mm2?

It's phenomenal that they continued to shrink feature sizes down to 7nm with a 193nm light source. I don't clearly understand how interferometry works, but they said the multi-patterning rocket surgery thing means cost per mm2 rises significantly using these tricks.
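For a feel of how a higher wafer cost turns into cost per die, here is a minimal sketch using the common dies-per-wafer approximation for a 300 mm wafer. Every number in it is hypothetical: the wafer prices, the die area, and the yield are placeholders chosen for illustration, not figures from any foundry.

```python
import math


def dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """Common approximation: gross dies by area minus a wafer-edge loss term."""
    radius = wafer_diameter_mm / 2
    gross = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(gross - edge_loss)


def cost_per_good_die(wafer_cost: float, die_area_mm2: float,
                      yield_fraction: float) -> float:
    """Wafer cost spread over the dies that actually work."""
    return wafer_cost / (dies_per_wafer(die_area_mm2) * yield_fraction)


# Hypothetical inputs: a 350 mm2 console APU at 70% yield, with a made-up
# cheaper wafer price and a made-up pricier multi-patterned wafer price.
for label, wafer_cost in (("cheaper wafer", 10_000), ("pricier wafer", 13_000)):
    cost = cost_per_good_die(wafer_cost, die_area_mm2=350, yield_fraction=0.7)
    print(f"{label}: about {cost:.0f} per good die")
```

With die area and yield held fixed, cost per good die scales linearly with wafer cost, which is why a pricier process has to deliver enough density to shrink the die before it pays off.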


Incorporating the CPU and GPU on the same die within a console provides a myriad of benefits. Finding a company that has proficiency in both CPUs and GPUs, can put them on the same die (APU), and is willing and able to supply them in sufficient quantity and within the constraints of the lower margins required for the console space, is a tall task. Accordingly, it is no surprise that Sony and Microsoft both went with AMD this gen for these chips. In 2013, that meant Jaguar. There are good things that can be said about Jaguar. It checked some important boxes (cheap, low power) and was available from the same manufacturer as the GPU.

Remember when the specs were revealed for PS4 and XBONE? Despite the fact that there was literally no other viable option and that it made sense for all the reasons above, the CPUs in these consoles were a disappointment. Jaguar’s reveal in both next gen consoles immediately meant lowered expectations for CPU centric gaming implementations for a generation of titles. As we know, this spills over to PC gaming as well. Making a PC game engine with core game play mechanics requiring a more powerful CPU, and thus not possible on consoles, would be a poor business decision as the installed base of consoles is too large to ignore. The reveal of Jaguar instantly meant that the high end CPU in your PC gaming rig would be underutilized for the better part of a decade.

Part of launching a new console generation is taking advantage of the “free lunch” that tech, as an industry, offers. The next consoles will utilize things like HDMI 2.1 and USB 3.2, for example. Console manufacturers did not have to create these things; they are just part of the ever-evolving tech universe. A free lunch. The same idea generally holds true for the silicon. They can take advantage of the advancements in GPU, CPU, and memory technologies that the industry has been working on (and spending billions of dollars of R&D on) since the last console launch. AMD made a significant advancement with Zen. [How about that for an understatement?]

Thank you to those who persevered through my rehashing [of my admittedly neophyte understanding] of the fundamentals of the console industry. Sometimes it is helpful to revisit the basics before going forward.

... another beefed up Ps4 (a kind of pro2 with maybe 16 gb and 16 jaguars core to give it life span)...

We are (finally) on the cusp of moving forward from Jaguar's stranglehold on progress. You have frequently posted about Jaguar and its potential inclusion in consoles moving forward. Why?

...I keep coming back to a 2 tier launch, and wondering how it could best be handled...

Consoles have inherent advantages over PCs and vice versa.

PCs offer flexibility, versatility, customization, upgradability, etc. You get to choose how much GPU/CPU you want, you can upgrade components anytime you want and at your discretion. Spend a lot, spend a little; it is all up to you.

Consoles offer standardization. Game developers are able to optimize their games to specific hardware. They further improve on these optimizations as the hardware remains static for a period of time. This allows consoles to punch above their weight. Mid gen updates water down this value proposition. Proposed “tiered launches” water down this value proposition.

Another potential advantage consoles have over PCs is the opportunity to take a “clean slate” every once in a while. With PC, every component has to work with existing legacy components. New CPUs have to be able to run the same code as old CPUs. Everything “new” has to work with everything “old”. Consoles get the opportunity at a new generation to start over. What is the best thinking for designing a device to do what we want it to do based on the information we have today?

Backwards/forwards compatibility waters down and potentially destroys this advantage of consoles. How can you make the best possible device today if you also have to keep an eye on making sure it works with yesterday’s games? With mid gen consoles (and forward compatibility) how can developers be expected to make the best possible next gen game when they are bound by the significant installed base of last gen consoles and their potential customers?

There are posts advocating backwards compatibility, proposed multi-SKU launches with varied hardware (CPU, GPU, RAM, etc.), and mid gen updates. These posts tend to act as though these items are "free" and a clear "win" for the console consumer, as if BC were a checkbox and having BC were clearly superior in every way to not having it. In my view, backwards compatibility and creating the best possible next gen system are mutually exclusive. It is simply not possible to have both.

Again, sorry for being long winded and revisiting some of the most basic of concepts. Where have I gone wrong? What will change moving forward to make these ideas less relevant?

Tkumpathenurpahl, how do you feel that multiple SKUs are more important than standardization?

I'd love to see a journalist interview some game engine developers and ask what they would have preferred in terms of CPU/GPU/memory balance. With mid-gen, would anyone have preferred to sacrifice, say, 25% of the GPU for 2 more CPU cores? Or for more RAM? Has the opinion against split RAM changed since 2006?

Niebotskick

Newcomer

RAMming higher quality assets into memory is something a game developer can never get enough of.

HBRU

Regular

I understand that in a console environment, with a unified memory pool and greatly optimized code, most of Ryzen's benefits over Jaguar are lost... But Ryzen uses a lot more silicon, which could be better utilized... That's why I keep on saying Jaguar will be used, maybe an improved version, OK... https://www.realworldtech.com/jaguar/

HBRU

Regular

Also, Jaguar's 40-bit memory address space means less data moving around... so saving bandwidth... A lot of existing code is highly optimized for this CPU... https://www.realworldtech.com/jaguar/7/
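For scale, here is a minimal sketch of how much memory a given number of address bits can reach. It only shows the size of the address space; it makes no claim about bandwidth. `addressable_bytes` is a helper name made up for this illustration, and the 36-bit and 48-bit rows are included purely for comparison.

```python
def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses for a given address width."""
    return 1 << address_bits


GIB = 1 << 30  # bytes per GiB

# 40 bits is the figure from the post; the other widths are for comparison.
for bits in (36, 40, 48):
    print(f"{bits}-bit addresses: {addressable_bytes(bits) // GIB} GiB")
```

A 40-bit address covers 1024 GiB (1 TiB), far more than the 8 to 16 GB being discussed for consoles in this thread.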
