Sigh, it says a lot when a debate comes down to a 'Strawman' accusation.

Almost everyone with a PS4 Pro and a 4K TV is playing their games in 4K mode, which makes them the majority of people with a 4K 60Hz monitor.

Besides, people with a 4K monitor are interested in the higher detail. If their concern was response times and framerates they'd rather go with a 1080p 120Hz panel which is a lot cheaper.

So by your logic, a lower framerate that's good enough for one game must always be good enough for all other games.

Strawman right back at you.

You do know that Eurogamer, in comparing the PS4 to the PS4 Pro, actually says the games they tested are more playable at the higher fps than at 30fps, and that it is worth it in quite a few of them?

Again, if 4K monitor buyers want detail over performance, as you say, then they should not be purchasing 60Hz monitors...

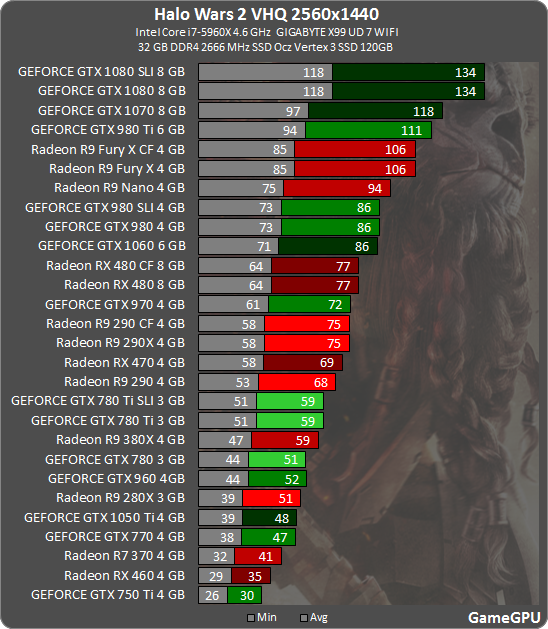

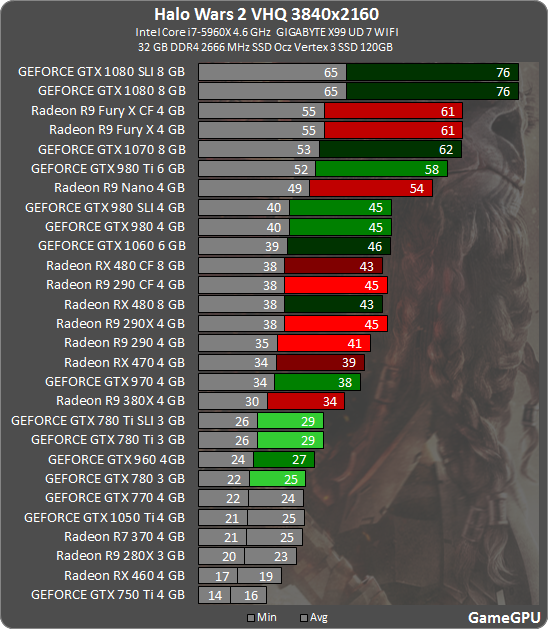

If you say they should lower settings to get close to 60fps, then that just reinforces my point about the 33fps-38fps test results and using them as evidence of how well the FuryX performs (we do not know whether any of the post-processing effects reduce the cards' performance, so this could change with lower settings).

By my logic, I am just pointing out that it is meaningless to conclude much from a 4K result in a game when it is at a setting that will never be used; as you said, you would cap to 30Hz, and then the FuryX/1070/1080 are all at parity.

Nice of you to throw in a Strawman accusation (usually I see this thrown around in tech-engineering audio forums, where it comes from entrenched positions) rather than discussing this in a better way.

In the same way, I do not bother arguing about FuryX and 1070 performance at 1080p, as these cards are not really designed for that, even though that scenario is more likely than your 4K one, since some people want to get as close to 144Hz as possible in their games.
