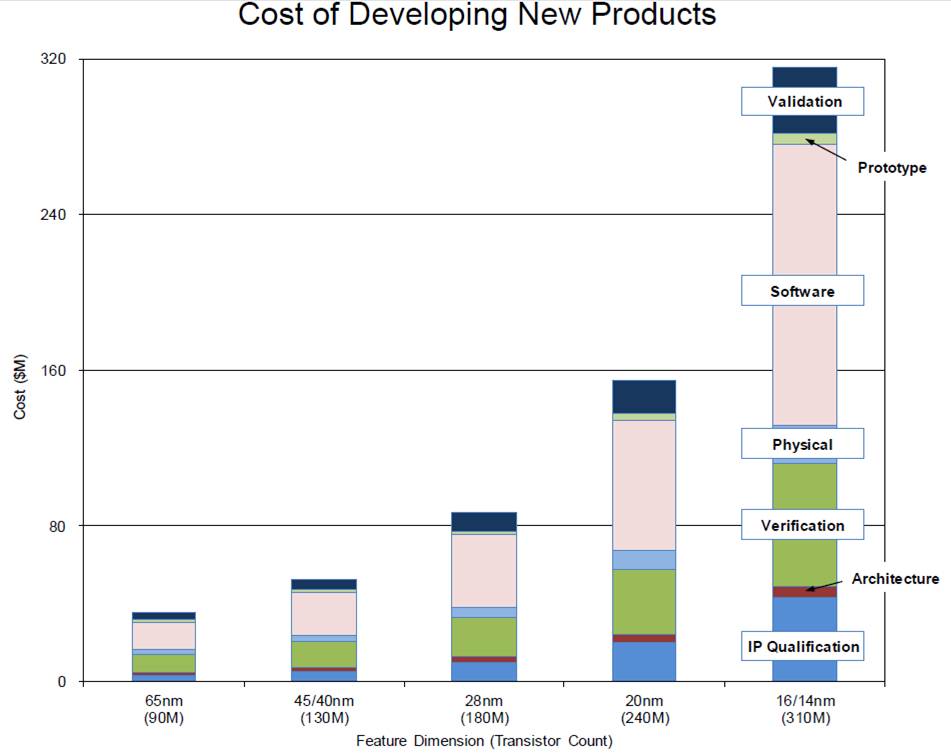

I don't think Polaris is profitable at this point and when you add in development costs, I don't think it ever will be.

The last time we had cards this cheap on a new node with a comparable size was the 3870 and 3850, and those were 220 and 180 dollar cards. Those cards were 20 percent smaller and on a vastly cheaper node. Add in inflation over well over 8 years, and it doesn't make sense that the RX 480 and the newly demoted RX 470 (the cut-down Polaris) are 200 and 150 respectively. And it all has to do with costs. Let's look at wafer cost first.
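To put a rough number on the inflation point, here is a minimal sketch that compounds the 3870 and 3850 launch prices forward. The flat annual rate and the helper name `adjust_for_inflation` are assumptions for illustration only, not figures from this post; swap in a real CPI figure if you have one.

```python
def adjust_for_inflation(price, years, annual_rate):
    """Compound a launch price forward by a flat annual inflation rate."""
    return price * (1 + annual_rate) ** years


# Launch prices quoted above, in dollars.
old_prices = {"3870": 220, "3850": 180}

# ASSUMPTION: a flat 2% per year over 8 years, purely illustrative.
ASSUMED_RATE = 0.02
YEARS = 8

for card, price in old_prices.items():
    adjusted = adjust_for_inflation(price, YEARS, ASSUMED_RATE)
    print(f"{card}: ${price} then is roughly ${adjusted:.0f} now")
```

Whatever rate you pick, the adjusted prices only move further above the 200 and 150 being asked for the new cards.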

Of course these are first-run wafer costs, but the point still stands. If AMD ever had a time to raise graphics card prices, it would have been completely justified this time around. But for some reason, they have never been cheaper. Add in development costs as seen below, and Kyle Bennett might be right that AMD's entire graphics product stack might have collapsed, not because they are bad cards, though, but because they are unprofitable.

So why did AMD price their cards so low, then? The answer is competition.

The 1070, with its supposed 379 dollar price, is a price killer for AMD, at least for marketing purposes. If the 1070 is 35-40% faster than an RX 480, then the 200 and 229 prices weren't consciously chosen price points for AMD. They were forced upon them. This is because Nvidia is simply the stronger brand and has the greater market share. This means that at similar price points and similar price-to-performance, Nvidia will take market share away from AMD. If AMD priced the RX 480 at 300 dollars, it would be a repeat of Tonga vs the GTX 970/980 as far as market share bleed goes.
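The price-to-performance argument can be sketched as simple arithmetic: at what price would the RX 480 merely match the 1070 on performance per dollar? This is a rough sketch using only the 379 dollar price and the 35-40% lead quoted above; the helper name `parity_price` is made up for illustration.

```python
def parity_price(rival_price, rival_speedup):
    """Price at which the slower card matches the rival's performance per dollar.

    rival_speedup is the rival's lead as a fraction, e.g. 0.35 for 35% faster.
    """
    return rival_price / (1 + rival_speedup)


GTX_1070_PRICE = 379  # the supposed price quoted above

for speedup in (0.35, 0.40):
    price = parity_price(GTX_1070_PRICE, speedup)
    print(f"1070 {speedup:.0%} faster -> RX 480 parity price about ${price:.0f}")
```

On these numbers parity lands at roughly 270 to 280 dollars, so an RX 480 at 300 would be worse price-to-performance than the 1070 before brand strength even comes into it.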

Going by the slides shown to us in January, I think AMD wanted to price this chip in the 350 range, because AMD initially indicated that as the sweet spot range. That was also the price of Pitcairn, which again was made on a cheaper node and was smaller.

Hopefully, for AMD's sake, the gm206 arrives late and Nvidia's pricing isn't aggressive.