Forgive my lack of faith in Global Foundries.

Anyways, just a random idea.

Haha, touché. I am slightly hopeful, since they're using Samsung's R&D.

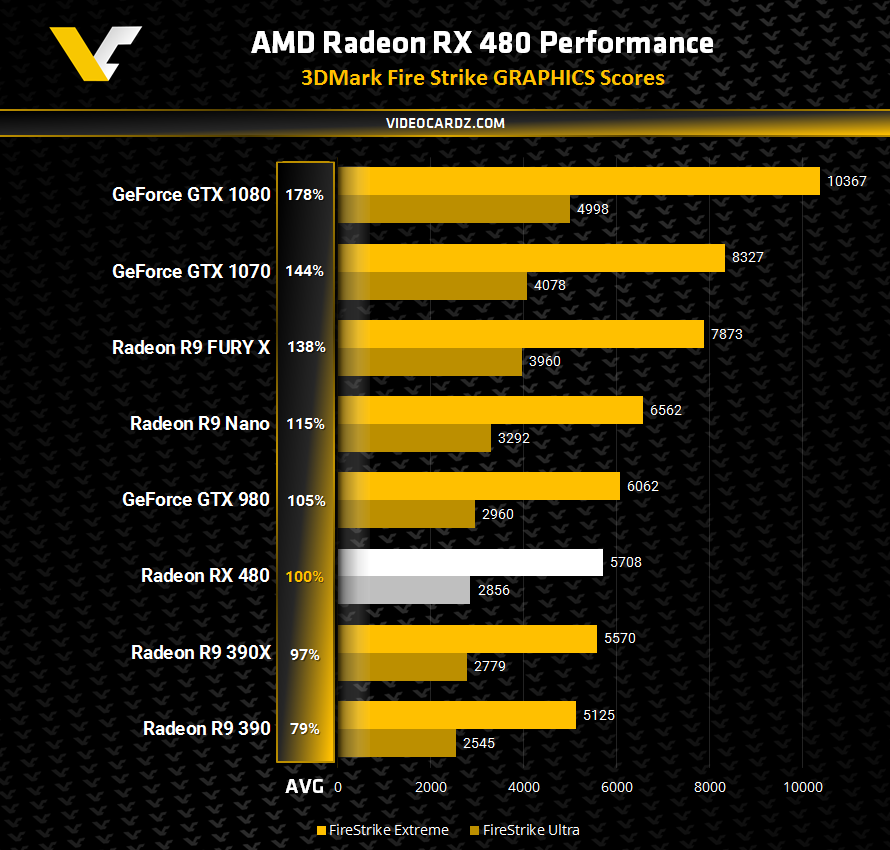

According to most leaks, P10 seems to score around 390/390X level in benchmarks. RX 480 is probably also the full P10 chip, which makes it a great upper mid-range card at a very good price.

But what about the high end?

Is RTG's plan to leave the performance/high end completely to Nvidia for a whole 6 months?

Or maybe the GP104's performance took them by complete surprise?

Performance/watt seems to be the main focus, not necessarily performance.

The current rumours suggest Vega will be pushed out early, in October.

Hard to say if it was set like that from the start, or if they initially believed that HBM2 would be available sooner and had expected to be able to release a "high end" Polaris + HBM2, or Vega and Polaris in a shorter time frame.

IF (and that's a big IF) GF 14nm can clock as high as the 1080, I don't really see any reason not to release a "slightly bigger" chip first (based on Vega or Polaris) with high clocks, as a gaming part. But if they had planned to use HBM2 only, well, this could explain the delay.

Storage fees? They're just setting the standard usable production at fewer functional CUs. If more work, then so be it - laser cut. It's not like it hasn't happened before.

AMD is intent on doing a price war of sorts, remember. More chips that can be used for a product line now. When fully enabled parts cross some yield threshold (or they're looking at the next year's product line-up), then they'll have something to show to OEMs or to folks holding off.

There's already a rumour of "10nmFF" being not much of an upgrade as well. For all we know, it's gonna be another 4-5 years to hit 7nm, and we don't know what sorts of things AMD is planning to do to GCN in the interim. Stretching out the Polaris 10/11 masks for products over the years might be useful for their er... bottom line/budgets.

Those figures do not align well with Videocardz, who state they received their info from an AMD partner and would be either at correct reference clocks or slightly higher.

So who do you trust more, Videocardz or Wccftech, and whose figures are closer to what has come out of AMD?

390 should be 89%

Cheers

I'm pretty sure we'll know the real deal after Apple has updated their machines with Polaris GPUs. Then we'll see if they're getting the 36 CU part, or if it's like Tonga, where they got the first full shader parts.

Not selling things you have is a pretty poor way to make money.

Any idea which article?

Reason I ask is that, going through an article on Anandtech I found, the load power of a custom AIB OC 270 is only 257W against 267W for an AMD reference 270X.

In Furmark the custom board OC 270 is 33W less.

Like you I trawled various reviews, but they make it hard to get a true like-for-like from one reviewer.

Hmmm, I honestly can't find it again. It had both a reference 270 and reference 270X, IIRC. I'm guessing I saw it when I was looking up various other card combinations and found it strange. Then again, that plays right into what Dave said, in that there is much higher variability in the salvage chips than in the full chips used for the higher models.

Another example I ran across while looking for the article again: R9 280 consumes 14 watts less than R9 280X. R9 290 consumes 6 watts more than R9 290X.

Yeah, my apologies. Either I thought I saw something that I didn't see, or that was one obscure video card review that I can't find again.

Regards,

SB

Next year? You mean fall? Small Vega should hit in the fall, which would negate the impact of a 480X with 40 CUs. If it existed, I could see them announcing it in the fall with the Vega series?

What if AMD is saving fully enabled parts for next year?

i.e.

480X - 40CU

480 - 36

470X - 32 ???

470 - 28 ???

460X - 20 ???

460 - 16

They'd maybe get more yields out of introducing non-X parts now, and then when the node matures, they can bring out fully operational chips.

One might say it was the reverse of 28nm strategy (aside from tonga situation).
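For what it's worth, the salvage idea in that lineup can be put into numbers with a quick Python sketch. It only shows what fraction of the die each speculated SKU would have enabled. The CU counts are the guesses from the list above; the assumption that a full Polaris 10 has 40 CUs and a full Polaris 11 has 20, and the mapping of each SKU to a die, are hypothetical and not confirmed by anything in this thread.

```python
# Speculative lineup from the post above. Nothing here is confirmed by AMD.
# Assumption: a full Polaris 10 die has 40 CUs and a full Polaris 11 die has 20.
FULL_DIE_CUS = {"Polaris 10": 40, "Polaris 11": 20}

# (SKU, assumed die, speculated CU count). The SKU-to-die mapping is a guess.
LINEUP = [
    ("480X", "Polaris 10", 40),
    ("480", "Polaris 10", 36),
    ("470X", "Polaris 10", 32),
    ("470", "Polaris 10", 28),
    ("460X", "Polaris 11", 20),
    ("460", "Polaris 11", 16),
]


def enabled_fraction(die, cus):
    """Fraction of the full die's CUs that a SKU would have enabled."""
    return cus / FULL_DIE_CUS[die]


def lineup_fractions():
    """Map each speculated SKU to its enabled-CU fraction."""
    return {sku: enabled_fraction(die, cus) for sku, die, cus in LINEUP}


if __name__ == "__main__":
    for sku, frac in lineup_fractions().items():
        print(f"{sku}: {frac:.0%} of CUs enabled")
```

Under those assumptions the non-X parts would all be salvage dies with 70-90% of their CUs active, which is the point of the "more yields now, full chips later" argument.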

View attachment 1342

Why are people so caught up on the SteamVR score? They used drivers from January for the test, and the test has a variation of 20-30% between runs on the same system. I think the only reason AMD put that out was to prove it was VR ready, but the score isn't indicative of performance. Based on AMD's benchmarks with the 470, it's between 1.6x and 1.75x the 270X, depending on whether you include Hitman in the average.

Based on this performance summary, that puts the 470 at 290 to 390 performance, which means the 480 has to be higher than that.

It also puts performance per watt at 20% better than the 1080 in the best case, if the 2.8x holds up.

Yes, but you don't really spend 200 dollars expecting to play at 4K, do you?

Probably because the card is pitched towards affordable VR - and I think it will provide that, but at the same time people may be having high expectations for high resolution performance in general.

I have the distinct feeling this card will be ROP limited at 4K and VR if the 6.3 score is any indication - perhaps even raw bandwidth limited.

These will IMO shine at 1080p, but beyond that they may be a bit iffy and not compare so favourably to the old Hawaiis.

As for old drivers - that's fine, but most of the data for Hawaii cards consistently hitting well over 7 is on old drivers too.

Well, VR has really high requirements.

Where is that "100W range" thing coming from?