Orion

So, which is it, 150 watts or 100? (And why is there such a range?)

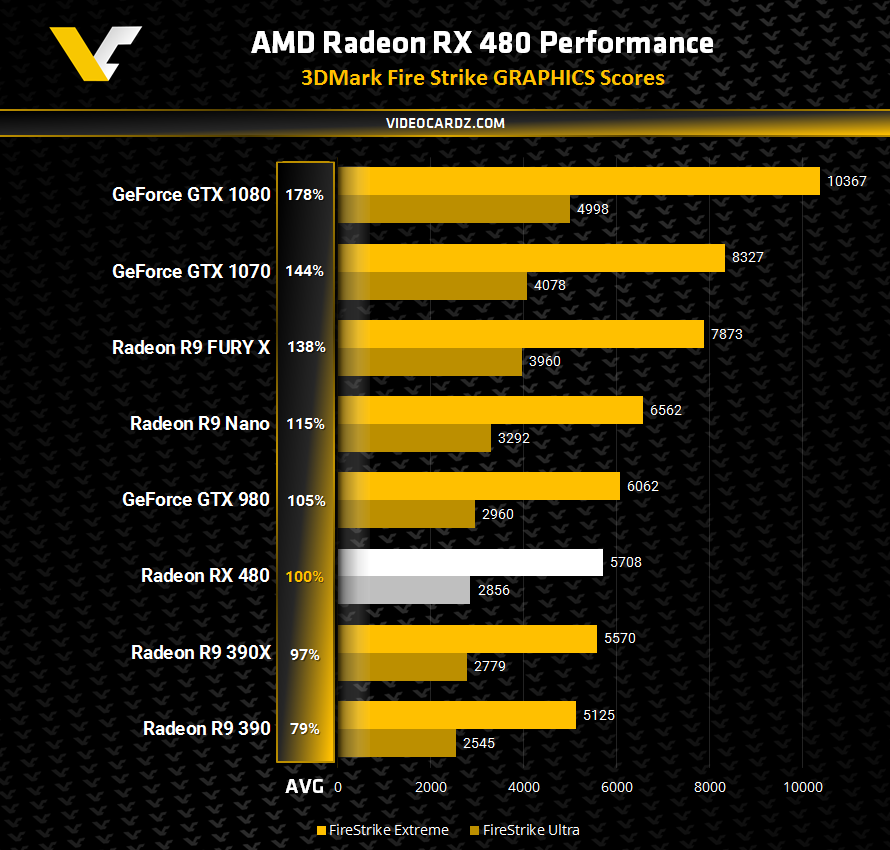

If it's drawing 100 watts while running 3DMark Fire Strike Ultra, the RX 480, normalized to the 1080's TDP of 180 watts, gets a benchmark score of 6,000 while the 1080 gets 5,000. If it's pulling 150 watts, the normalized score drops to 4,100. Why is there such a huge range, how is that even possible, and is it PR bullshit? I dunno, but there's the math of it.
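
For anyone who wants to redo the arithmetic, here's a rough sketch of that normalization in Python. It assumes performance scales linearly with power draw, which real cards don't do, so treat it as back-of-the-envelope only. The raw Fire Strike Ultra score isn't quoted above, so the sketch backs it out of the 6,000-at-100-watts figure; the function names are made up for illustration.

```python
REFERENCE_TDP_W = 180.0  # GTX 1080 TDP, as quoted in the post


def normalize_score(raw_score, power_draw_w, reference_tdp_w=REFERENCE_TDP_W):
    """Scale a raw benchmark score to what it would be at the reference TDP.

    Assumes score scales linearly with power (a simplification).
    """
    if power_draw_w <= 0:
        raise ValueError("power draw must be positive")
    return raw_score * reference_tdp_w / power_draw_w


def implied_raw_score(normalized_score, power_draw_w, reference_tdp_w=REFERENCE_TDP_W):
    """Invert the normalization to recover the raw score it was built from."""
    if reference_tdp_w <= 0:
        raise ValueError("reference TDP must be positive")
    return normalized_score * power_draw_w / reference_tdp_w


if __name__ == "__main__":
    # Back out the raw RX 480 score implied by "6,000 at 100 watts".
    raw = implied_raw_score(6000, 100)
    for watts in (100, 150):
        print(f"{watts} W -> normalized score {normalize_score(raw, watts):.0f}")
```

Run that way, the 150 watt case lands at about 4,000 rather than 4,100, so the numbers in the post were presumably rounded from a slightly different raw score.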

Intriguing. Whichever figure is true, I wonder how much efficiency it will lose once clocks are raised. The article in question also seems to hint at an 8-pin + 6-pin card, which would suggest it can at least run high enough to require that much power.