A bunch of startups have been working on similar stuff as well. So far it is only for inference. No doubt custom silicon will come eventually as well, but the technical hurdles for that should be quite a bit higher.

When Google's monthly revenue is more than NVidia's annual revenue, ASICs become possible and fucking about with GPGPU becomes history.

The Google IO keynote must have put a lot of people on notice about the possibilities that lie ahead. The total market could be pretty large. It will be interesting to see who'll take the biggest pieces of the pie.

With the mobile phone SoC space consolidated and on a predictable trajectory, this is finally a new application where a silicon startup (or big company) can make a big splash, especially because the design effort isn't that high: the same logic copied many times over.

It was apparently indicated to be using 8-bit integer operations.

http://www.nextplatform.com/2016/05...ional-route-homegrown-machine-learning-chips/

At least in that regard, there was some notice prior to this announcement.

GP100 is apparently going to support 8-bit ops, which coupled with its AI initiatives indicates they were moving in the direction of this at least as far back as Pascal's design was being laid down.

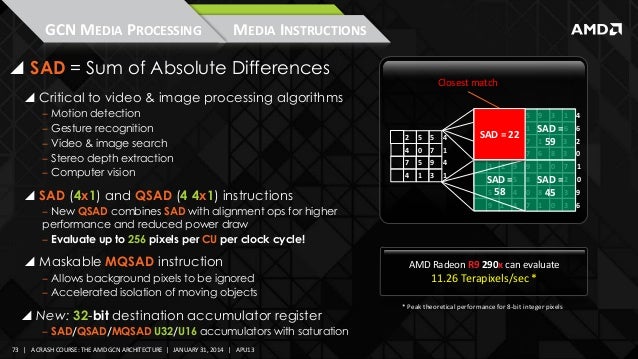

AMD has in more recent GCN iterations included the ability to manipulate register data at byte granularity, although perhaps that was targeting something other than machine learning like signal analysis?

Indeed, this Google TPU is meant for power-efficient inferencing, i.e. the part where the neural network is actually used.

A processor that can accelerate neural network training has vastly different requirements, such as high-precision floating point, fast inter-process communication and high memory bandwidth.

None of this is required for inferencing (not even memory bandwidth, if the compressed neural network fits on chip).

So Nvidia doesn't need to be too concerned yet about demand for the P100 for training purposes.
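
To make the 8-bit point concrete, here is a minimal sketch in Python of how an inference-time dot product can be run on 8-bit integers. It is an illustration only, not a description of how the TPU works: the symmetric scaling scheme and the names `quantize` and `int8_dot` are assumptions made up for this example.

```python
def quantize(values, bits=8):
    """Symmetric linear quantization of floats to signed integers.

    Hypothetical helper: returns the integer codes and the scale needed
    to map them back to real values.
    """
    qmax = 2 ** (bits - 1) - 1          # 127 for 8 bits
    peak = max(abs(v) for v in values)
    scale = peak / qmax if peak else 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def int8_dot(weights, activations):
    """Dot product computed on 8-bit codes with a wide integer accumulator."""
    qw, sw = quantize(weights)
    qa, sa = quantize(activations)
    acc = sum(w * a for w, a in zip(qw, qa))   # pure integer arithmetic
    return acc * sw * sa                        # rescale once at the end


# Made-up example data, not taken from any real network.
weights = [0.5, -1.0, 0.25]
activations = [1.0, 2.0, -4.0]

exact = sum(w * a for w, a in zip(weights, activations))
approx = int8_dot(weights, activations)
print(exact, approx)
```

With these made-up numbers the integer path lands within a few hundredths of the floating point result, which is the kind of tolerance inference can often live with.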

GeForce GTX 1060 Possibly Spotted In Shipment Tracking

http://www.guru3d.com/news-story/geforce-gtx-1060-possibly-spotted-in-shipment-tracking.html

The GeForce GTX 1060 will likely be based on the ASIC called GP106. If the listing in Zauba is to be believed, then Nvidia will use a 256-bit GDDR5 memory controller for this mainstream product. This is similar to the GTX 1070 and 1080. Though this remains speculation, it is expected to have 1280 CUDA/shader cores.

Just to add, as it fits with this: Google is also one of the clients for the P100.

Cheers

It's the top and the bottom that matter. 3rd party open air coolers typically don't use the mounting hole on the right, since they're one flat heatsink extending to the end of the card (and often beyond). It's really only for blowers, which need it to secure the far end of the shroud.

Do you still have your GTX 1080? Would it be possible to use GPUView to get information about Pascal's behaviour with async compute?

itsmydamnation

Veteran

Now that some more details are getting published, it looks like the 1080 is pushed just that inch too far (in terms of clock rate). It will be interesting to see if reviewers got golden samples or just regular cards. Presenting it as if a realistic Founders Edition clock is 2.1GHz, and then having clocks over time look like:

http://www.gamersnexus.net/guides/2441-diy-gtx-1080-hybrid-thermals-100-percent-lower-higher-oc-room

http://hardocp.com/article/2016/05/17/nvidia_geforce_gtx_1080_founders_edition_review/5#.Vz-MWZF94VB

Call me totally surprised at not seeing the same level of rage as with the 290X launch...

I wonder how long it will take to get custom-cooler cards without the $100 premium that will actually allow for sustained clocks...

So the next question is: does GP102 actually exist? Because I'm guessing we won't see a consumer GP100 for a while, nor will we see Vega for a while, and mid-range cards at high-end prices just aren't my thing...

Infinisearch

Veteran

Yeah, I saw that too, and was wondering if it's going to be an issue or not.

The early clock ramp and then pulling back seems reminiscent of Hawaii's method of thermal target management.

The behavior does seem to show Nvidia's chips are not going to be as consistent with boost clocks, which may show there's not as much margin as before.

That might be a consequence of the new clock/power management, which would eat into margin and open the product up to similar criticism as the 290X received from clock watchers.

I think the psychology of having a base and turbo clock, rather than an "up to" that has no minimum is different. The former gives a foundation, and then X amount of "bonus", while the latter gives disappointment in varying doses.

I'm still not sure if the cooler variability and fan control problems that afflicted the 290, or the two fan modes, or the loudness are in evidence this time.

Also, AMD may only have themselves to blame for reviewers checking how the GPUs vary their clocks and thermals over time, and for softening the PR blow for those that follow.

It also doesn't seem to be the case with the 1080 that it's consumers finding out the hard way about faults in the product, like they did with the 290 and Fury X.

Silent_Buddha

Legend

I imagine there won't be much outrage. Just like power consumption was fine even though it was quite significantly higher than AMD for quite a while. And then suddenly it was very very important once Nvidia was better at power consumption. And AA quality didn't matter at all when Nvidia weren't good at it compared to AMD and then it suddenly mattered quite a bit once they offered similar or better quality. The same goes for AF, etc. It didn't matter when Nvidia were quite late following 5870, but it suddenly mattered when Nvidia were executing better.

The internet in general has shown it is quite lenient when it comes to Nvidia outside of the occasional semi ragey hiccup that doesn't last very long.

And if this isn't an issue with OEM custom cards, will it matter anyway? I'm sure many people will forgive Nvidia for charging 100 USD extra for a card that may or may not live up to all of its specifications.

All that said, I'd still be one of the first ones buying this card if it was within my price range just because there doesn't seem to be any competition in the next couple of months. And probably bad mouthing them online if it didn't meet all the specifications.

Regards,

SB

It probably comes down to Boost3 and the fact that there is now another variable involved (voltage) in controlling fan and frequency, not just temperature.

So I see this as requiring tweaking by NVIDIA rather than a long term problem.

Why?

Because the behaviour is similar even when temperature is not an issue, and so it more than likely comes back to voltage (IMO, but time will tell) and its modifiable influence on Boost3.

Here is HardOCP's OC using max fan speed to remove thermal constraints, but doing nothing regarding voltage.

You can read more on what they did, but it shows as some other reviews have done that Boost3 is more complex than in the past, with voltage playing a more active role.

http://hardocp.com/article/2016/05/19/geforce_gtx_1080_founders_edition_overclocking_preview

Cheers

With the 290X, AMD promised a clock of 1GHz on the box. With the 1080, Nvidia promises a boost clock of 1733MHz.

Are there cases where this 1733MHz isn't reached?

Edit: never mind, I see on the later graph that it sometimes goes below 1733. But it never goes below the base clock. Wasn't it agreed that, for the 290X, AMD's mistake was to not list a base clock, and so confuse people into thinking that 1GHz was a guarantee? You can avoid that by using a base/boost combo.
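
As a rough illustration of the base/boost distinction, the sketch below buckets a clock log against the two advertised figures. The 1733MHz boost clock is the one quoted above; the 1607MHz base clock is the GTX 1080 reference spec rather than something stated in this thread, and the trace values are invented for the example, not measurements.

```python
BASE_MHZ = 1607    # reference base clock (assumption, not quoted in the thread)
BOOST_MHZ = 1733   # advertised boost clock quoted above


def summarize(trace_mhz):
    """Count how many samples fall below base, between base and boost,
    and at or above the advertised boost clock."""
    return {
        "below_base": sum(1 for c in trace_mhz if c < BASE_MHZ),
        "below_boost": sum(1 for c in trace_mhz if BASE_MHZ <= c < BOOST_MHZ),
        "at_or_above_boost": sum(1 for c in trace_mhz if c >= BOOST_MHZ),
    }


# Invented clock samples in MHz, purely illustrative.
trace = [1885, 1860, 1797, 1733, 1721, 1695, 1708, 1746]
summary = summarize(trace)
print(summary)
```

A card advertised as "up to" a single figure would only have the last bucket to be judged by, which is the point being made about the 290X.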

I'm not sure that I see that article as indicating that voltage controls fan speed.

Fan speed can influence when the temperature target is hit, but it's not going to spool up just because voltages change.

Voltage can govern the highest stable clocks that can be achieved, with the caveat that at any given clock speed higher voltage equals higher power consumption.

If the fan's speed is maxed out and the temperature target is hit, neither clock nor voltage increases will provide a benefit. The fan was manually set to 100% to push this scenario as far out as possible.

If the temperature target is not hit, but the clock and voltage curve hits the power target, then the fan can do nothing.

The overclocking attempt appears to be mostly capped by stability, although the more uniform clock graph for the overclocking attempt may mean another target is being hit. It might be the temperature target in the early part, but power limits might be contributing to some of the behavior that later doesn't match the temperature readings.
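
A toy model of the above, as a sketch only: dynamic power is approximated as k·V²·f, and the function reports which targets a given operating point hits. The constant `k`, the 180W power target and the function names are assumptions for illustration; the 82 degree temperature target is the figure mentioned elsewhere in this thread. Nvidia's actual boost algorithm is not public and is presumably more involved.

```python
TEMP_TARGET_C = 82.0     # temperature figure mentioned in this thread
POWER_TARGET_W = 180.0   # assumed power target for illustration


def dynamic_power_w(voltage_v, clock_mhz, k=0.09):
    """Approximate dynamic power as k * V^2 * f. k is a made-up constant."""
    return k * voltage_v ** 2 * clock_mhz


def limits_hit(temp_c, voltage_v, clock_mhz):
    """Return the list of targets the operating point runs into."""
    hit = []
    if temp_c >= TEMP_TARGET_C:
        hit.append("temperature")
    if dynamic_power_w(voltage_v, clock_mhz) >= POWER_TARGET_W:
        hit.append("power")
    return hit


# Cool card, raised clock and voltage: the fan cannot help here.
print(limits_hit(65.0, 1.05, 2000))
# Hot card at a modest operating point: more fan speed would help.
print(limits_hit(82.0, 1.00, 1733))
# Neither target reached: stability is what caps the clock.
print(limits_hit(65.0, 1.00, 1733))
```

In this model a faster fan only changes `temp_c`, so it can clear a temperature limit but never a power limit, which matches the argument above.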

Not really encouraging on the H2O overclocking side, but that has tended to be the norm with Nvidia cards since Maxwell. Improved max clock speeds at stock and good overclocking on air eat into the H2O clocking margin (and sadly not everyone has access to a modified BIOS for subzero cooling).

With the GTX 980 Ti, or Maxwell in general, the difference from using H2O over air was marginal (compared to older generations, let alone AMD GPUs). OK, this is one sample; some could maybe do better.

A 100MHz difference (over air) for half the temperature and without throttling (which can anyway translate into better fps thanks to the lack of throttling): that's a bit poor for an overclocker.

Let's hope TDP-unlimited BIOSes will be widely available.

That's the problem: you have a TDP limit, artificially set, even when you OC (+120%), and a temperature limit. On air you will quickly hit both (temperature first, but higher temperature increases power draw too). Under H2O you should not hit the temperature limit, and so you just hit the TDP limit first. The problem in this case is: only 100MHz more? That's cheap. So I understand why, at the presentation, he said that you could request a BIOS with a less restrictive TDP limit from them.

What worries me more in the GamersNexus test is this throttling: it looks completely uncontrolled and abnormal when overclocked.

No, it is part of the whole package: voltage is linked to the frequency in some way, as temperature is (but the temp is never above 65 in the link I gave), which is why it drops IMO (voltage profile/algorithm with frequency).

The example I provided before is with the fan set to 100%, so I am showing it is more likely you're seeing the voltage-frequency profiling of Boost3 in action, as it is nowhere near as bad as the one where they showed it at a temp of 82 degrees.

You can set an individual clock speed for each voltage level.

Quoting TPU, who also did a nice study showing the influences on boost:

http://www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_1080/29.html

"Currently, there are 80 voltage levels and you can set an individual OC frequency for each, which would make manually OCing this card extremely time intensive. That's why NVIDIA is recommending that board partners implement some sort of OC Stability scanning tool that will create a profile for you."

So to me the issue at the moment probably comes back to the voltage profile when it peaks, as HardOCP shows a similar kind of frequency control when the temperature is stable at 65 (see the chart in post 1014).

Just to add, TPU had to stop the fan to get a trend above 82 degrees.

Anyway, Boost3 is more complex than what has been implemented in the past by either NVIDIA or AMD, so I see it still requiring fine tuning, probably in how it profiles voltage-temp-offset to frequency. That is not helped by NVIDIA being too soft with the fan profile, so it hits 82 too easily if not modified.

Note it is rather dynamic, which means we can see different behaviour/trends influencing it hitting the ceiling in terms of voltage-clock per game and also per "scene" in a game.

As an example, look how it can vary by a large amount per game in the following chart at PC Games Hardware, after a 10 minute warm-up:

http://www.pcgameshardware.de/Nvidi...5598/Specials/Benchmark-Test-Video-1195464/2/

Again please note this does not necessarily reflect the maximum OC one can get on the FE design, but how it can vary by a wide margin per game and the dynamic boost hitting the ceiling at points in a game.

Cheers

Edit: to summarise:

In the past, overclocking worked by directly selecting the clock speed you wanted. With Boost, this changed to defining a fixed offset that is applied to any frequency that Boost picks.

On Boost 3.0, this has changed again, giving you much finer control since you can now individually select the clock offset that is applied at any given voltage level.
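
The difference between the two offset styles can be sketched like this. The voltage/frequency points are invented for illustration (the real card is said to expose 80 levels), and the function names are hypothetical, not part of any overclocking tool's API.

```python
# Hypothetical stock curve: voltage in millivolts -> clock in MHz.
stock_curve = {800: 1500, 900: 1650, 1000: 1800, 1050: 1860}


def apply_fixed_offset(curve, offset_mhz):
    """Older Boost style: one offset added to every point on the curve."""
    return {mv: mhz + offset_mhz for mv, mhz in curve.items()}


def apply_per_voltage_offsets(curve, offsets_mhz):
    """Boost 3.0 style: a separate offset per voltage level.

    Levels missing from offsets_mhz keep their stock clock.
    """
    return {mv: mhz + offsets_mhz.get(mv, 0) for mv, mhz in curve.items()}


fixed = apply_fixed_offset(stock_curve, 100)
tuned = apply_per_voltage_offsets(stock_curve, {800: 150, 1050: 50})
print(fixed)
print(tuned)
```

With per-level offsets, the low-voltage points can be pushed harder than the top of the curve, which is where a single fixed offset tends to run out of stability first.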

Not sure about HardOCP's conclusion, lol (since they have been fired by AMD). Anyway...

CSI, your examples are good for a card at stock, but the voltage is modified too when you overclock it. The TDP limitation is the simple explanation for this, and ultimately voltage is part of the TDP result. If their algorithm for setting the right voltage is wrong, the result is the same: they hit the TDP quicker. But we aren't talking about stock GPUs here; this is with overclocked GPUs. If this voltage algorithm has some flaw, it is not important: when BIOSes that remove the TDP limitation are out, it will go away by itself. At a 300W limit, this will not matter much. Certainly the same goes for the EVGA Superclocked, or MSI, ASUS DC xxx: it will be their BIOS that controls the TDP, if the Greenlight program allows it, of course.

If you can provide a sample that doesn't hit the TDP limit and still acts the same when overclocked (and this would be worse than our expectations), OK.
