Is UE4 indicative of the sacrifices devs will have to make on consoles next gen?

- Thread starter datadigi

Inuhanyou

Veteran

I doubt they were doing much in that timeframe. A month isn't really a lot of time to do anything.

Especially considering the games we saw weren't on that hardware at all, and they have been in development for a while, Killzone in particular for years, back when the kits were at 2 GB or something.

Bagel seed

Veteran

I would wager that even Deep Down was running on 2 GB or less. Confined cave area, RE6+ quality characters. It was impressive for other reasons (mostly lighting, DoF and cutscene-style animation, imo). And we already know something on Agni's level can be done with 1.8 GB of VRAM usage. The full ~6-7 GB used should be a revelation!

No, I'm not Mark, nor an Epic developer. Ask yourself this: would it be far-fetched for a school teacher to know her city councilman/woman? Would it be far-fetched for a city councilman/woman to know a state senator? Would it be far-fetched for a police officer to know an FBI agent? Would it be far-fetched for an FBI agent to know a CIA agent? In other words, I don't have to be in the actual hen house to know what's going on. Let's just say I'm into hardware technology.

Anyhow, I've been lurking around Beyond3D for years, but never cared to register until recently. I'm not here to fuel any one side (PC vs. PS3 vs. Xbox or Intel vs. AMD vs. Nvidia). I'm here to offer additional information, be it firsthand or secondhand. Sometimes I will have to be vague about things, just because I'm not stupid. Additionally, I will never belittle anyone here; it's not my thing. Over the years I've seen people (respected people in the industry) lose their calm over internet nonsense, taking pictures of pistols in their hands and threatening other board members.

Back on topic. I stand by all my comments... that's all I can say.

Extra memory doesn't increase computational resources or bandwidth. If you want to render more objects or particles (more object density or higher view range), you need to perform more computations. If you want to use higher resolution textures or more detailed polygon meshes, you need more bandwidth.

More memory can be used to bring more variation for textures and meshes, and bigger (persistent) areas to explore. Clarification: Bigger areas = more objects behind the corner (in the next rooms) or beyond the view distance. More objects on the screen at once would of course require more computational resources and bandwidth (so that's not possible by just adding more memory).

Higher memory capacity helps game logic code much more than it helps rendering (since streaming techniques pretty much solve the memory usage for rendering). Memory can be used to keep persistent world state intact, and to create a more dynamic, lifelike game world. However, more memory cannot be used to perform larger scale physics simulations. More simultaneously active physics bodies would require more computational resources and more bandwidth. Once the bodies have stopped, though, you can use the extra memory to store their positions (and damage, bullet holes, shrapnel and other fine details that have changed). Extra memory is a very good thing for a dynamic, fully destructible game world... as long as you make sure the player cannot destroy too much at once... or you run out of computational resources and bandwidth and the frame rate plummets.

Inuhanyou

Veteran

A lot of devs seem super excited about that, and they say it gives major longevity to the platform. I'm guessing the ability to use much higher resolution textures has something to do with that.

I mean, the very first thing you're likely to see age on consoles in comparison to the advancement of technology is low resolution assets, and it's pretty obvious for 360 and PS3 at this point that the majority of things in the game world are flat textures given the illusion of depth.

djskribbles

Legend

You work for AMD? Sony?

Bagel seed

Veteran

More memory can be used to bring more variation for textures and meshes, and bigger (persistent) areas to explore. Clarification: Bigger areas = more objects behind the corner (in the next rooms) or beyond the view distance.

Those alone I feel can give a significant step up in perceived visual quality... variation and more minute details make game worlds seem more believable. I think it's the first thing people usually notice. Hence why we're confined to corridors and repetitively styled environments in order to impress now. More polys and objects are great too but sometimes subject to diminishing returns. People can be 'fooled' more easily with texture variety I think.

So theoretically we're looking at under 6 GB/frame at 30 fps. Any comments on how that would figure into a real world engine scenario with ~7 GB available?
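The arithmetic behind that figure can be sketched quickly. This is only a back-of-the-envelope estimate: it assumes the 176 GB/s peak figure quoted elsewhere in this thread is fully achievable (it won't be in practice), and the function and constant names are made up for illustration.

```python
def gb_per_frame(bandwidth_gb_per_s: float, fps: float) -> float:
    """Upper bound on memory traffic available to a single frame, in GB."""
    return bandwidth_gb_per_s / fps


PEAK_BANDWIDTH_GB_S = 176.0  # peak figure quoted in the thread, not a measured rate
AVAILABLE_MEMORY_GB = 7.0    # the "~7 GB available" assumed in the question

budget_30 = gb_per_frame(PEAK_BANDWIDTH_GB_S, 30)
budget_60 = gb_per_frame(PEAK_BANDWIDTH_GB_S, 60)

# Fraction of the available memory that could be touched once per frame at 30 fps.
coverage_30 = budget_30 / AVAILABLE_MEMORY_GB

print(f"30 fps: {budget_30:.2f} GB per frame")
print(f"60 fps: {budget_60:.2f} GB per frame")
print(f"share of {AVAILABLE_MEMORY_GB:.0f} GB readable once per frame at 30 fps: {coverage_30:.0%}")
```

Under these assumptions a 30 fps frame cannot even read all 7 GB once, which is why most of that memory has to hold data that is not touched every frame.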

Extra memory is a very good thing for a dynamic fully destructible game world... as long as you make sure the player cannot destruct too much at once... or you run out of computational resources and bandwidth and the frame rate plummets

Agreed! I'm hoping to see more of this. Bigger, destructible sandbox levels.

A lot of devs seem super excited about that, and they say it gives major longevity to the platform. I'm guessing the ability to use much higher resolution textures has something to do with that.

sebbbi is a console dev, and he's just specifically said it's not going to lead to particularly higher resolution textures (over 4GB) because that would also require more bandwidth.

And approximation hardware, I'm guessing. Like Ubisoft for Watch Dogs, they were running it on a "comparable" PC. But there is no comparable PC with that kind of HSA APU-like architecture in combination with that kind of RAM configuration (with that large an amount of RAM reserved for both CPU and GPU at 176 GB/s). In PCs, the GDDR5 speed only goes to the GPU (since it is obviously graphics RAM), and everything else has RAM with much lower bandwidth. So really, you'd have to wait for retail units to see what it can really do if they aren't using the actual PS4 dev kits :/

Yep, and this has been borne out with every console generation. We don't get the "good" stuff until 1-2 years after a console has been released (average game development time), when developers have been handed a full retail dev kit and aren't handed final production silicon just a few months before a game is supposed to be released.

It's important to note that the PS4 is PC-like; it's not a PC. You don't treat GDDR5 the same way you treat DDR3 RAM, otherwise there's no reason Sony should have spent more for it.

sebbbi is a console dev, and he's just specifically said it's not going to lead to particularly higher resolution textures (over 4GB) because that would also require more bandwidth.

I would qualify that to 'within the same scene'. Think of two textures on opposite sides of a single wall in the same environment, of which you can only ever see one at a time. If you had enough bandwidth but not enough memory, doubling the resolution of both textures would increase bandwidth per frame 2x, but if the textures were on adjoining walls (e.g. each side of a corner), bandwidth would increase 4x.

user542745831

Veteran

Hey guys,

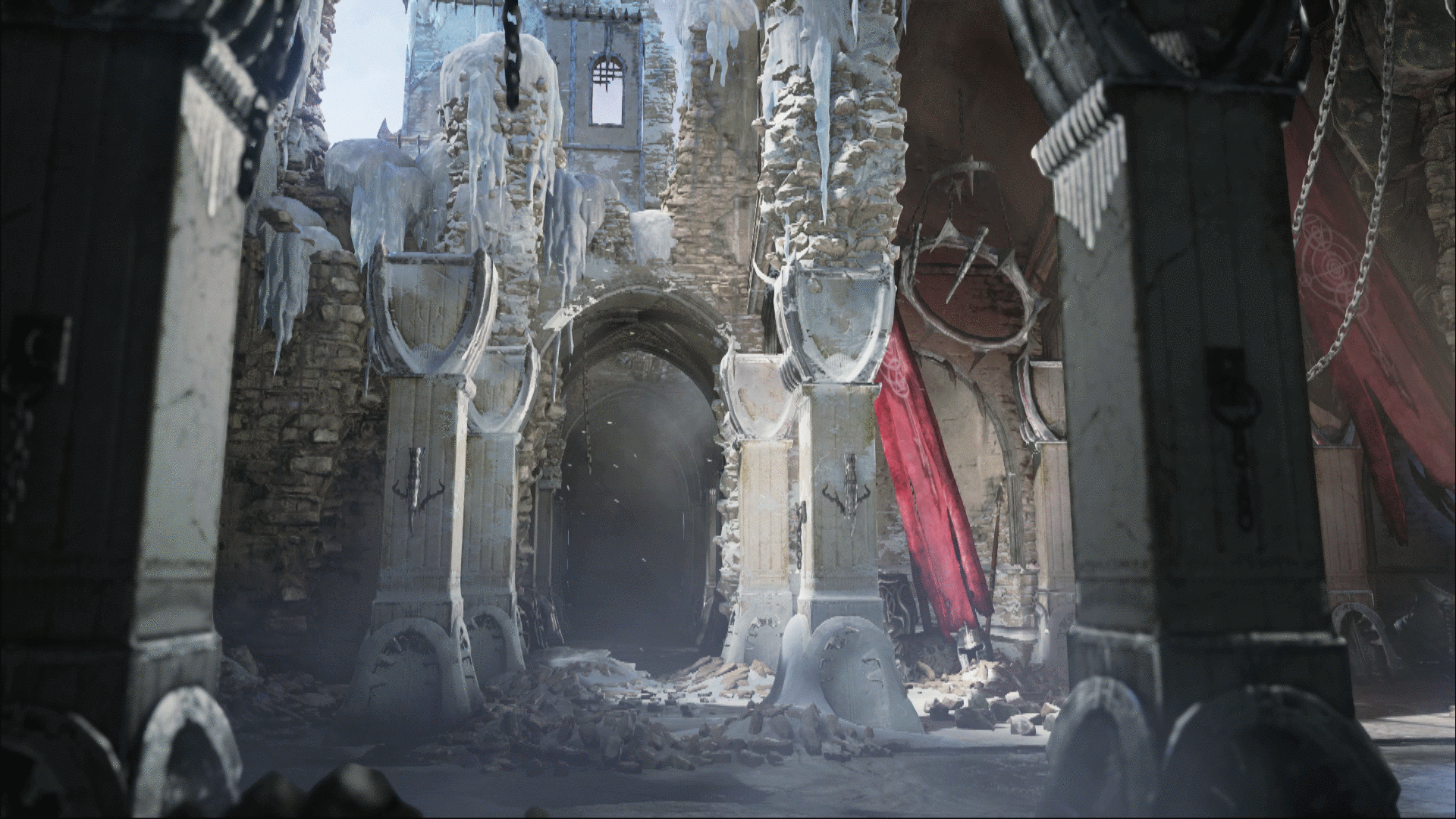

Regarding textures, I even noticed a difference in the Elemental Cinematic Part 1 demo (the GTX 680 one).

Just look at the following press screenshot that was released alongside the 911 MB 1080p video:

Now compare this press screenshot with the same scene (same frame) in the 911 MB 1080p video, available here for example:

Unreal Engine 4 - Elemental Demo

http://www.gamefront.com/files/21816579/UE4_Elemental_Cine_1080_30_H264.mov

Unreal Engine 4 E3 2012 Elemental demo trailer

http://www.shacknews.com/file/31991/unreal-engine-4-e3-2012-elemental-demo-trailer

The texture quality, and apparently the lighting as well, appear to be much better in the press screenshot than in the video (see the wall textures next to the archway, for example).

Also, among the press screenshots released alongside the video, there are another two pictures that show a difference.

One of the press screenshots shows how the scene looks in the 911 MB 1080p video (apart from the rendering resolution/supersampling):

While another press screenshot shows how the scene does not look in the 911 MB 1080p video:

The lighting in the second picture is different from the first picture, and different from the 911 MB 1080p video.

Just saying.

Do current PS4 development kits already run close to the metal, or do they run Windows + DirectX ... with initial games being released running through a DirectX emulation layer?

Isn't Sony going to be using OpenGL, or at least a subset?

I haven't really been keeping up, but if that assumption can be made there would be no need for Direct3D, as they could target OpenGL.

Sure, but I'm just looking at it from the perspective of what they could get up and running fastest ... which is probably Windows and DirectX.

Isn't Sony going to be using OpenGL, or at least a subset?

Not exactly. Sony uses libgcm (perhaps libgnm on the PS4?). They will probably build an OpenGL wrapper for indie developers at some stage; rumour is that it's not ready yet.

The earliest devkits for PS4 were leaked to be Windows boxes with the graphics emulated. Later boxes are almost certainly running 'in-place' without the Windows layer.

Both libgcm/gnm and the Durango API are thought to be substantially 'closer to the metal' than OpenGL/DirectX (although there are rumours that the Durango API may be further away from the metal than some devs would like).

Texture bandwidth usage is quite an interesting discussion on its own. As long as you have enough texture resolution to sample everything at a 1:1 texel-to-screen-pixel ratio, the bandwidth requirement stays exactly the same, no matter how big the textures are. Mipmapping takes care of this. The GPU calculates the mip level that provides the closest to a 1:1 ratio, and samples only that mip level (and the next level for trilinear blending). If there are more detailed mip levels available than are required for 1:1 mapping, they are not sampled at all. So basically, texture resolution beyond the required sampling resolution does not increase bandwidth usage.

Usually, most geometry that is further away from the camera doesn't use the most detailed mip level. Adding extra texture resolution doesn't increase the bandwidth usage in this case at all. For the geometry near the camera, the GPU would usually want to sample from a more detailed mip level than is available. If the texture resolution is not enough, we see a blurred result (bilinear filtered magnified texels). This is the case where extra texture resolution would bring improved visuals, but it is also the case where extra bandwidth must be spent to fetch those extra texels (instead of reusing the same texels again and again from the texture cache for bilinear upsampling).
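A toy model can make the mip argument concrete. This is a simplified sketch, not how any particular GPU computes level of detail: it assumes square power-of-two textures, a surface facing the camera, and a hypothetical `pixels_covered` value for how many screen pixels the texture spans along one axis. The function names are illustrative.

```python
import math


def select_mip(tex_width: int, pixels_covered: int) -> int:
    """Pick the mip level whose width is closest to 1 texel per pixel (simplified)."""
    max_mip = int(math.log2(tex_width))          # the 1x1 level
    ratio = tex_width / pixels_covered           # texels per screen pixel at mip 0
    mip = math.floor(math.log2(ratio)) if ratio > 1 else 0
    return min(mip, max_mip)


def sampled_width(tex_width: int, pixels_covered: int) -> int:
    """Width (in texels) of the mip level that actually gets sampled."""
    return tex_width >> select_mip(tex_width, pixels_covered)


# Distant surface covering 512 pixels: a larger texture does not mean more texels fetched.
for width in (1024, 2048, 4096):
    print("far ", width, "-> mip", select_mip(width, 512), "width", sampled_width(width, 512))

# Near surface covering 2048 pixels: only now does extra resolution get sampled.
for width in (1024, 2048, 4096):
    print("near", width, "-> mip", select_mip(width, 2048), "width", sampled_width(width, 2048))
```

In the distant case every texture size ends up sampling a 512-texel-wide level, so the texels fetched stay the same. In the near case the 1024 texture is magnified (blurry) while the larger textures supply, and cost, more texels.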

Adding texture resolution is not the only way to improve surface quality when you have more memory. The developer could instead choose to use the extra memory to add more texture layers or more texture decals on top of the objects (to add variety and more lifelike materials). Extra texture layers increase the bandwidth usage, since the GPU needs to access more textures for each pixel. This of course also applies to objects further away (unless the engine fades out the decals or extra texture layers in the distance). Often developers use some mix of added texture resolution and more texture layers to improve visuals when there's more memory available.

As we are in the UE4 thread, we must also discuss the SVO (sparse voxel octree) based global illumination. High resolution volumetric (3D) data structures take a lot of memory (since you have n^3 texels instead of n^2). SVOs save memory compared to traditional (dense) 3D volume textures, since they do not store empty space (only surfaces are stored). Still, they are much larger in memory than 2D textures. Bandwidth usage when sampling an SVO is also higher compared to 2D textures, but not as high as the increase in memory usage. This is one of the rendering techniques that benefits from added memory capacity. Of course you can also stream SVOs from the hard drive (if your world is mostly static), but that requires quite a fast HDD (since the amount of data is much higher compared to, for example, virtual texturing).
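To put rough numbers on the n^3 versus n^2 point, here is a small sketch. It is only an order-of-magnitude illustration: `surface_factor` is a hypothetical stand-in for how much surface a scene contains per n^2 texels (real scenes vary widely), 4 bytes per texel is assumed, and octree interior nodes and pointers are ignored.

```python
BYTES_PER_TEXEL = 4  # assumed, e.g. RGBA8


def texture_2d_bytes(n: int) -> int:
    """Memory for an n x n 2D texture."""
    return n * n * BYTES_PER_TEXEL


def dense_volume_bytes(n: int) -> int:
    """Memory for a dense n x n x n volume texture."""
    return n * n * n * BYTES_PER_TEXEL


def sparse_surface_bytes(n: int, surface_factor: float = 6.0) -> int:
    """Leaf memory when only surface voxels are stored (hypothetical surface_factor)."""
    return int(surface_factor * n * n * BYTES_PER_TEXEL)


n = 512
mib = 1024 * 1024
print("2D texture   :", texture_2d_bytes(n) / mib, "MiB")
print("sparse leaves:", sparse_surface_bytes(n) / mib, "MiB")
print("dense volume :", dense_volume_bytes(n) / mib, "MiB")
```

Under these assumptions the sparse structure sits between the two extremes: several times larger than a 2D texture of the same resolution, but far smaller than the dense volume.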

If you have various texture layers, can't you just merge them once before you render them the first time, or doesn't that work precisely because you want to keep them in memory separately? But then, if you keep them in memory separately and merge them when rendering, is that to conserve memory? Because in that case, using more memory by merging layers before rendering could decrease bandwidth use?

It depends on how you layer the textures. Your additional layers might use a different UV set, or they might be tiled, or their contribution might be controlled by blend values from vertex data. If any of those are true, then merging them would consume more memory than keeping them separate. Of course, if you're doing something like megatextures, which gives you support for fully unique textures, then you can pre-combine all you'd like. In fact this is one of the ways in which RAGE was really efficient: they never needed to sample additional texture layers in the pixel shaders.
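The tiled case is easy to illustrate with numbers. This is a minimal sketch under made-up assumptions: square textures, one unique base layer plus one detail layer that repeats `tiles` times across the surface, and a merged texture that must be large enough to keep the detail layer's texel density. The names are illustrative only.

```python
def separate_texels(base_width: int, detail_width: int) -> int:
    """Texels stored when the base and tiled detail layers are kept apart."""
    return base_width ** 2 + detail_width ** 2


def merged_texels(base_width: int, detail_width: int, tiles: int) -> int:
    """Texels stored when both layers are baked into one unique texture.

    The merged texture needs the detail layer's effective resolution
    (detail_width * tiles) to avoid losing detail.
    """
    merged_width = max(base_width, detail_width * tiles)
    return merged_width ** 2


base, detail, tiles = 1024, 512, 16
print("separate:", separate_texels(base, detail))
print("merged  :", merged_texels(base, detail, tiles))
print("ratio   :", merged_texels(base, detail, tiles) / separate_texels(base, detail))
```

With these example sizes the baked texture is roughly fifty times larger, which is the memory-for-bandwidth trade being discussed: one texture fetch per pixel instead of two, paid for with far more unique texel data.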
