I doubt that they will have a CU (GPU compute unit) reservation at the moment.

More probably, they instead have a CPU reservation for the OS and other tasks (like the announced voice recognition functions).

The curious thing about the voice recognition reservation is that, in order to work, it will always be present whether or not you have a PS Eye. A curious choice.

From a technical point of view, it will be very interesting to know how PS4 will manage the CPU with regard to audio, speech recognition, OS gestures and so on, because at the moment PS4 seems to lack any dedicated hardware to offload the CPU. (Regarding audio in particular, Cerny has only stated that PS4 has compression/decompression functions, similar to the ones already present on Xbox 360, nothing more, nothing less.)

In the future, for sure, some tasks will be moved to the CUs, but not soon, because (as I have learned on this board) some tasks, such as audio tasks (including speech recognition), will be very, very difficult (but not impossible!) to handle on the CUs.

As it stands, PS4 seems a bit unbalanced: a great GPU with a weak CPU.

I only hope that they have some hidden dedicated hardware to offload CPU work; otherwise the CPU (loaded with many tasks) could become the bottleneck of the system.

And I have to add, though it is only my personal feeling (no info, no rumor), that we could see some surprise in the CPU clock speed... Good, bad... Who knows?

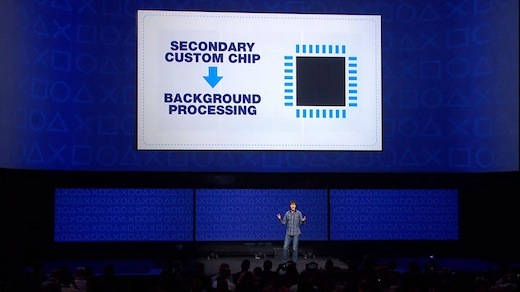

Why does it seem that everyone has forgotten about the Secondary Custom Chip?