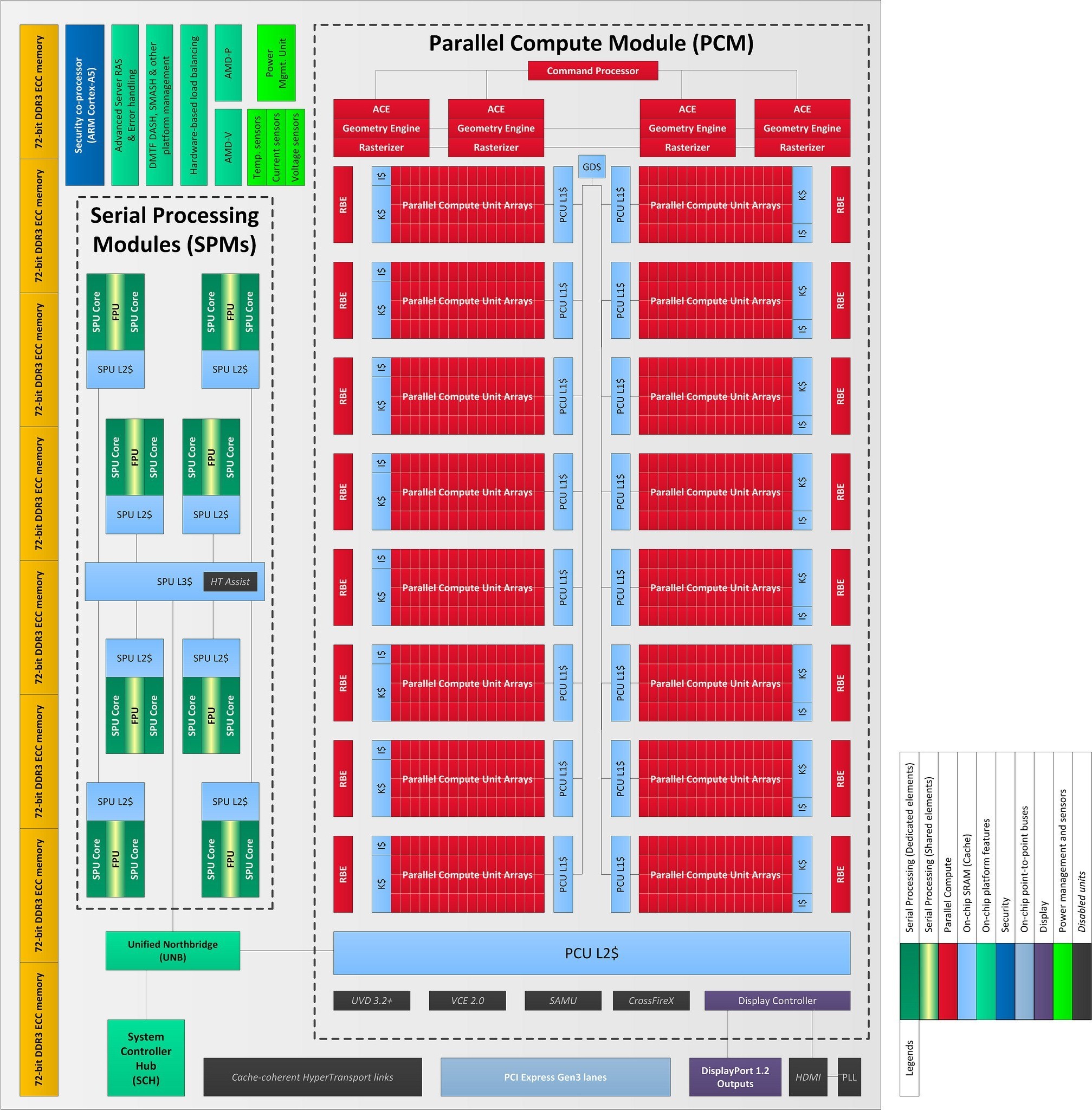

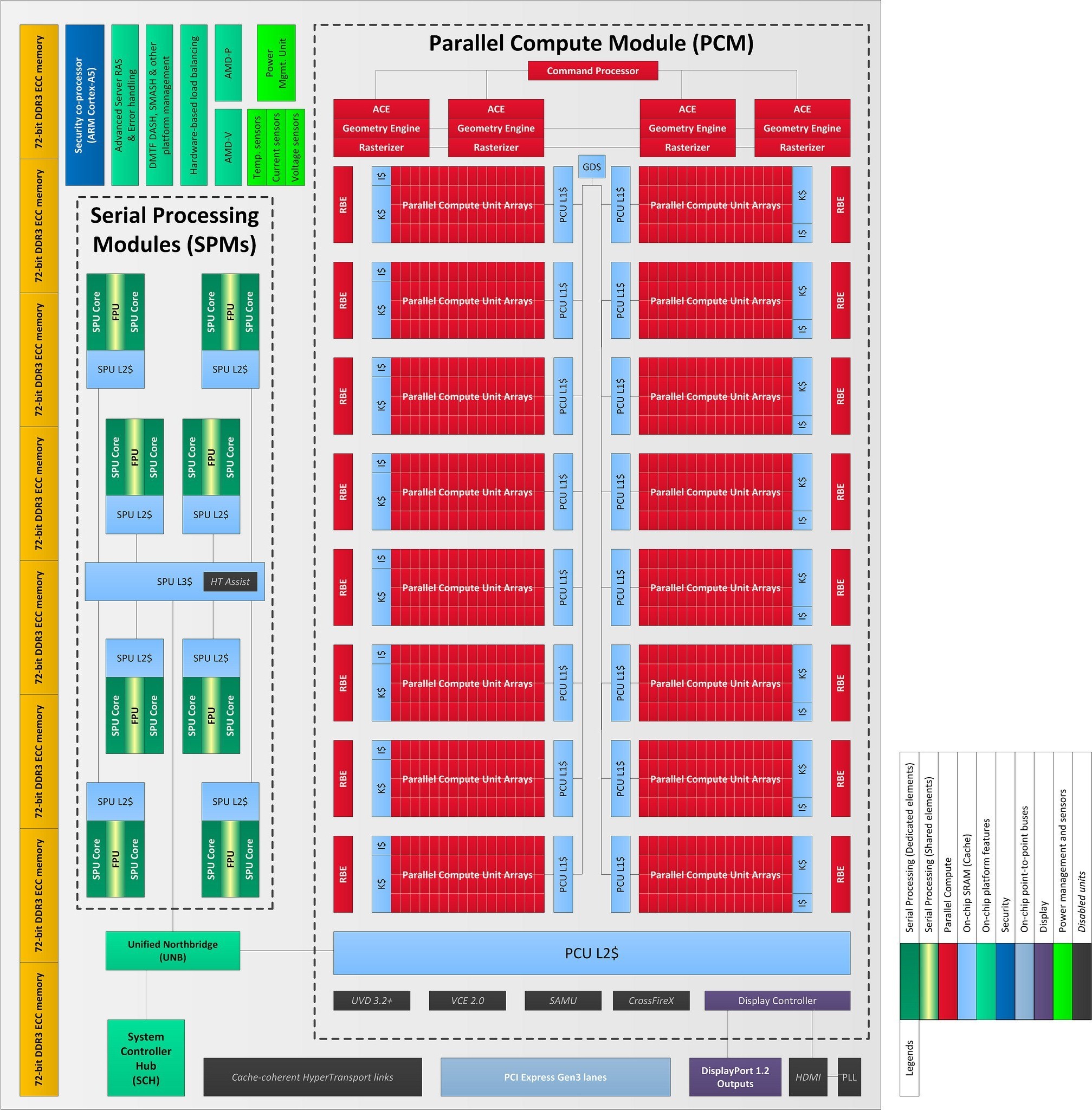

The diagram is bullshit. Well, it's not a graphics card diagram, that much is sure.

Likely nothing to do with VI or the Xbox. It may have nothing to do with AMD either.

I will reveal: this is the Steam/Apple console.

Joke, of course. Maybe.

The difference is that 16 CUs have 4096 SPs, while Southern Islands' 32 CUs give 2048 SPs.

http://wccftech.com/rumoramd-volcan...-leaked-512bit-memory-4096-stream-processors/

Highly doubt that's Xbox when the HDMI lanes are disabled.

Looks like something more applicable to a server part, maybe a new iteration of AMD's Sky line of GPUs.

Was initially my thought, too. But why would it have DisplayPort?

It could potentially be an Xbox part... if Microsoft is moving into having Xbox support in Windows and they will be using it as a "games will work guaranteed" type thing.

Having the on-chip/board security is really baffling to me.

AMD is implementing TrustZone on their APUs.

http://www.anandtech.com/show/6007/...cortexa5-processor-for-trustzone-capabilities

That diagram doesn't look like a console chip to me.

Can Infinity/PS4 be similar to Intel/AMD when it comes to GPGPU computing?

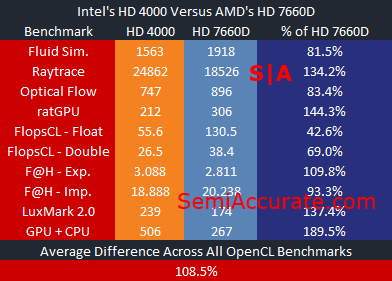

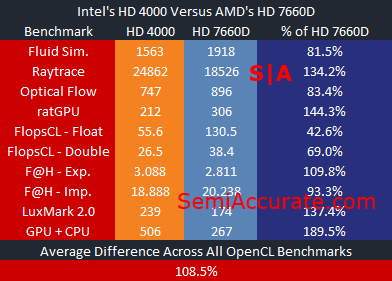

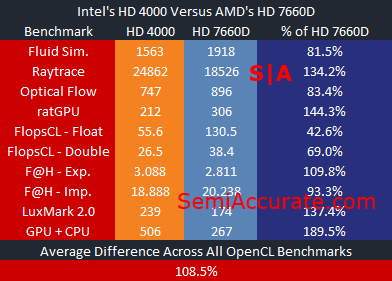

Take Intel's Core i7-3770K and AMD's A10-5800K. The Intel has a weak HD 4000 as its GPU and the AMD a much stronger HD 7660D; the AMD GPU has more than double the FLOPS of the Intel GPU. Intel's HD 4000 has 16 EUs, and AMD's HD 7660D has 384 VLIW4 cores arranged into six groups.

But even if it's weaker, the HD 4000 can use the i7-3770K's eight-megabyte L3 cache to improve its performance.

Results: the HD 4000 is the better performer, in some cases really outperforming the HD 7660D.

I believe that low-latency eSRAM can do the same thing as the L3 cache on the i7.

This is only for GPGPU, not for general 3D, of course.
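As a rough sanity check on the "more than double the FLOPS" figure, here is a back-of-the-envelope sketch. The unit counts come from the post; the clock speeds (1.15 GHz for the HD 4000, 0.8 GHz for the HD 7660D) and the FLOPs-per-clock figures are my assumptions, so treat the result as a ballpark only.

```python
# Rough peak single-precision throughput:
#   units * FLOPs per unit per clock * clock (GHz) = GFLOPS
# ASSUMPTIONS (not from the thread): the clock speeds and per-unit FLOPs/clock.

def peak_gflops(units, flops_per_unit_per_clock, clock_ghz):
    """Theoretical peak in GFLOPS; says nothing about real-world performance."""
    return units * flops_per_unit_per_clock * clock_ghz

# HD 4000: 16 EUs (from the post); assumed 16 FLOPs/clock per EU at 1.15 GHz.
hd4000 = peak_gflops(16, 16, 1.15)

# HD 7660D: 384 VLIW4 cores in six groups (from the post), so 64 per group;
# assumed 2 FLOPs/clock per core (one multiply-add) at 0.8 GHz.
hd7660d = peak_gflops(6 * 64, 2, 0.8)

ratio = hd7660d / hd4000
print(f"HD 4000: {hd4000:.1f} GFLOPS")
print(f"HD 7660D: {hd7660d:.1f} GFLOPS")
print(f"ratio: {ratio:.2f}x")
```

Under those assumptions the paper advantage of the AMD part comes out a little above 2x, which is why the benchmark results are interesting: peak FLOPS alone does not decide the outcome.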

In compute (the OpenCL benchmark you posted) it's clear a cache will boost performance. In rendering, as already said multiple times, not so much.

Surely AA doesn't count as GPGPU, as it's actually dealing with the graphics and presumably uses the graphics hardware?

Future AA techniques will use sophisticated graphics shaders.

In standard rendering I agree, but a lot of post-processing can be done with GPGPU computing, including AA and other things.

There is no '4 CUs' in Orbis.

Isn't that what the 4 CUs on Orbis are meant for? To help rendering with GPGPU computing?

Shaders are just computer programs that run on GPUs. Whether they are computing graphics or physics, they are shaders. Compute is just running shaders that calculate non-graphics tasks (or rather, that don't hit the graphics-specific components, as a recent discussion on this board suggested the definition should be).

AA in post-process can be implemented using shading, but maybe it would be possible to achieve better results with a smarter approach.

I've lost the thread of what you're trying to say. What is your point regarding Durango and compute/GPGPU/CPU?

I strongly believe that software has the duty to exploit hardware capabilities, and if a machine has a strong point in GPGPU, talented developers will find a way to make it shine. Or they could change occupation.

Even in PS3 development, they had a weak GPU but some SPEs useful for computing, and they pushed to use Cell's SPEs in some way to help rendering. I think they did good work, in the end.

Or it was never in and that was a misunderstanding. But that's an Orbis discussion.

Now they removed the 4 CU isolation, but it was there.

I count only 16 CUs with 4 SIMD 16-way each, for a total of 1024 ALUs.
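The arithmetic behind that count, as a quick sketch. The function name is just for illustration; the defaults are the 4 SIMDs per CU and 16 lanes per SIMD quoted above.

```python
def alu_count(cus, simds_per_cu=4, lanes_per_simd=16):
    """Total ALUs for a given number of compute units."""
    return cus * simds_per_cu * lanes_per_simd

# 16 CUs x 4 SIMDs x 16 lanes
total = alu_count(16)
print(total)  # 1024
```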

The ECC memory controller and the 16 Steamroller cores make me think of an APU for high-performance computing, with around 1 teraflop of DP performance. As for size, at 20nm it shouldn't be much bigger than current Bulldozer or Tahiti chips.

On paper it seems a nice part for the HPC market.

I would not attribute the merit only to "L3" or eSRAM, I think Intel has a really good design.

It's 16 CU arrays, not 16 CUs. It's just Tahiti x 2 plus changes that aren't so readily described by the diagram. One array equals 4 CUs, and Tahiti sports 8 arrays.
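To see how the "arrays" reading lines up with the SP numbers quoted earlier in the thread, here is a small sketch. It assumes 64 ALUs per CU (4 SIMDs x 16 lanes, as counted a few posts up) and 4 CUs per array as stated in this post; the constant and function names are only illustrative.

```python
ALUS_PER_CU = 4 * 16   # 4 SIMDs x 16 lanes per CU (assumed GCN-style layout)
CUS_PER_ARRAY = 4      # "one array equals 4 CUs"

def stream_processors(arrays):
    """Stream processors for a chip built from `arrays` CU arrays."""
    return arrays * CUS_PER_ARRAY * ALUS_PER_CU

tahiti = stream_processors(8)    # 8 arrays -> 32 CUs
diagram = stream_processors(16)  # 16 arrays -> 64 CUs

print(tahiti, diagram)  # 2048 4096
```

Read this way, the diagram is exactly double Tahiti, which matches the 2048 vs. 4096 SP figures mentioned earlier, whereas 16 plain CUs would only give 1024.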

I'm guessing that AMD and Nvidia are going to offer graphics parts with CPUs on them, with the intention of creating parts that are capable of CPU-based rendering in real time. Basically a hybrid chip that can deploy fixed/traditional programmable GPU hardware, GPGPU schemes, as well as CPUs for tasks. Anything done in REYES or ray tracing that can't be readily realized using GPGPU can simply be performed by a CPU array.

I don't think it's applicable to Durango, as it may sport a capable configuration but lacks the necessary complexity and amount of hardware to pull it off. That diagram looks like a chip that, if run at Durango speeds, would be 7 TFLOPS or more.
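For a sense of what "7 TFLOPS or more" implies, here is a rough sketch that turns the figure into a clock speed. It assumes the 4096 SP reading of the diagram from earlier in the thread and 2 FLOPs per ALU per clock (one multiply-add); both are assumptions, and the function name is made up for the example.

```python
def clock_ghz_for(tflops, alus, flops_per_alu_per_clock=2):
    """Clock (GHz) needed to hit a peak TFLOPS figure with a given ALU count."""
    flops = tflops * 1e12
    hz = flops / (alus * flops_per_alu_per_clock)
    return hz / 1e9

# 7 TFLOPS on 4096 ALUs (assumed), 2 FLOPs per ALU per clock (assumed)
needed = clock_ghz_for(7.0, 4096)
print(f"{needed:.3f} GHz")
```

Under those assumptions the chip would need to run at roughly 0.85 GHz to reach 7 TFLOPS.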

No, because devs working with devkits now have a 1.2 TF GCN APU with eSRAM. If the intention was a powerhouse as far back as that alpha kit, why has MS gimped their developers with such low performance compared to what the console is actually going to have? It could only be true if the existing devkits with their custom APU hardware, which needed full design and manufacture, are just an elaborate cover story.

There might be nothing to these clues. But isn't there a possibility?