You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

jaguar has on chip south bridge?

edit

ehm... ok, I googled...

Jaguar is a core, not a chip.

But AFAIK those jaguar-based chips will have it.

Jaguar is 15% faster in integer code compared to Bobcat, but it has also doubled the peak flops compared to Bobcat (double wide SIMD units and doubled SIMD bandwidth per core). Bobcat was already beating ATOM in benchmarks, so Jaguar should be over 2x faster than ATOM in flops heavy utilization. And top of that, it also doubled the core count from Bobcat (there's no 4 core ATOMs currently in the market to challenge the Jaguar either).I actually looked into Jaguar a little more for the first time. It's really puny, based on it being perhaps a 15% faster Bobcat.

I would say it's somewhere in the same ballpark as Intel's Atom.

If you compare a Jaguar core to a Piledriver core (Trinity), Jaguar doesn't look that bad really.Trinity has MUCH better single-thread performance than Jaguar. Everything that is not heavily threaded will work much faster on Trinity.

Based on information from these sources:

- http://semiaccurate.com/2012/08/28/amd-let-the-new-cat-out-of-the-bag-with-the-jaguar-core/

- http://www.anandtech.com/show/6201/amd-details-its-3rd-gen-steamroller-architecture/2

- Agner Fog's microarchitecture.pdf

We can gather following comparison results:

- Both are modern x86/x64 out of order cores (with register renaming, efficient store forwarding, etc goodies)

- Both support newest instruction sets (BMI, AVX, FC16, etc).

- Both can execute 2 integer (ALU) operations per cycle.

- Both have throughput of 8 (vector) flops per cycle per core (Jaguar = 128b add + 128b mul, Piledriver = 128b mad, assuming of course that the other core uses half of the shared FPU resources).

- Both split 256 bit AVX instructions to two 128 bit operations.

- Jaguar cores have their own 2-way 32 KB L1i caches. Two Piledriver cores share a 2-way 64 KB L1i cache. Sharing a 2-way cache between 2 cores is bad for performance, so Jaguar seems to win this one.

- Piledriver core has tiny 16 KB L1 data cache, while Jaguar core has larger 32 KB L1 data cache. Jaguar wins again.

- Piledriver has a shared 16 way 2 MB of L2 cache for a pair of cores (4 MB for four cores in total). Jaguar has a shared 16 way 2 MB of L2 cache for four cores. Piledriver is better here.

- Jaguar has shorter pipelines than Piledriver. This should improve branching performance and help Jaguar to keep it's pipelines filled. But Piledriver has more complex branch prediction and lots of other IPC improving features.

- According to Agner Fog's analysis Bulldozer has significantly more bottleneck cases than Bobcat. Both Piledriver and Jaguar improved IPC of their predecessors, and likely shifted the bottlenecks a bit. But it's still unlikely that Jaguar has significantly more bottlenecks than Piledriver.

--> The IPC of Piledriver and Jaguar CPU cores should be pretty close.

Of course Piledriver has much much higher clock ceiling (for desktop use). However 17W Piledriver ULV clocks shouldn't be that much higher than comparable Jaguar clocks. 17W ULV Sandy Bridges were clocked at 1.6-1.8 GHz, and Jaguar cores should be slightly above that (1.1 * 1.65 GHz = 1.815 GHz). I don't personally expect 17W ULV Trinity (two module, four cores) to hit much higher clocks than that (turbo might of course reach 2 GHz+ just like it does on Sandy/Ivy Bridge).

Could you explain the reasons why Trinity/Piledriver has "MUCH better" single-threaded performance than Jaguar? I am not a hardware engineer, so I have likely missed some fine details.

Last edited by a moderator:

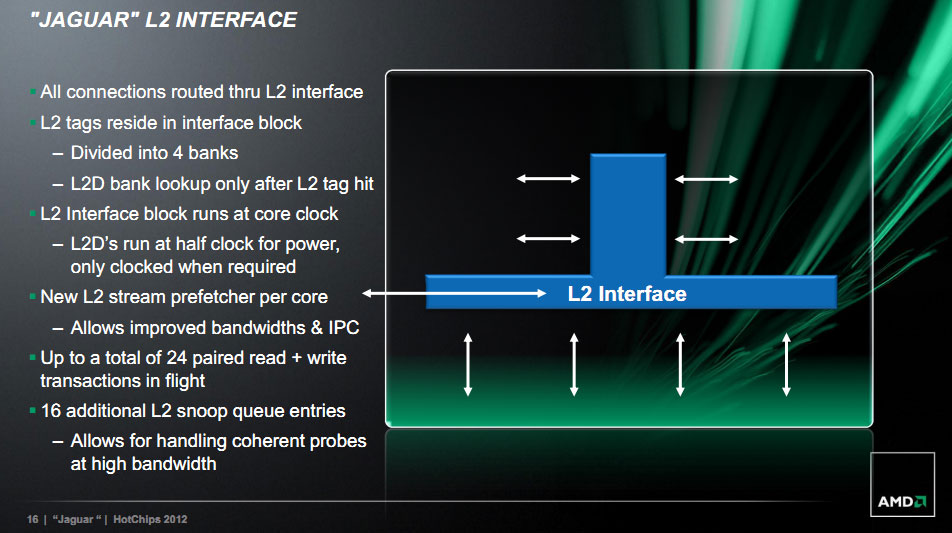

I wonder if AMD can customize L2 cache clock depending on target power/performance. Bobcat had 1/2 speed L2 and I'm under impression Jaguar for tablets and nettops will continue that trend.

At least some reports are suggesting AMD can run L2 at full clock which for console design is very desired.

At least some reports are suggesting AMD can run L2 at full clock which for console design is very desired.

Well AMD has stated that the L2 works at half speed (not the bus interface though).I wonder if AMD can customize L2 cache clock depending on target power/performance. Bobcat had 1/2 speed L2 and I'm under impression Jaguar for tablets and nettops will continue that trend.

At least some reports are suggesting AMD can run L2 at full clock which for console design is very desired.

They didn't spoke about it but I guess they could implement something akin to the feature that is supposed to make it into Streamrollers, power killing the unused part of L2.

--> The IPC of Piledriver and Jaguar CPU cores should be pretty close.

You left out that Bobcat can only decode/issue 2 instructions per cycle, while PD can decode 4 and issue 4 to an integer core and/or 4 to the FPU. And Bobcat can only do 1 load + 1 store per cycle, while PD can do 2 loads (I don't think it can actually do 2 stores though). These are not small differences - mainly, being able to support a load/store or two in conjunction with two ALU/branch/multiply/etc is a big deal, especially for x86. Even in FPU heavy code it's nice to be able to issue at least one integer instruction in addition to two FP ops for flow control/pointer arithmetic/etc. And PD's FPU is more flexible even w/o FMA code because it can do either 2 FMULs or 2 FADDs per cycle instead of just one of each.

PD also undoubtedly has much bigger OoO resources, and probably better load/store disambiguation.

As far as L1D Is concerned, Jaguar does have the bigger cache but loses in associativity (2-way vs 4-way) which is a liability on some workloads. And from test numbers I've seen its L2 is not just lower bandwidth but at least as high latency.

That is true but comparing 4 Jaguar cores vs. 2 Piledriver modules the overall decode throughput is indeed the same. Of course if it is running only one thread (per module) then it should be better on Piledriver, OTOH if you run into instructions (when there are two threads per module) which need the microcode decoder Jaguar might be better (as it won't block the other thread).You left out that Bobcat can only decode/issue 2 instructions per cycle, while PD can decode 4 and issue 4 to an integer core and/or 4 to the FPU.

PD can do either two loads or one load + one store per cycle (the cache has only two ports), whereas Jaguar is limited to one load + one store. This is not really that much of a difference but yes PD is better. I don't think though it's that much of an issue, essentially that's the same capability for Jaguar as intel had up to Nehalem (whereas SNB/IVB now are more like PD in that regard, either two loads or one load + one store).And Bobcat can only do 1 load + 1 store per cycle, while PD can do 2 loads (I don't think it can actually do 2 stores though). These are not small differences - mainly, being able to support a load/store or two in conjunction with two ALU/branch/multiply/etc is a big deal, especially for x86.

I thought Bobcat (and Jaguar) could issue more than 2 uops per clock as well (up to 6?, each 2 of integer, load/store, simd), as long as there are enough ops in the queues (obviously the decoder couldn't feed that). Maybe it can only retire 2 per clock though, K8 had serious restrictions there as well. I might be totally wrong hereEven in FPU heavy code it's nice to be able to issue at least one integer instruction in addition to two FP ops for flow control/pointer arithmetic/etc. And PD's FPU is more flexible even w/o FMA code because it can do either 2 FMULs or 2 FADDs per cycle instead of just one of each.

In any case if you argue with PD's FPU then again it is 2 FPU for BD vs. 4 for Jaguar so it's unclear if that's always a win for PD (interestingly, the benchmarks suggest that having only one FPU does not limit performance that much for multithreaded code even in fp-heavy code - maybe due to the typical quite long latencies of these instructions utilization might not be very high typically for the single-threaded case).

No doubt it has more OoO resources, but even Bobcat is quite respectable (e.g. int PRF size is 64 for Bobcat and 96 for BD (not sure if same for PD?) though yeah Bobcat has very few entries in the int/address/simd schedulers (but Jaguar should increase that). Bobcat's Load/Store unit is also quite robust (some amd paper stated it's more advanced than what any other amd cpu had at that time, so probably better than what K10 had).PD also undoubtedly has much bigger OoO resources, and probably better load/store disambiguation.

In contrast though, Bobcat doesn't suffer in some workloads due to L1 write-through cache like BD does.As far as L1D Is concerned, Jaguar does have the bigger cache but loses in associativity (2-way vs 4-way) which is a liability on some workloads.

I'm not sure what's better on average, 16KB/4-way vs. 32KB/2-way. I'd call it a draw

AMD stated 17 cycles for L2 for Bobcat (but Jaguar could be different) and 18-20 for BD (though the latter may include the L1 latency, not sure about the former - in any case the numbers don't look too different).And from test numbers I've seen its L2 is not just lower bandwidth but at least as high latency.

In short imho Jaguar doesn't look that much worse than Piledriver as far as IPC is concerned.

Last edited by a moderator:

Already discussed in the last page, but I short recap.

Bobcat vs K10 (Athlon II X4 630 downclocked at 1.6Ghz):

http://www.xtremesystems.org/forums...Core-performance-analysis-Bobcat-vs-K10-vs-K8

In generic integer calculations Bobcat has 5% slower IPC than similarly clocked K10. The slightly improved Stars core (Llano) has a few percents higher IPC than K10. Bulldozer has slightly worse IPC than Stars and Piledriver has pretty much equal IPC to Stars.

AMD promised a +15% IPC increase from Bobcat->Jaguar. If these predictions hold any water, the IPC of Jaguar might even be higher than Bulldozer's, and be very compatible with Piledriver.

The worst case of K10 against Bobcat was SIMD floating point performance (K10 has on avarage 50.19% better performance per clock). Jaguar doubles the SIMD float (and integer) performance (64 bit -> 128 bit SIMD) and introduces all the new AVX instructions. I don't think we have to worry about SIMD performance anymore. It should now be comparable to BD/PD.

Quote from Agner Fog's analysis:

"The decoders can handle four instructions per clock cycle. Instructions that belong to different cores cannot be decoded in the same clock cycle. When both cores are active, the decoders serve each core every second clock cycle, so that the maximum decode rate is two instructions per clock cycle per core."

So Jaguar and BD/PD cores can decode an equal amount of instructions per cycle.

Also BD/PD have an additional stall case, because the decoder is shared between two cores (Agner Fog):

"Instructions that generate more than two macro-ops are using microcode. The decoders cannot do anything else while microcode is generated. This means that both cores in a compute unit can stop decoding for several clock cycles after meeting an instruction that generates more than two macro-ops"

Bobcat vs K10 (Athlon II X4 630 downclocked at 1.6Ghz):

http://www.xtremesystems.org/forums...Core-performance-analysis-Bobcat-vs-K10-vs-K8

In generic integer calculations Bobcat has 5% slower IPC than similarly clocked K10. The slightly improved Stars core (Llano) has a few percents higher IPC than K10. Bulldozer has slightly worse IPC than Stars and Piledriver has pretty much equal IPC to Stars.

AMD promised a +15% IPC increase from Bobcat->Jaguar. If these predictions hold any water, the IPC of Jaguar might even be higher than Bulldozer's, and be very compatible with Piledriver.

The worst case of K10 against Bobcat was SIMD floating point performance (K10 has on avarage 50.19% better performance per clock). Jaguar doubles the SIMD float (and integer) performance (64 bit -> 128 bit SIMD) and introduces all the new AVX instructions. I don't think we have to worry about SIMD performance anymore. It should now be comparable to BD/PD.

BD/PD decoder is shared between two cores. A module can decode 4 uops per cycle, but the decoder is time sliced (every other cycle) between two cores.You left out that Bobcat can only decode/issue 2 instructions per cycle, while PD can decode 4 and issue 4 to an integer core and/or 4 to the FPU.

Quote from Agner Fog's analysis:

"The decoders can handle four instructions per clock cycle. Instructions that belong to different cores cannot be decoded in the same clock cycle. When both cores are active, the decoders serve each core every second clock cycle, so that the maximum decode rate is two instructions per clock cycle per core."

So Jaguar and BD/PD cores can decode an equal amount of instructions per cycle.

Also BD/PD have an additional stall case, because the decoder is shared between two cores (Agner Fog):

"Instructions that generate more than two macro-ops are using microcode. The decoders cannot do anything else while microcode is generated. This means that both cores in a compute unit can stop decoding for several clock cycles after meeting an instruction that generates more than two macro-ops"

Again PDs FPU is shared between two cores. If one core does 2 FMUL/FADD/FMA per cycle, the other core can do nothing. If resources are evenly split, both Jaguar and PD have equal flops throughput. However PD needs FMA code to reach it's peak, while Jaguar can do that on old code (with separate muls and adds). So Jaguar should have better peak performance in current/legacy code (FMA3/FMA4 usage in applications/games is still very low, partly because there's two implementations that are not compatible).And PD's FPU is more flexible even w/o FMA code because it can do either 2 FMULs or 2 FADDs per cycle instead of just one of each.

Yes, that's likely true however AMD improved OoO execution on Jaguar as well. According to (http://semiaccurate.com/2012/08/29/another-nugget-on-amds-jaguar/) the scheduler can handle more entries and the core has larger reorder buffers.PD also undoubtedly has much bigger OoO resources, and probably better load/store disambiguation.

Can you quote where you got this information? According to semiaccurate.com "the caches can run at half clock to save power when needed". If I understood that correctly, the caches can be dynamically down clocked to save performance (when CPU load is light).Well AMD has stated that the L2 works at half speed (not the bus interface though).

mczak said:That is true but comparing 4 Jaguar cores vs. 2 Piledriver modules the overall decode throughput is indeed the same. Of course if it is running only one thread (per module) then it should be better on Piledriver, OTOH if you run into instructions (when there are two threads per module) which need the microcode decoder Jaguar might be better (as it won't block the other thread).

Even if you really are running four fully loaded threads - which you quite often are not, but still want strong performance on one or two of them, especially if your clocks are low - that's not a fair comparison because PD will be able to achieve better flexibility by alternating between threads as it decodes. This is relevant because it means that when one core can't utilize the extra decode bandwidth (due to the OoO window being backed up) the other core gets better decode bandwidth. Assuming that that's how PD works, anyway.

I thought Bobcat (and Jaguar) could issue more than 2 uops per clock as well (up to 6?, each 2 of integer, load/store, simd), as long as there are enough ops in the queues (obviously the decoder couldn't feed that). Maybe it can only retire 2 per clock though, K8 had serious restrictions there as well. I might be totally wrong here.

I'm referring to issue the way Intel, AMD, ARM, and as far as I know most of the industry does: how many instructions can enter the reorder buffers per cycle, not how many instructions can move from the reoder buffers to the execution units. The latter is instead called "dispatch" by those parties, and it's a very important distinct since x86 uarchs often issue macro-ops/fused uops but dispatch uops. So the issue rate closer matches real instructions.

sebbbi said:Already discussed in the last page, but I short recap.

Bobcat vs K10 (Athlon II X4 630 downclocked at 1.6Ghz):

http://www.xtremesystems.org/forums/...t-vs-K10-vs-K8

In generic integer calculations Bobcat has 5% slower IPC than similarly clocked K10. The slightly improved Stars core (Llano) has a few percents higher IPC than K10. Bulldozer has slightly worse IPC than Stars and Piledriver has pretty much equal IPC to Stars.

The comparison that forum poster makes is poor. The benchmarks are not well chosen and his classifications aren't even correct. A few synthetics like Sandra, 3DMark, and "speedtraq" don't make for a comprehensive comparison in CPU performance.

This belief that Jaguar can surpass Llano's IPC is sorely lacking in any kind of architectural justification.

I read it on Hardware.frCan you quote where you got this information? According to semiaccurate.com "the caches can run at half clock to save power when needed". If I understood that correctly, the caches can be dynamically down clocked to save performance (when CPU load is light).

I guess they back their claim on that slide:

I'm not sure my self about what they mean by L2D.

Could it that the data in the L2 data bank are only powered when there is a cache hit?

I don't understand to say the truth

That's true... in theory. But the shared decoder has assumed to be one of the key bottlenecks in BD/PD architecture. In Steamroller (http://www.anandtech.com/show/6201/amd-details-its-3rd-gen-steamroller-architecture) the biggest change AMD is doing is moving back to having a separate decoder per core (just like they had in their earlier designs such as K10/Stars and Bobcat/Jaguar). AMD claims Steamroller is going to have 30% improved IPC, so it seems that shared decoder wasn't such a good idea.PD will be able to achieve better flexibility by alternating between threads as it decodes. This is relevant because it means that when one core can't utilize the extra decode bandwidth (due to the OoO window being backed up) the other core gets better decode bandwidth. Assuming that that's how PD works, anyway.

So yes. Shared decoder gives better flexibility, but some severe starvation to the cores as well. I wouldn't say BD/PD straight up beats Jaguar in this case.

Ok, lets use AMDs official slides then. Bobcat slides (years ago) claimed 90% of the IPC compared to their desktop parts (K10 Phenom at that time). Bobcat was basically a simplified K10 core. They removed the third ALU pipeline, because it had very low utilization ratio (same reason was given why it wasn't present in BD). They also removed everything that allowed the core to clock high (lots of extra pipeline stages are needed to reach high clocks). Their focus wasn't on reducing IPC, they tried to keep it close to the desktop CPUs (90% was the goal according to the slides).The comparison that forum poster makes is poor. The benchmarks are not well chosen and his classifications aren't even correct. A few synthetics like Sandra, 3DMark, and "speedtraq" don't make for a comprehensive comparison in CPU performance.

This belief that Jaguar can surpass Llano's IPC is sorely lacking in any kind of architectural justification.

The Stars core in Llano is a only slightly upgraded K10/K10.5. Benchmarks show maybe a 10% improvement. So it's basically 110% compared to Phenom, and Jaguar should be 90% * 115% = 103%. So yes, according to these rough calculations Llano/Stars core has slightly better average IPC than Jaguar, but only by few percents (6%). Jaguar has been on development for many years. It's certainly possible that AMD actually reaches the claimed 15% average IPC improvement (and not a maximum improvement of 15%). If you just look at Nehalem, Sandy Bridge and Ivy Bridge execution units, they are pretty much the same. However all other parts of the CPU have been improved a lot. Llano/Stars is a very old AMD architecture. Jaguar however is brand new. It's certainly possible that Jaguar has many new tricks in its sleeve to outperform the old Stars core (even if it has this one extra ALU pipeline that has very low utilization according to AMD).

Ok, lets use AMDs official slides then. Bobcat slides (years ago) claimed 90% of the IPC compared to their desktop parts (K10 Phenom at that time).

I feel the urge to reiterate that their slides did not mention what that percentage was calculated against, and that it was left deliberately foggy - we don't know if they were comparing against K8L (K10) or straight up K8, and there are strong indications in practice that it was the latter actually.

According to AMD architects the goal was to reach 90% of the integer IPC of a K8 Core, which they did. The 15% improvement should put Jaguar right between a K8 and K10 core in performance. Quite respectable considering that Jaguar takes up 3.1 mm² on 28 nm while a Stars core needs ~10 mm² on 32 nm. Jaguar is obviously more optimized for density, but I think that's still an impressive achievement.

For those looking for IPC comparisons between architectures, here is one that shows all Intel archs since Core and also includes K8 through Bobcat:

http://ht4u.net/reviews/2011/amd_zacate_e350_review/index10.php

For those looking for IPC comparisons between architectures, here is one that shows all Intel archs since Core and also includes K8 through Bobcat:

http://ht4u.net/reviews/2011/amd_zacate_e350_review/index10.php

That's true... in theory. But the shared decoder has assumed to be one of the key bottlenecks in BD/PD architecture. In Steamroller (http://www.anandtech.com/show/6201/amd-details-its-3rd-gen-steamroller-architecture) the biggest change AMD is doing is moving back to having a separate decoder per core (just like they had in their earlier designs such as K10/Stars and Bobcat/Jaguar). AMD claims Steamroller is going to have 30% improved IPC, so it seems that shared decoder wasn't such a good idea.

Totally false comparison. Yes, the shared decoder is worse than independent 4-wide ones. No, that doesn't make it the same as two independent 2-wide ones like in Jaguar.

Ok, lets use AMDs official slides then. Bobcat slides (years ago) claimed 90% of the IPC compared to their desktop parts (K10 Phenom at that time).

It wasn't clear what they were comparing against, but I'd rather use real performance numbers from useful software and not a lay-person's small collection of synthetic benchmarks nor company marketing slides that are meant to portray the product in as positive a light as possible.

Bobcat was basically a simplified K10 core. They removed the third ALU pipeline, because it had very low utilization ratio (same reason was given why it wasn't present in BD). They also removed everything that allowed the core to clock high (lots of extra pipeline stages are needed to reach high clocks). Their focus wasn't on reducing IPC, they tried to keep it close to the desktop CPUs (90% was the goal according to the slides).

That's not even remotely true, it's a totally different core. There's way more different than a third ALU + AGU, I don't really feel the need to iterate everything, you should go read up more on both of them. And the pipeline is of comparable length.

You misunderstood me. I wasn't claiming that 2-wide Jaguar decoder is in any way comparable with 4-way Steamroller decoder. I was just using Steamroller as an example why the shared 4-way decoder in BD/PD is a likely bottleneck, as the 2x independent decoders are the largest announced change in Steamroller and we see a big 30% IPC gain.Totally false comparison. Yes, the shared decoder is worse than independent 4-wide ones. No, that doesn't make it the same as two independent 2-wide ones like in Jaguar.

Shared 4-wide decoder (in BD/PD) will of course give some advantages when one of the threads stall (and that happens every time we have a cache miss). However the shared 4-way decoder only has the same peak throughput as two independent 2-wide decoders when both cores are used. Your earlier post made it look like BD/PD has double the decode throughput.

The designs are different, but there haven't been a "totally different core" in modern CPU & GPU design for a long time (even Xeon Phi core was based on old Pentium design). AMD has always reused lots of building blocks. When you compare the front ends, caches, branch predictors, execution units, etc, you will see lot of similarity to previous AMD designs.That's not even remotely true, it's a totally different core. There's way more different than a third ALU + AGU

That's true. I shouldn't use web benchmarks that much. It would be interesting to see how a Jaguar based APU compares to Llano. 1.84 GHz Jaguar (quad core) shouldn't beat any of the current Llano options (there are no sub 1.6 GHz models), but with some underclocking for the Llano part we should be able to put this debate to restIt wasn't clear what they were comparing against, but I'd rather use real performance numbers from useful software and not a lay-person's small collection of synthetic benchmarks nor company marketing slides that are meant to portray the product in as positive a light as possible.

Last edited by a moderator:

AMD presented BD and BC architectures together, although it seems only a few sites made a clock-to-clock cmp.

Here is a limited 1.6GHz test performed by a local site. Use any translator.

http://diit.cz/clanek/bulldozer-vs-merom-vs-bobcat-2-jadra-16-ghz-netburst-1-jadro-346-36-ghz-via

btw AMD's successors to Jaguar are likely named 'Margay' and 'Leopard'.

Here is a limited 1.6GHz test performed by a local site. Use any translator.

http://diit.cz/clanek/bulldozer-vs-merom-vs-bobcat-2-jadra-16-ghz-netburst-1-jadro-346-36-ghz-via

btw AMD's successors to Jaguar are likely named 'Margay' and 'Leopard'.

The designs are different, but there haven't been a "totally different core" in modern CPU & GPU design for a long time (even Xeon Phi core was based on old Pentium design).

AMD has always reused lots of building blocks. When you compare the front ends, caches, branch predictors, execution units, etc, you will see lot of similarity to previous AMD designs.

Bobcat and Bulldozer are both totally different than K7/K8/K10 which were of the same family.

There might some very small "old" ip blocks used , but the overall architecture is totally different.

So, lets compare those parts where you claim "similarities"..

1) front ends: Totally different. K7->K10 had the pre-decode bits. Bobcat and Bulldozer does not have.

2) caches:

a) Bobcat L2 running at half speed. AMD has never had half-speed internal cache before

b) Bobcat L1D. 32kiB/8 . Totally different than K7/K8/K10 64kiB/2. Also different than bulldozer 16kiB/2.

c) Bobcat L1I. 32kiB/8. Totally different than any other AMD instruction cache.

d) Bulldozer L2: much slower than any AMD's earlier L2 cache, and has to feed 4 L1 caches instead of 2. Totally new design.

e) Bulldozer L1D: 16kiB/2. Totally different than anything before.

f) Bulldozer L1I: 64kiB/2, just like K7/K8/K10. But: does not contain the pre-decode bits, so different design. might be based on K7 design though.

3) branch predictors: Bulldozer has totally new system of branch predictors where fast but bad predictor predicts first and better but slower predictor can do correction later. Completely new design.

I don't know much about bobcat branch predictors.

4) Execution units:

a) Bulldozer has totally new FPU with the FMA's, new multiplier, divider, AGUs.

b) Bobcat has totally new FPU(power optimized, slow x87, no 3dnow).

So, there is almost no similarities, or, practically only similarities are:

1) all three architectures have separate clusters for integer and fpu.

2) L1I size and associativity of bulldozer

Why do you consider Bobcat FPU slow with x87? As far as I can tell it is exactly as fast as you'd expect given the simd float capabilities. It even still has "free" fxch and unlike the atom (which seems to suffer from some design flaw) it's got essentially the same instruction throughput as when using (scalar) SSE.b) Bobcat has totally new FPU(power optimized, slow x87, no 3dnow).

Oh and dropping 3dnow is hardly relevant here, ripping that out should be fairly trivial and be quite orthogonal to how the design of the fpu looks like (and it probably won't really save many transistors neither).

Why do you consider Bobcat FPU slow with x87? As far as I can tell it is exactly as fast as you'd expect given the simd float capabilities. It even still has "free" fxch and unlike the atom (which seems to suffer from some design flaw) it's got essentially the same instruction throughput as when using (scalar) SSE.

Oh and dropping 3dnow is hardly relevant here, ripping that out should be fairly trivial and be quite orthogonal to how the design of the fpu looks like (and it probably won't really save many transistors neither).

Performance with 80-bit fp numbers is much slower, that's what I mean. (latency 5, throughput 3op/cycles, vs 4/1)

(and there are no 80-bit fp numbers on sse)

and it's not exactly as fast on smaller numbers either, for example:

1) fmisc pipe has been dropped

2) 64-bit multiply has throughput of only one op/2 cycles.

3) fp add latency down from 4 to 3

4) 32-bit fp mul latency down from 4 to 2.

5) fdiv no longer has variable latency

Actually, the 32-bit throughput is about the ONLY thing equal with K8 and bobcat fpu's.

So, internally K7/K8 fpu was an 80-bit fpu that could be split into two 32-bit halves for simd processing.

(and when executing ops with 32-bit data, more than half of the multiplier circuits are sitting idle doing nothing)

And internally Bobcat's fpu is 2*32-bit simd fpu's where the 2 lanes can be ganked together and loop same again to execute 64- and 80-bit operations. Totally different design.

Last edited by a moderator:

First time I heard of that. Source?Performance with 80-bit fp numbers is much slower, that's what I mean. (latency 5, throughput 3op/cycles, vs 4/1)

edit: the multiplier is indeed slower for DP and even slower for EP but this does not extend to other x87 instructions like add. Well maybe the things using the division hw are slower too but I believe that would be the case for all modern x86 cpus.

But yes otherwise I agree Bobcat's simd unit is quite different to K8, never questioned that.

Last edited by a moderator:

Similar threads

- Replies

- 70

- Views

- 19K

- Replies

- 98

- Views

- 32K