Note the plural "products".

So probably a GTX 970ti and the GTX 960 at least.

or just GTX 980M/970M.

The question is: does feature level 12_0 exist (and if so what new features will be available) or have we been mislabeling 11_3 as 12_0? My guess is 12_0 still exists (but perhaps not finalized).

It should be noted at this point in time this is not an exhaustive list of all of the new features that we will see, and Microsoft is still working to define a new feature level to go with them (in the interim they will be accessed through cap bits), but ...

Also, I don't see how current 11_1 cards could support 11_3 through a software update. 11_3 includes new non-trivial hardware requirements.

You're assuming that all features of current 11_1 cards are already exposed in 11_1, which isn't necessarily true (like PRT/Tiled Resources, which weren't exposed until 11.2).

...and Damien from Hardware.fr measures only 52 ROPs on GTX 970:

http://www.hardware.fr/articles/928-3/performances-theoriques-pixels.html

http://forums.laptopvideo2go.com/topic/31126-inf-v5014/

NVIDIA_DEV.13C0 = "NVIDIA GeForce GTX 980"

NVIDIA_DEV.13C2 = "NVIDIA GeForce GTX 970"

NVIDIA_DEV.13D7 = "NVIDIA GeForce GTX 980M"

NVIDIA_DEV.13D8 = "NVIDIA GeForce GTX 970M"

NVIDIA_DEV.13BD = "NVIDIA Tesla M40"

NVIDIA_DEV.13C1 = "NVIDIA Graphics Device "

NVIDIA_DEV.13C3 = "NVIDIA Graphics Device "

It's not the ROPs. Each SMM can output 16 bytes (4x32b pixels) per clock. 970 only has 13 SMMs ==> 13x4 = 52.

There seems to be a bit more going on. If you look at the blending results (other than plain 4x8bit) there's still lots of difference between GTX 980 and 970, even for things which are slow-as-molasses like 4xfp32 blend, which should not be limited by SMM export at all.
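For what it's worth, the export-limit arithmetic from the quoted post can be written out as a quick sketch. It assumes the figure given there (4 32-bit pixels per SMM per clock); the function names are made up for illustration, and the ROP count is left as a parameter rather than asserted.

```python
def export_limited_pixels_per_clock(smm_count, pixels_per_smm_per_clock=4):
    """Pixels per clock the SMMs can export, per the quoted 16 bytes/clock figure."""
    return smm_count * pixels_per_smm_per_clock


def effective_pixels_per_clock(smm_count, rop_count, pixels_per_smm_per_clock=4):
    """Simple model: fill rate is capped by whichever is lower, ROPs or SMM export."""
    export_limit = export_limited_pixels_per_clock(smm_count, pixels_per_smm_per_clock)
    return min(rop_count, export_limit)


# 13 SMMs on GTX 970, 16 on GTX 980
gtx970_export = export_limited_pixels_per_clock(13)
gtx980_export = export_limited_pixels_per_clock(16)
```

This only models the plain 4x8bit case; it says nothing about why the slower blend formats still differ between the two cards.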

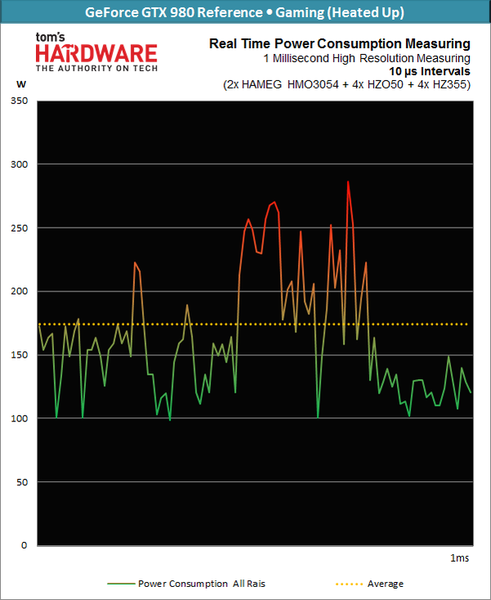

Nvidia's newest architecture presents us with a whole new set of challenges for measuring power consumption. If the maximum of all four possible rails are to be measured exactly (to find out Maxwell’s power consumption reduction secrets), then a total of eight analog oscilloscope channels are needed. This is because voltage and current need to be recorded concurrently at each rail in real-time. If the voltages are measured and then used later, the result may be inaccurate. So, how did we solve this problem?

We enlisted the help of HAMEG (Rohde & Schwarz) to search for a solution with us. In the end, we had to use two oscilloscopes in parallel (a master-slave triggered setup), allowing us to accurately measure and record a total of eight voltages or currents at the same time with a temporal resolution down to the microsecond.

To illustrate, let’s take a look at how Maxwell behaves in the space of just 1 ms. Its power consumption jumps up and down repeatedly within this time frame, hitting a minimum of 100 W and a maximum of 290 W. Even though the average power consumption is only 176 W, the GPU draws almost 300 W when it's necessary. Above that, the GPU slows down.
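As a rough sketch of why voltage and current have to be sampled together: instantaneous power on each rail is the product of the two at the same instant, and the totals are summed across rails before taking min, max and average. The function name and the sample values below are hypothetical, not taken from the article's data.

```python
def power_stats(rails):
    """rails: list of (voltages, currents) pairs, sampled at the same instants.

    Returns (min, max, mean) of the total instantaneous power in watts.
    """
    sample_count = len(rails[0][0])
    totals = []
    for k in range(sample_count):
        # Sum V*I over every rail for this sample instant.
        totals.append(sum(volts[k] * amps[k] for volts, amps in rails))
    return min(totals), max(totals), sum(totals) / len(totals)


# Two made-up rails with two samples each (illustrative values only).
example_rails = [
    ([12.0, 12.0], [5.0, 10.0]),
    ([3.0, 3.0], [2.0, 4.0]),
]
lowest, highest, average = power_stats(example_rails)
```

With four rails that is four voltage and four current traces, which is where the eight channels come from.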

If [...] the oscilloscopes are nothing but irrelevant nitpicking at the rate of millions of times a second.

Agreed, although I don't fully understand what causes these spikes in the first place. Obviously some parts of a frame will be much more power-hungry than others but it's strange to see a spike at 275W+ for what's presumably nowhere near a true power virus. Is there some buffering going on? (i.e. capacitors in electrical terms I guess)

We knew PRT was available at GCN's launch (obviously wasn't in D3D at launch). I doubt they've been hiding big features like conservative rasterization/etc. for this long.

current inrush from a ton of clock-gated units waking up [...] Waking up power-gated cores is a pretty intensive endeavor as well,

Just making sure I understand this correctly - you're saying that both clock gating and power gating would result in a (short) higher peak when resuming after being turned off than the actual power consumption of the units when continuously turned on?

all this happening right after the heuristic for turbo determined it had enough margin to ratchet voltage and clock

Good point. It still annoys me a bit that there's no way to disable turbo (even if it means always being at base clock!) for performance analysis purposes.

There is more than enough hardware and wires than necessary to melt the chip down several times over.

Tsk tsk, don't tell that to people who make a big deal out of chips exceeding their TDPs without thermal/power throttling.

There's an instantaneous power cost to wakeup, especially for power gating. Power gates themselves have a power cost when they switch, especially since they need to be physically larger than most gates to keep their leakage low and to offer a low-resistance path to the power that the rest of the unit/core relies on when on.

i.e. in the pathological case of the exact same design having *no* power/clock gating whatsoever, the average power consumption would be massively higher, but the peak power consumption over an extremely short amount of time might be significantly *lower*? I do remember reading up some about that but I never thought about it much...

Without power and clock gating, the design is likely to be smaller. However, without guarantees as to what it might do in a pathological case, and unless we provision for a throttle or fail-safe, it might be kept smaller or slower out of fear of a transient event that could damage it.

Tsk tsk, don't tell that to people who make a big deal out of chips exceeding their TDPs without thermal/power throttling. I agree the simple reality is there's no way to guarantee a TDP without losing the *majority* of your performance or supporting some form of throttling. The only decision is how much you value performance stability versus throttling in real-world applications... (see e.g. iOS vs Android devices).

The thing, particularly for the oscilloscope measurements, is that allowing for above-TDP transients has been a thing probably since people thought to set down standards for putting metal blobs on top of their CPUs. I don't get at this point what Tomshardware's setup does that isn't like pointing out that cars on a highway with a low speed limit have speedometers that go much higher.

Nvidia is yet to reveal the full might of the Maxwell architecture, and that will come with the GM210 in due course, but for now, the GeForce GTX 980 and GTX 970 set new standards in the premium space.

If the chip doesn't maintain above-TDP power draw for periods that measure more than a few milliseconds, if none of the transient spikes exceed the maximum power rating (not the same thing as TDP), if the chip's local temperatures don't climb past ~100-120 C, and none of the packaging and silicon-level physical limits are exceeded, the oscilloscopes are nothing but irrelevant nitpicking at the rate of millions of times a second.

The amount we need to care about this is proportional to the measurement granularity.

If there is sustained draw above TDP, or regularly measured spikes that exceed the safe bounds listed for the chip or power delivery circuitry, it might be worth the bandwidth used to read the page.
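To make the two electrical conditions concrete, here is a minimal sketch of the kind of check that would be needed on a power trace: the longest continuous stretch above TDP, and whether any sample tops the maximum rating. The function names, the 5 ms default for "a few milliseconds", and the sample trace are all assumptions for illustration, not vendor figures; temperature and packaging limits are not modeled.

```python
def longest_run_above(samples_w, threshold_w, sample_period_s):
    """Longest continuous time (in seconds) the trace stays above threshold_w."""
    longest = current = 0
    for power in samples_w:
        current = current + 1 if power > threshold_w else 0
        longest = max(longest, current)
    return longest * sample_period_s


def worth_worrying(samples_w, tdp_w, max_rating_w, sample_period_s,
                   sustained_limit_s=0.005):
    """True if draw stays above TDP for too long or any spike exceeds the max rating."""
    sustained = longest_run_above(samples_w, tdp_w, sample_period_s) > sustained_limit_s
    over_max = any(power > max_rating_w for power in samples_w)
    return sustained or over_max


# Made-up microsecond-resolution trace in watts (illustrative only).
trace = [100, 290, 120, 280, 285, 110]
```

On a trace like this, microsecond spikes above TDP don't trip either condition unless the maximum rating is set below the spike height, which is the point being made about measurement granularity.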

I see no sign of that kind of analysis, and they might be interested in seeing how everything that has come before it has behaviors that show up with high-speed oscilloscopes.