Jawed

Legend

I don't think Kepler is the same ALU architecture. Certainly too early to say it's less elegant.

As I wrote in another post, I consider the GCN architecture much more elegant than the Fermi-Kepler one.

This is NVidia's "RV770 moment", though this time AMD has nowhere to hide, whereas NVidia back then still had the absolute performance crown.

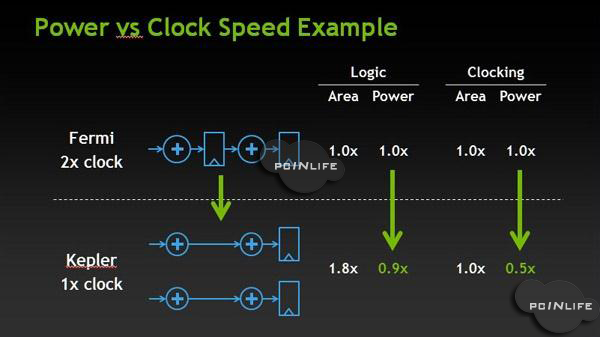

Maybe it was inevitable? G80 was already big enough for the aging 90nm process of the time, and putting more SIMD lanes per multiprocessor at the base clock wasn't an option if they wanted to meet the GFLOPS target. We'll never know why G80 used a hot clock in preference to a single clock, or why NVidia rejected the single-clock approach.
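A back-of-the-envelope sketch of that trade-off. It assumes the GeForce 8800 GTX launch specs for G80 (128 SPs, 575 MHz core clock, 1350 MHz shader clock) and counts one MAD (2 flops) per lane per clock, ignoring the co-issued MUL. The function names are hypothetical.

```python
def peak_gflops(lanes: int, clock_mhz: float, flops_per_lane_per_clock: int = 2) -> float:
    """Peak throughput in GFLOPS: lanes x clock (MHz) x flops per lane per clock."""
    return lanes * clock_mhz * flops_per_lane_per_clock / 1000.0


def lanes_needed(target_gflops: float, clock_mhz: float, flops_per_lane_per_clock: int = 2) -> float:
    """SIMD lanes required to reach target_gflops when running at clock_mhz."""
    return target_gflops * 1000.0 / (clock_mhz * flops_per_lane_per_clock)


# Assumed G80 (8800 GTX) figures: 128 lanes on a 1350 MHz hot clock, 575 MHz base clock.
HOT_CLOCK_MHZ = 1350.0
BASE_CLOCK_MHZ = 575.0
G80_LANES = 128

target = peak_gflops(G80_LANES, HOT_CLOCK_MHZ)
print(f"hot-clocked peak: {target:.1f} GFLOPS")
print(f"lanes needed at base clock: {lanes_needed(target, BASE_CLOCK_MHZ):.1f}")
```

Under these assumptions, matching the hot-clocked peak at the base clock would take roughly 300 lanes instead of 128 (a factor of 1350/575, about 2.35), which is the die-area cost the post alludes to.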

http://www.nvidia.com/object/win7-winvista-64bit-301.10-whql-driver.html

NVIDIA NVENC Support – adds support for the new hardware-based H.264 video encoder in GeForce GTX 680, providing up to 4x faster encoding performance while consuming less power.

Who, exactly, are you arguing with?

I don't see how anyone can argue that a hot-clocked design is easier than a single-clock design.

As I've already said, the only element I can think of here is that compilation complexity may be dramatically higher with Kepler, and compilation complexity was what NVidia was running away from back then.

I wouldn't go that far. RV770 was considerably smaller than G200 and opened up a huge perf/mm lead. This is just Nvidia taking the lead by a small margin.
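To make the perf/mm² point concrete, a small sketch that normalizes performance by die area. The die areas (about 256 mm² for RV770 and 576 mm² for GT200) are commonly cited figures; the relative performance values are hypothetical placeholders, not benchmark results.

```python
def perf_per_mm2(relative_perf: float, die_area_mm2: float) -> float:
    """Performance per unit die area (arbitrary performance units per mm^2)."""
    return relative_perf / die_area_mm2


def perf_density_ratio(perf_a: float, area_a: float, perf_b: float, area_b: float) -> float:
    """How many times more performance per mm^2 chip A delivers than chip B."""
    return perf_per_mm2(perf_a, area_a) / perf_per_mm2(perf_b, area_b)


# HYPOTHETICAL relative performance: RV770 at 80% of GT200. Die areas as commonly cited.
RV770_AREA_MM2 = 256.0
GT200_AREA_MM2 = 576.0
ratio = perf_density_ratio(0.8, RV770_AREA_MM2, 1.0, GT200_AREA_MM2)
print(f"RV770 perf/mm^2 advantage: {ratio:.2f}x")
```

Even a chip that is somewhat slower in absolute terms gets a large perf/mm² lead when its die is less than half the size, which is the contrast being drawn with a lead "by a small margin".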

Even then, it's doubtful that they will retain it vs Pitcairn with their competing chip in that segment.

Well, given that they had a MASSIVE deficit there and managed to outgun AMD in just one architectural generation, that's pretty impressive.

How do you know this? Sounds more like wishful thinking to me.

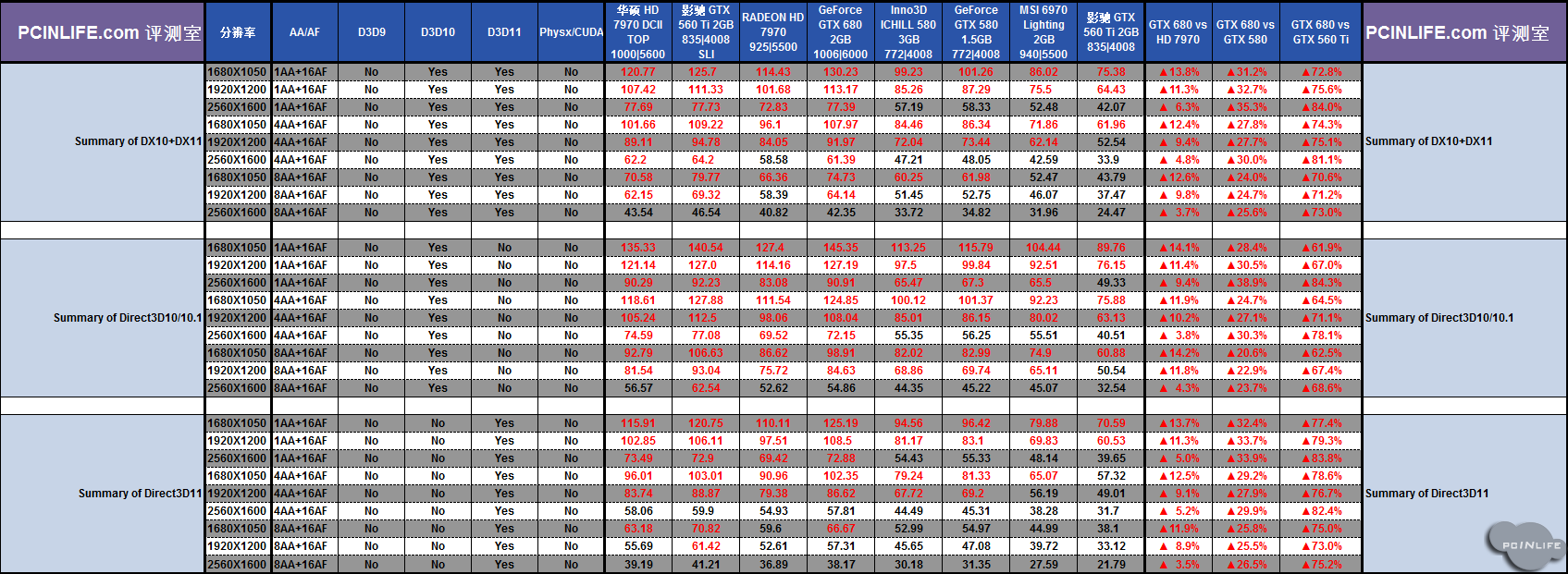

http://pc.pcinlife.com/Graphics/20120322/GeForce-GTX-680.html#%E5%89%8D%E8%A8%80

... many interesting theoretical numbers inside.

While AMD switched to a compute chip and Nvidia ditched theirs?

Because Pitcairn is hugely more efficient than Tahiti in mainstream gaming?

Thanks. Started the reviews thread.

WOW.