Yeah, I'd like to see someone build a Mass Effect game by "importing" real-world stuff. And that was a fairly realistic art style I've picked, but we could go with Gears or Ratchet or this new one, Wildstar... Scanned real-life stuff wouldn't even work for 5% of games.

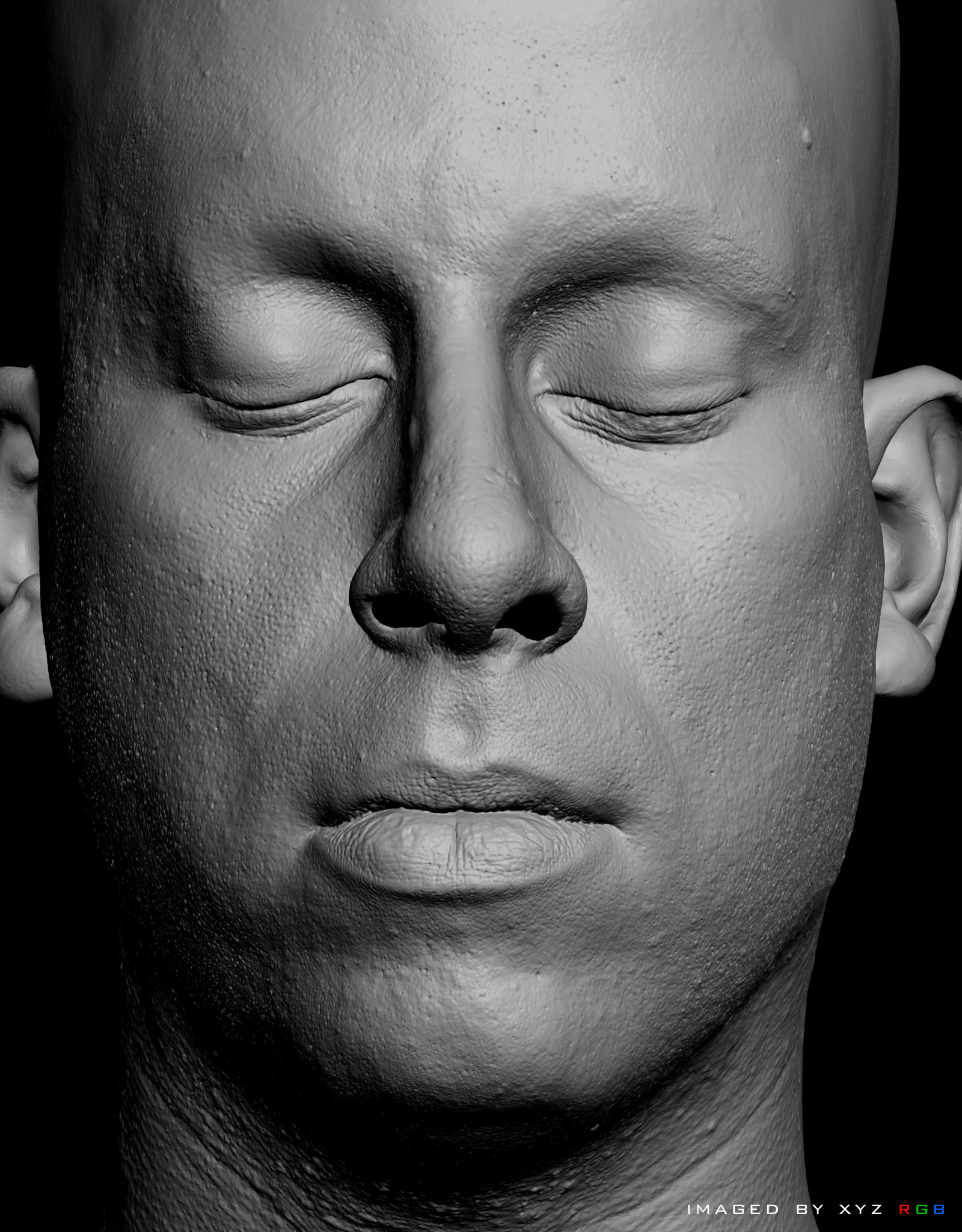

Also, it's not as easy or cheap as one would think. I've looked into proper scanning solutions, and they get hideously expensive as you go up in precision. There are various photo-based tools getting a lot of interest, but those aren't precise enough for closeups either.

But rebuilding the Euclideon demo with poly-based assets would certainly be an interesting challenge. Some Crysis fans might even give it a try...
