

NVIDIA GF100 & Friends speculation

- Thread starter Arty


Looks like a 4 to me in the next pic, picture #19.

I see K4G10325FE HC04. The only difference is the E, which is 6th gen and 8 banks.

Edit: Maybe they purchased the high-binned GDDR5 before they knew they had messed up the MC (Charlie), or before they knew they couldn't use the full clocks due to a power/TDP wall, and decided to leave it on there to allow users/AIBs to overclock it.

Samsung GDDR5 product sheet

Ah thanks that's a much better picture!

The above is the same memory as on the 5870, I think.

There was a story on Chiphell that Nvidia couldn't get access to the higher data rate memory because it was all reserved for ATI, and that Nvidia could only purchase sufficient volumes of the lower speed grade. That is obviously false, as they are both using the same manufacturer and grade.

I was looking on the Samsung site. Can anyone find the data sheet for the above memory? I couldn't find any spec sheets for GDDR5 anywhere there.

Who argued that? And where?

Maybe you should read what I quoted before my previous post?

My point was "look back at a similar time when NVIDIA was down and ATI was up...and what happened next".

The silly argument that "they're down now and won't be able to come back" is truly...silly.

I taught myself never to say never again. But yes it's not very likely that they won't get back on their feet in due time.

No, I'm actually suggesting what I said. Imagine if they had reacted in time and something like the GF104 had been in parallel development with GF100. Considering how crappy yields were last year under 40G, manufacturing costs would have been way too high to even think of production back then. However, a die like the 104, which should fall somewhere slightly over the Cypress die area (speculative estimate as always), wouldn't have faced the same problem.

I never suggested an entirely different strategy. I did say, though, that if the same strategy they've been using thus far isn't working for them anymore, they will change it, whether it is the way you suggested, simply going the route ATI did with the high end represented by two chips on a PCB, or something completely different.

In order to do so I'd think though that they either have to think of such a solution from the get go or damn early in development.

It's good then that that driver is actually the latest one provided by ATI, the famous 10.3a!

ROFL...

OK. But how does this

Maybe you should read what I quoted before my previous post?

For the time being NVIDIA is anything but "up" but that's beside the point.

imply this?

The silly argument that "they're down now and won't be able to come back" is truly...silly.

DegustatoR

Veteran

How about not changing their strategy entirely but merely modifying it? Something like keeping the single high-end chip strategy, but developing a performance/mainstream chip in parallel with the high-end chip?

Which they actually should've done this time already.

Was it possible? I personally don't think so if an IHV hasn't thought of such an option early enough in development cycles.

I taught myself never to say never again. But yes it's not very likely that they won't get back on their feet in due time.

It's happened before, for both NVIDIA and ATI. It's bound to happen again. If the money making company's bottom line is being affected, then they'll rethink whatever there is to rethink. Even if GF100 performance is not that bad, but the production costs are just too big (and the profits from other markets are not enough to offset those costs) then they won't be pursuing the same strategy.

I think some people just think that since GT200 was a big chip and GF100 is one too, that NVIDIA will keep at it forever, but that makes no sense, if financially their products aren't justifying their cost.

Ailuros said: No, I'm actually suggesting what I said. Imagine if they had reacted in time and something like the GF104 had been in parallel development with GF100. Considering how crappy yields were last year under 40G, manufacturing costs would have been way too high to even think of production back then. However, a die like the 104, which should fall somewhere slightly over the Cypress die area (speculative estimate as always), wouldn't have faced the same problem.

In order to do so I'd think though that they either have to think of such a solution from the get go or damn early in development.

And I was agreeing that that is a definite possibility. It's still a change in strategy, even if not a complete one, which is what I was getting at.

If GF104 really taped out a while back, as neliz suggested, then NVIDIA probably focused lots of resources on it after learning that GF100 wouldn't be what they intended it to be on TSMC's 40 nm process.

OK. But how does this

imply this?

I said "what I quoted before my previous post" :smile:

My previous post then, was to Ailuros. The post before my previous post was this one:

http://forum.beyond3d.com/showpost.php?p=1412126&postcount=4671

onethreehill

Newcomer

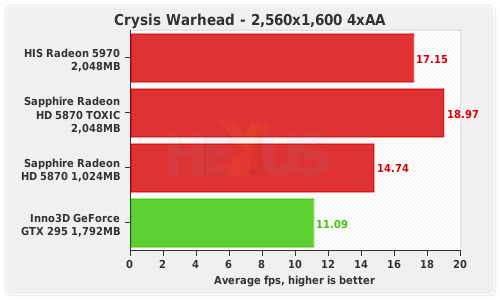

http://www.hexus.net/content/item.php?item=23079

Sapphire HD 5870 TOXIC 2GB Review

Clock speeds: 925MHz/5,000MHz

We take a look at the Sapphire Radeon HD 5870 TOXIC 2GB just before GTX 480 launches. Let's see how much it beats up on all current competition and conjecture whether it stands a good chance against you-know-what....

The results are not a mistake. A 2GB frame-buffer enables the Radeon HD 5870 GPU to breathe when really taxed. Indeed, it's faster than a Radeon HD 5970 - a card that has to share its frame-buffer between the GPUs.

A near-30 per cent performance increase over a generic HD 5870 is down to more than just clock-speed, clearly.

DegustatoR

Veteran

Was it possible? I personally don't think so if an IHV hasn't thought of such an option early enough in development cycles.

Sure it was. Their decision to go with GF100 first was made after AMD's decision to abandon high-end GPUs. They might've thought about LRB, of course, but everyone knows now how that turned out. It's pretty clear that they should've done a Cypress-level GF10x first, and then they could've taken whatever time they needed to get GF100 to market. It's a pretty clear tactical mistake on NV's part.

Breathe? More like gasp and sputter all the way up to 19fps.

Crysis requires nothing short of a miracle.

I'm not sure what exactly is going on with Crysis (and Crysis Warhead respectively) but I'd think with all the triangle orgy that goes on in either/or things wouldn't look as awkward.

LOL "fundamentally inferior" is very funny. You need to read fewer Charlie articles.

Also, are you seriously suggesting that by re-thinking their strategy of a big chip for the high-end, nothing will change?

As I stated before, if that same strategy still works for them, they will keep it. If not, they will change it. You really need to think outside of your red box and go back to another time where ATI was up and NVIDIA was down.

Not really Silus.

nVidia got lucky with G80 while ATI had a horror show with R600. Since then, perf-transistor and perf-watt have been clearly in favour of ATI. Fermi was supposed to address that but on early indications nVidia has gone backwards from g200.

Your problem is you believe nVidia has been unlucky for the past 3 years. Well, in my opinion you can't be unlucky for so long - this right now is the normal operating performance for nVidia and it's well behind ATI. ATI are doing nothing particularly groundbreaking but nVidia still can't even keep up, in fact they are falling further behind.

I don't think luck had anything to do with any of this. It's about making a series of design decisions. Obviously ATI went for raw power, while nVidia went for a more flexible, efficient design capable of more different things.

DegustatoR

Veteran

in fact they are falling further behind.

Not really. GF100 vs Cypress is a better situation for NV than GT200 vs RV770 was. They now have a lead in features, they have clearly more future-proof products, and they are still basically the only company with GPU-based products for HPC markets. And although it's not really revealed yet, I'd guess that AMD's transistor density advantage is lost on 40G.

The only advantage the 5800 has here, in my opinion, is considerably lower power consumption. But that's just not something that matters to me in any way.

Not really. GF100 vs Cypress is a better situation for NV than GT200 vs RV770 was. They now have a lead in features, they have clearly more future-proof products, and they are still basically the only company with GPU-based products for HPC markets. And although it's not really revealed yet, I'd guess that AMD's transistor density advantage is lost on 40G.

The only advantage the 5800 has here, in my opinion, is considerably lower power consumption. But that's just not something that matters to me in any way.

If you mean that, 6 months later, GF100 is a better situation for nV than G200 vs RV770 was, then I'd maybe agree with that.

If you consider where ATI actually are along the road in terms of a refresh etc, they are further ahead than with RV770 v G200.

LonelyMind

Newcomer

I hope they provide some free cheese with all that whine.

Talk about a "cheesy" joke.

Obviously ATI went for raw power, while nVidia went for a more flexible, efficient design capable of more different things.

I can't say I fully agree with this statement, but I find it amusing that during the DX8-9 era exactly the same words were often spun in favour of NVidia: ATI cards are more flexible and feature rich, but nVidia concentrates on raw power and that's all that matters.

LonelyMind

Newcomer

The bigger problem could be that the fan is rated at 0.3A and 4000 rpm. That's only 16% fan power.

The only reason I can see for such a powerful fan is the need for extremely high static pressure, due to lots of cooling fins that are long and close together. Dust buildup can only contribute negatively to that. Maybe they don't want another major recall à la "bumpgate"? Better safe than sorry?

We will need to wait for the reviews to know more.
