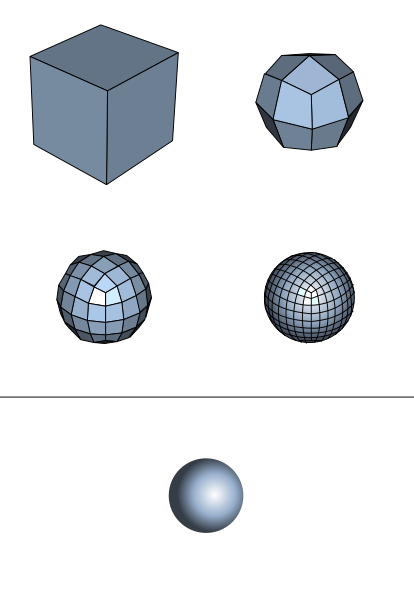

No, subdividing a cube (made from six polygons) will just get you a sphere-like blob.

That's why the base model needs more polygon detail to control the derived surface.
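To illustrate the cube claim, here is a minimal sketch of one common subdivision scheme, Catmull-Clark. The post doesn't say which scheme it means, so that choice is an assumption, and the helper names (`cube`, `catmull_clark`, `radius_ratio`) are made up for this example. It subdivides the six-quad cube a few times and measures how far the vertices are from all lying on one sphere. After a single step, every vertex already sits between about 0.96 and 1.06 units from the center, so the sharp corners are gone. That is why hard edges have to be held in place by extra geometry in the base mesh.

```python
import math
from collections import defaultdict

Vec = tuple[float, float, float]


def avg(points: list[Vec]) -> Vec:
    """Component-wise average of a list of 3D points."""
    n = len(points)
    return tuple(sum(p[k] for p in points) / n for k in range(3))


def cube() -> tuple[list[Vec], list[list[int]]]:
    """The six-quad cube; vertex i takes its x/y/z signs from the bits of i."""
    verts = [(x, y, z) for x in (-1.0, 1.0) for y in (-1.0, 1.0) for z in (-1.0, 1.0)]
    faces = [[0, 1, 3, 2], [4, 6, 7, 5], [0, 4, 5, 1],
             [2, 3, 7, 6], [0, 2, 6, 4], [1, 5, 7, 3]]
    return verts, faces


def catmull_clark(verts, faces):
    """One Catmull-Clark step for a closed mesh (every edge has two faces)."""
    face_pts = [avg([verts[i] for i in f]) for f in faces]

    # Map each undirected edge to its faces, and each vertex to its faces/edges.
    edge_faces = defaultdict(list)
    vert_faces = defaultdict(list)
    for fi, f in enumerate(faces):
        for k, a in enumerate(f):
            b = f[(k + 1) % len(f)]
            edge_faces[(min(a, b), max(a, b))].append(fi)
            vert_faces[a].append(fi)
    vert_edges = defaultdict(list)
    for a, b in edge_faces:
        vert_edges[a].append((a, b))
        vert_edges[b].append((a, b))

    # Original vertices move to (F + 2R + (n - 3)P) / n and keep their indices.
    new_verts = []
    for i, p in enumerate(verts):
        n = len(vert_faces[i])
        F = avg([face_pts[fi] for fi in vert_faces[i]])
        R = avg([avg([verts[a], verts[b]]) for a, b in vert_edges[i]])
        new_verts.append(tuple((F[k] + 2 * R[k] + (n - 3) * p[k]) / n for k in range(3)))

    # Edge points: average of the two endpoints and the two adjacent face points.
    edge_ids = {}
    for (a, b), fs in edge_faces.items():
        edge_ids[(a, b)] = len(new_verts)
        new_verts.append(avg([verts[a], verts[b]] + [face_pts[fi] for fi in fs]))

    # Face points go last.
    face_ids = []
    for fp in face_pts:
        face_ids.append(len(new_verts))
        new_verts.append(fp)

    def eid(a, b):
        return edge_ids[(min(a, b), max(a, b))]

    # Each n-gon splits into n quads: vertex, next edge pt, face pt, previous edge pt.
    new_faces = []
    for fi, f in enumerate(faces):
        for k, cur in enumerate(f):
            prev, nxt = f[k - 1], f[(k + 1) % len(f)]
            new_faces.append([cur, eid(cur, nxt), face_ids[fi], eid(prev, cur)])
    return new_verts, new_faces


def radius_ratio(verts) -> float:
    """Max / min vertex distance from the origin (1.0 = all on one sphere).

    The bare cube also scores 1.0 (all corners sit at sqrt(3)), so this is
    only a useful roundness hint once edge and face points exist (level >= 1).
    """
    r = [math.sqrt(sum(c * c for c in v)) for v in verts]
    return max(r) / min(r)


verts, faces = cube()
for level in range(1, 4):
    verts, faces = catmull_clark(verts, faces)
    print(level, len(faces), round(radius_ratio(verts), 3))
```

Each step multiplies the face count by four (24, 96, 384 quads), but the overall shape is decided by the six original faces. Subdividing the cube more just refines the same blob.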

Now for DX11-compatible tessellation, that's a completely different issue. Complex mechanical surfaces, like guns or combat armor, require very precise curves and corners. Reproducing these with tessellation and displacement mapping isn't going to look good enough unless you use an insane number of polygons (subpixel-sized). So it's far more efficient to stick with good old modeling and normal mapping.

Look at the elbow armor piece on Marcus in this image:

You can see some pixelation there from the normal map. It isn't really distracting, though, because the texture resolution is pretty high: most Gears characters use two 2K maps, and they even mirror as many parts as they can (so they only store textures for one arm and one leg).

Reproducing just this detail with a displacement map would require a little more than two 2K maps' worth of texels in geometry (2 × 2048 × 2048 ≈ 8.4 million texels), which is already about 8 million polygons.
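The arithmetic behind that figure, as a quick Python check. The one-polygon-per-texel density is an assumption (roughly what it takes for the mesh to carry the same detail as the normal map). The count also ignores mirroring, which would push the real number higher, since mirrored parts share texels but not geometry.

```python
TEXTURE_SIZE = 2048  # "2K" texels per side
TEXTURE_COUNT = 2    # two 2K maps per character, per the post

texels = TEXTURE_COUNT * TEXTURE_SIZE * TEXTURE_SIZE

# Assumption: one displaced quad per texel so the geometry matches the
# normal map's detail; each quad is drawn as two triangles on the GPU.
quads = texels
triangles = 2 * quads

print(f"{texels:,} texels -> ~{quads / 1e6:.1f}M quads (~{triangles / 1e6:.1f}M triangles)")
# prints "8,388,608 texels -> ~8.4M quads (~16.8M triangles)"
```

The "about 8 million polygons" figure counts quads; since GPUs rasterize triangles, that's closer to 16.8 million triangles.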

With less geometry than that, the model could become quite jagged and noisy, not smooth at all.
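A 1D cross-section sketch of where the jaggedness comes from. It uses a hypothetical height profile with a narrow bevel (1% of the strip's width, standing in for a hard armor edge). With displacement mapping, the height is only read at the tessellated vertices, and the flat triangles between them interpolate linearly. `bevel_height` and `displaced_error` are illustrative names, not taken from any engine or API.

```python
def bevel_height(x: float, start: float = 0.5, width: float = 0.01) -> float:
    """Hypothetical cross-section of a hard armor edge: flat, a narrow bevel, flat."""
    return min(max((x - start) / width, 0.0), 1.0)


def displaced_error(segments: int, samples: int = 10_000) -> float:
    """Worst height error of a strip tessellated into `segments` pieces and displaced."""
    # The height map is only sampled at the tessellated vertices...
    heights = [bevel_height(i / segments) for i in range(segments + 1)]
    worst = 0.0
    for j in range(samples + 1):
        x = j / samples
        # ...and the flat triangles in between interpolate linearly.
        i = min(int(x * segments), segments - 1)
        t = x * segments - i
        approx = heights[i] + t * (heights[i + 1] - heights[i])
        worst = max(worst, abs(approx - bevel_height(x)))
    return worst


for segments in (8, 64, 1000):
    print(segments, round(displaced_error(segments), 3))
```

With 8 segments, the bevel gets smeared across a whole segment (worst error about 0.92 of the bevel height). At 1000 segments, the vertex spacing (0.001) is finer than the 0.01-wide bevel and the error drops to rounding noise. In other words, the geometry has to be about as dense as the detail it's supposed to reproduce.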

It's not an unsolvable problem, though: you can be careful about what goes into the geometry and what goes into the normal maps, and distribute polygons carefully on the base mesh. Basically, all the main pieces of the armor would have to be modeled. There's a reason the AvP demo doesn't try to use displacement on the Colonial Marine and goes for the more organic aliens instead... So tessellation and displacement in their current form are just not fast and efficient enough to produce details like that on their own, and what little they can add on current consoles might not be worth the effort.
